Simio and Simulation: Modeling, Analysis, Applications - 7th Edition

Chapter 4 First Model

The primary goal of this chapter is to introduce the simulation model-building process using Simio. Hand-in-hand with simulation-model building goes the statistical analysis of simulation output results, so as we build our models we’ll also exercise and analyze them to see how to make valid inferences about the system being modeled. The chapter first will build a complete Simio model and introduce the concepts of model verification, experimentation, and statistical analysis of simulation output data. Although the basic model-building and analysis processes themselves aren’t specific to Simio, we’ll focus on Simio as an implementation vehicle.

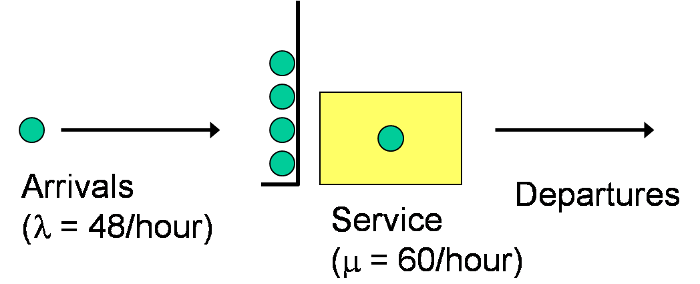

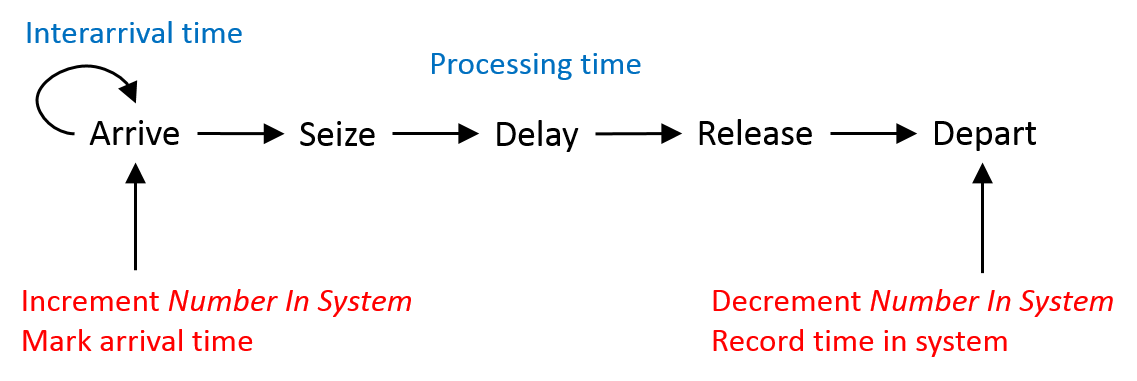

The initial model used in this chapter is very simple, and except for run length is basically the same as Model 3-4 done manually in Section 3.3.1 and Model 3-5 in a spreadsheet model in Section 3.3.2. This model’s familiarity and simplicity will allow us to focus on the process and the fundamental Simio concepts, rather than on the model. We’ll then make some easy modifications to the initial model to demonstrate additional Simio concepts. Then, in subsequent chapters we’ll successively extend the model to incorporate additional Simio features and simulation-modeling techniques to support more comprehensive systems. This is a simple single-server queueing system with arrival rate \(\lambda=48\) entities/hour and service rate \(\mu=60\) entities/hour (Figure 4.1).

Figure 4.1: Example single-server queueing system.

This system could represent a machine in a manufacturing system, a teller at a bank, a cashier at a fast-food restaurant, or a triage nurse at an emergency room, among many other settings. For our purposes, it really doesn’t matter what is being modeled — at least for the time being. Initially, assume that the arrival process is Poisson (i.e., the interarrival times are exponentially distributed and independent of each other), the service times are exponential and independent (of each other and of the interarrival times), the queue has infinite capacity, and the queue discipline will be first-in first-out (FIFO). Our interest is in the typical queueing-related metrics such as the number of entities in the queue (both average and maximum), the time an entity spends in the queue (again, average and maximum), utilization of the server, etc. If our interest is in long-run or steady-state behavior, this system is easily analyzed using standard queueing-analysis methods (as described in Chapter 2), but our interest here is in modeling this system using Simio.

This chapter actually describes two alternative methods to model the queuing system using Simio. The first method uses the Facility Window and Simio objects from the Standard Library (Section 4.2) . The second method uses Simio Processes (Section 4.3) to construct the model at a lower level, which is sometimes needed to model things properly or in more detail. These two methods are not completely separate — the Standard Library objects are actually built using Processes. The pre-built Standard-Library objects generally provide a higher-level, more natural interface for model building, and combine animation with the basic functionality of the objects. Custom-constructed Processes provide a lower-level interface to Simio and are typically used for models requiring special functionality or faster execution. In Simio, you also have access to the Processes that comprise the Standard Library objects, but that’s a topic for a future chapter.

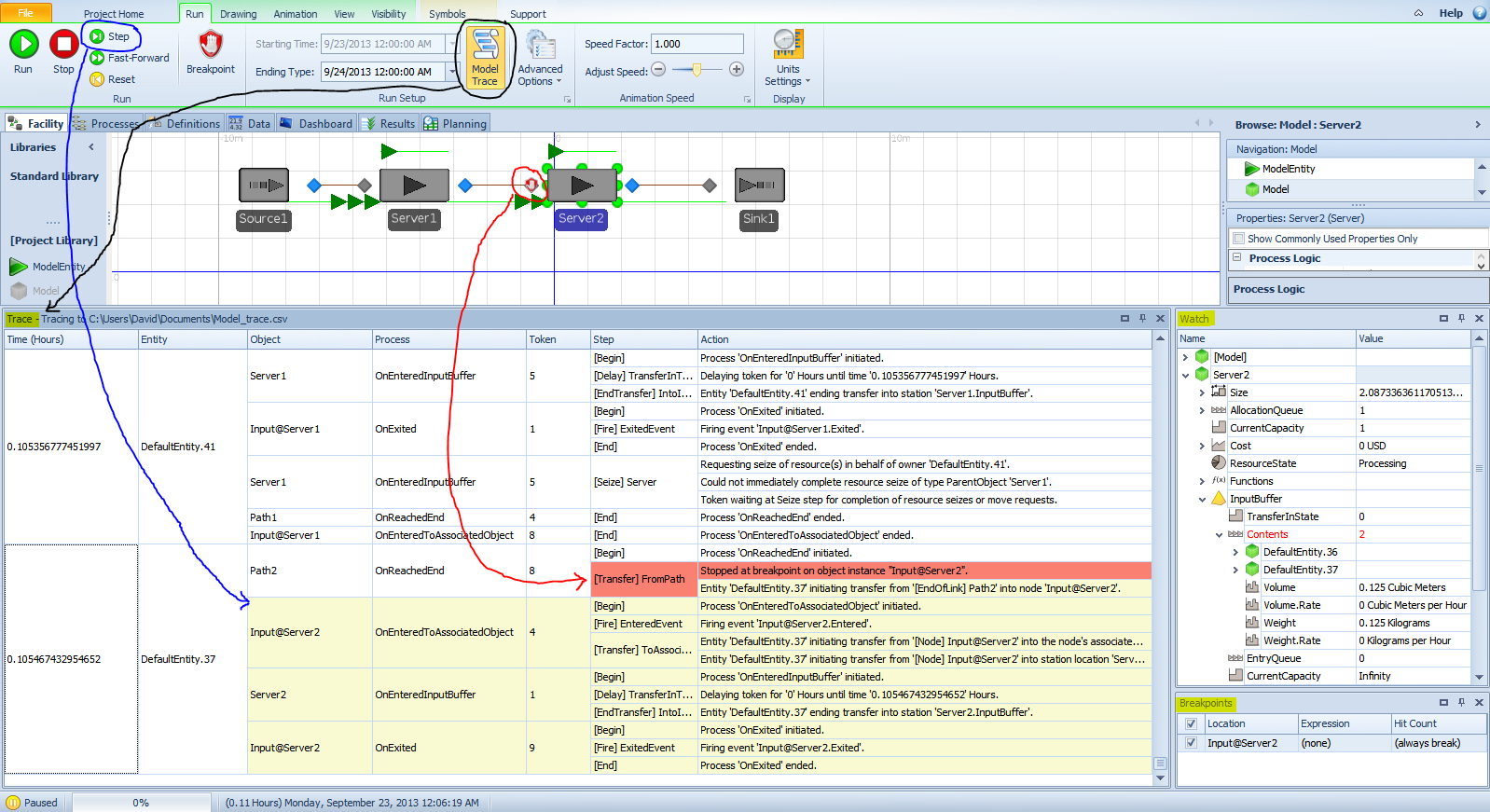

The chapter starts with a tour around the Simio window and user interface in Section 4.1. As mentioned above, Section 4.2 guides you through how to build a model of the system in the Facility Window using the Standard Library objects. We then experiment a little with this model, as well as introduce the important concepts of statistically independent replications, warm-up, steady-state vs. terminating simulations, and verify that our model is correct. Section 4.3 re-builds the first model with Simio Processes rather than objects. Section 4.4 adds context to the initial model and modifies the interarrival and service-time distributions. Sections 4.5 and 4.6 show how to use innovative approaches enabled by Simio for effective statistical analysis of simulation output data. Section 4.8 describes the basic Simio animation features and adds animation to the models. As your models start to get more interesting you will start finding unexpected behavior. So we will end this chapter with Section 4.9 describing the basic procedure to find and fix model problems. Though the systems being modeled in this chapter are quite simple, after going through this material you should be well on your way to understanding not only how to build models in Simio, but also how to use them.

4.1 The Basic Simio User Interface

Before we start building Simio models, we’ll take a quick tour in this section through Simio’s user interface to introduce what’s available and how to navigate to various modeling components.

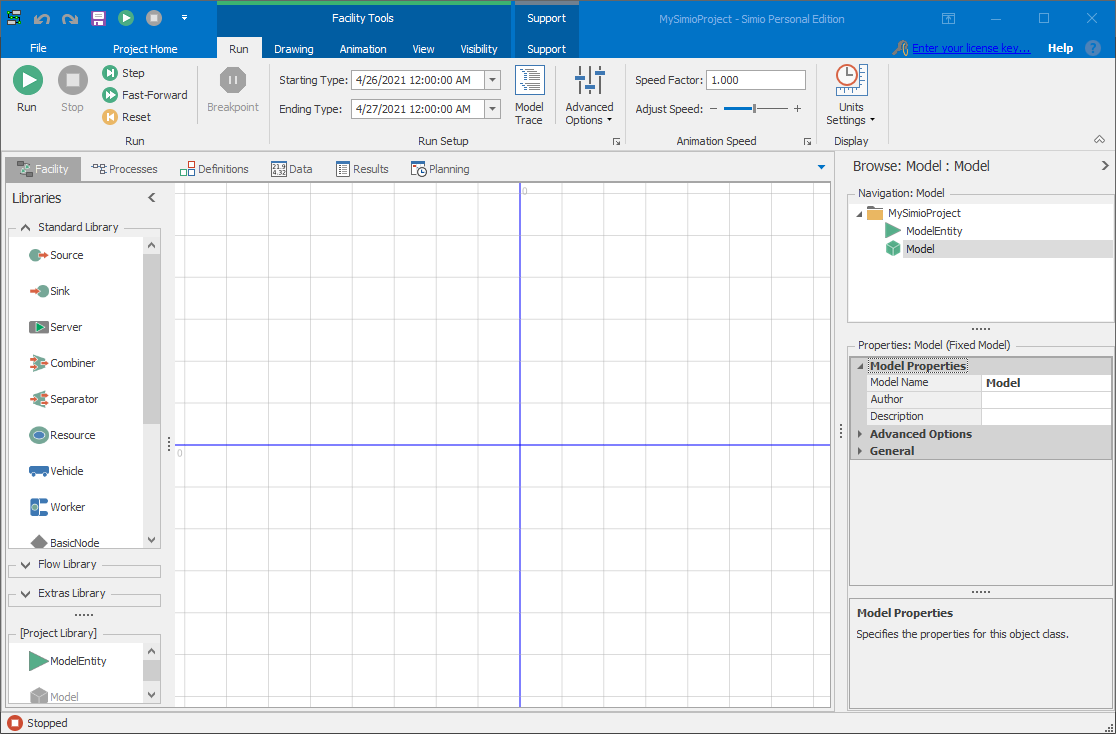

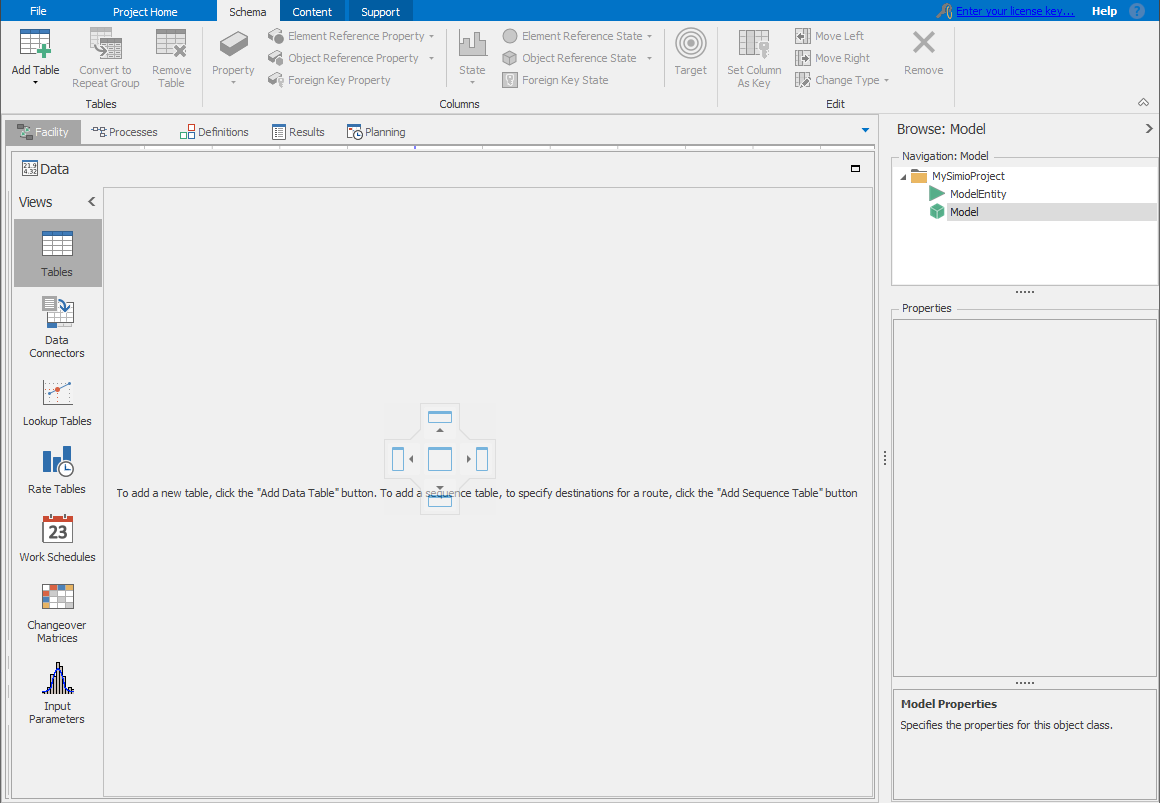

When you first load Simio you’ll see either a new Simio model — the default behavior — or the most recent model that you had previously opened if you have the Load most recent project at startup checkbox checked on the File page. Figure 4.2 shows the default initial view of a new Simio model. Although you may have a natural inclination to start model building immediately, we encourage you to take time to explore the interface and the Simio-related resources provided through the Support ribbon (described below). These resources can save you an enormous amount of time.

Figure 4.2: Facility window in the new model.

4.1.1 Ribbons

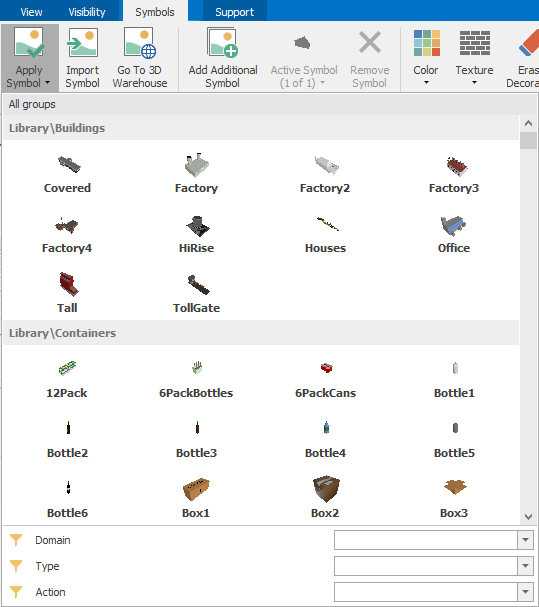

Ribbons are the innovative interface components first introduced with Microsoft \(^{TM}\) Office 2007 to replace the older style of menus and toolbars. Ribbons help you quickly complete tasks through a combination of intuitive organization and automatic adjustment of contents. Commands are organized into logical groups, which are collected together under tabs. Each tab relates to a type of activity, such as running a model or drawing symbols. Tabs are automatically displayed or brought to the front based on the context of what you’re doing. For example, when you’re working with a symbol, the Symbols tab becomes prominent. Note that which specific ribbons are displayed depends on where you are in the project (i.e., what items are selected in the various components of the interface).

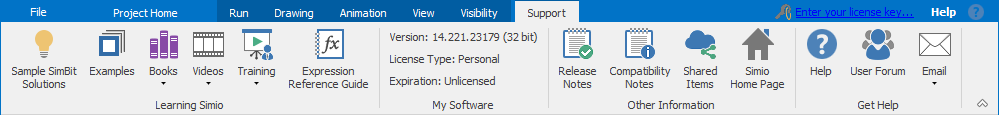

4.1.2 Support Ribbon

The Simio Support ribbon (see Figure 4.3) includes many of the resources available to learn and get the most out of Simio, as well as how to contact the Simio people with ideas, questions, or problems. Additional information is available through the link to Simio Technical Support (http://www.simio.com/resources/technical-support/) where you will find a description of the technical-support policies and links to the Simio User Forum and other Simio-related groups. Simio version and license information is also available on the Support ribbon. This information is important whenever you contact Support.

Figure 4.3: Simio Support ribbon..

Simio includes comprehensive help available at the touch of the F1 key or the ? icon in the upper right of the Simio window. If you prefer a printable version, you’ll find a link to the Simio Reference Guide (a .pdf file). The help and reference guides provide an indexed searchable resource describing basic and advanced Simio features. For additional training opportunities you’ll also find links to training videos and other on-line resources. The Support ribbon also has direct links to open example projects and SimBits (covered below), and to access Simio-related books, release and compatibility nodes, and the Simio user forum.

4.1.3 Project Model Tabs

In addition to the ribbon tabs near the top of the window, if you have a Simio project open, you’ll see a second set of tabs just below the ribbon. These are the project model tabs used to select between multiple windows that are associated with the active model or experiment. The windows that are available depend on the object class of the selected model, but generally include Facility, Processes, Definitions, Data, and Results. If you are using an RPS Simio license, you will also see the Planning tab. Each of these will be discussed in detail later, but initially you’ll spend most of your time in the Facility Window where the majority of model development, testing, and interactive runs are done.

4.1.4 Object Libraries

Simio object libraries are collections of object definitions, typically related to a common modeling domain or theme. Here we give a brief introduction to Libraries — Section \(\ref{sec-what-is-object}\) provides additional details about objects, libraries, models and the relationships between them. Libraries are shown on the left side of the Facility Window. In the standard Simio installation, the Standard Library, the Flow Library, and the Extras Libary are attached by default and the Project Library is an integral part of the project. The Standard, Flow, and Extras libraries can be opened by clicking on their respective names at the bottom of the libraries window (only one can be open at a time). The Project Library remains open and can be expanded/condensed by clicking and dragging on the .... separator. Other libraries can be added using the Load Library button on the Project Home ribbon.

The Standard Object Library on the left side of the Facility Window is a general-purpose set of objects that comes standard with Simio. Each of these objects represents a physical object, device, or item that you might find if you looked around a facility being modeled. In many cases you’ll build most of your model by dragging objects from the Standard Library and dropping them into your Facility Window. Table 4.1 lists the objects in the Simio Standard Library.

| Object | Description |

|---|---|

| Source | Generates entities of a specified type and arrival pattern. |

| Sink | Destroys entities that have completed processing in the model. |

| Server | Represents a capacitated process such as a machine or service operation. |

| Combiner | Combines multiple entities together with a parent entity (e.g., a pallet). |

| Separator | Splits a batched group of entities or makes copies of a single entity. |

| Resource | A generic object that can be seized and released by other objects. |

| Vehicle | A transporter that can follow a fixed route or perform on-demand pickups/dropoffs. |

| Worker | Models activities associated with people. Can be used as a moveable object or a transporter and can follow a shift schedule. |

| BasicNode | Models a simple intersection between multiple links. |

| TransferNode | Models a complex intersection for changing destination and travel mode. |

| Connector | A simple zero-time travel link between two nodes. |

| Path | A link over which entities may independently move at their own speeds. |

| TimePath | A link that has a specified travel time for all entities. |

| Conveyor | A link that models both accumulating and non-accu-mulating conveyor devices. |

The Project Library includes the objects defined in the current project. As such, any new object definitions created in a project will appear in the Project Library for that project. Objects in the Project Library are defined/updated via the Navigation Window (described below) and they are used (placed in the Facility Window) via the Project Library. In order to simplify modeling, the Project Library is pre-populated with a ModelEntity object. The Flow Library includes a set of objects for modeling flow processing systems and the Extras Library includes a set of material handling and warehouse-related objects. Refer to the Simio Help for more information on the use of these libraries. Other domain-specific libraries are available on the Simio User Forum and can be accessed using the Shared Items button on the Support ribbon. The methods for building your own objects and libraries will be discussed in Chapter 11.

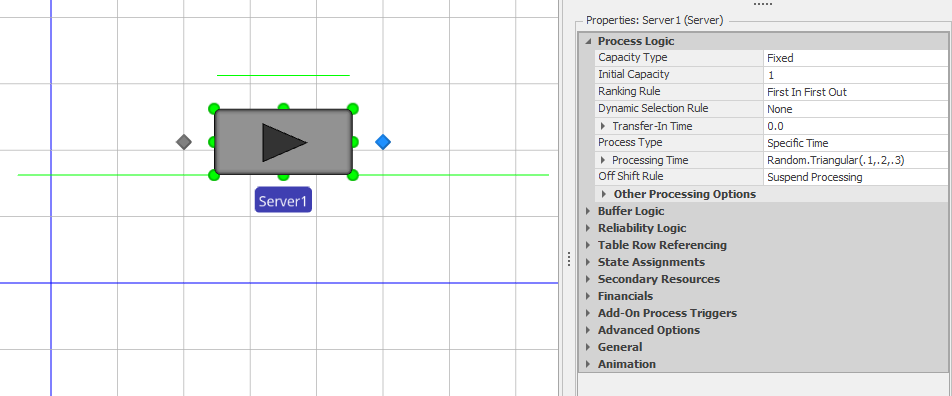

4.1.5 Properties Window

The Properties Window on the lower right side displays the properties (characteristics) of any object or item currently selected. For example, if a Server has been placed in the Facility Window, when it’s selected you’ll be able to display and change its properties in the Properties Window (see Figure 4.4. The gray bars indicate categories or groupings of similar properties. By default the most commonly changed categories are expanded so you can see all the properties. The less commonly changed categories are collapsed by default, but you can expand them by clicking on the + sign to the left. If you change a property value it will be displayed in bold and its category will be expanded to make it easy to discern changes from default values. To return a property to its default value, right click on the property name and select Reset.

Figure 4.4: Properties for the Server object.

4.1.7 SimBits

One feature you’ll surely want to exploit is the SimBits collection. SimBits are small, well-documented models that illustrate a modeling concept or explain how to solve a common problem. The full documentation for each can be found in an accompanying automatically loaded .pdf file, as well as in the on-line help. Although they can be loaded directly from the Open menu item (replacing the currently open model), perhaps the best way to find a helpful SimBit is to look for the SimBit button on the Support ribbon. On the target page for this button you will find a categorized list of all of the SimBits with a filtering mechanism that lets you quickly find and load SimBits of interest (in this case, loading into a second copy of Simio, preserving your current workspace). SimBits are a helpful way to learn about new modeling techniques, objects, and constructs.

4.1.8 Moving/Configuring Windows and Tabs

The above discussions refer to the default window positions, but some window positions are easily changed. Many design-time and experimentation windows and tabs (for example the Process window or individual data table tabs) can be changed from their default positions by either right-clicking or dragging. While dragging, you’ll see two sets of arrows called layout targets appear: a set near the center of the window and a set near the outside of the window. For example Figure 4.5 illustrates the layout targets just after you start dragging the tab for a table. Dropping the table tab onto any of the arrows will cause the table to be displayed in a new window at that location.

Figure 4.5: Dragging a tabbed window to a new display location.

You can arrange the windows into vertical and horizontal tab groups by right clicking any tab and selecting the appropriate option. You can also drag some windows (Search, Watch, Trace, Errors, and object Consoles) outside of the Simio application, even to another monitor, to take full advantage of your screen real estate. If you ever regret your custom arrangement of the windows or you lose a window (that is, it should be displayed but you can’t find it), use the Reset button on the Project Home ribbon to restore the default window configuration.

4.2 Model 4-1: First Project Using the Standard Library Objects

In this section we’ll build the basic model described above in Simio, and also do some experimentation and analysis with it, as follows: Section 4.2.1 takes you through how to build the model in Simio, in what’s called the Facility Window using the Standard Library, run it (once), and look through the results. Next, in Section 4.2.2 we’ll use it to do some initial informal experimentation with the system to compare it to what standard queueing theory would predict. Section 4.2.3 introduces the notions of statistically replicating and analyzing the simulation output results, and how Simio helps you do that. In Section 4.2.4 we’ll talk about what might be roughly described as long-run vs. short-run simulations, and how you might need to warm up your model if you’re interested in how things behave in the long run. Section 4.2.5 revisits some of the same questions raised in Section 4.2.2, specifically trying to verify that our model is correct, but now we are armed with better tools like warm-up and statistical analysis of simulation output data. All of our discussion here is for a situation when we have only one scenario (system configuration) of interest; we’ll discuss the more common goal of comparing alternative scenarios in Sections 5.5 and 9.1.1, and will introduce some additional statistical tools in those sections for such goals.

4.2.1 Building the Model

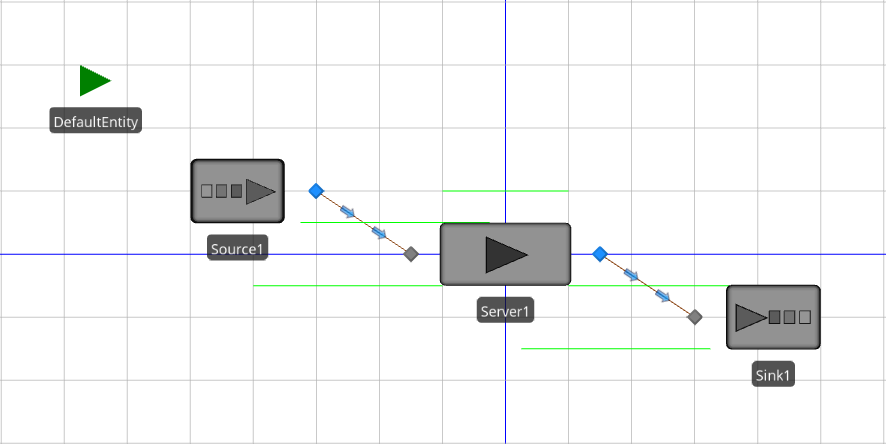

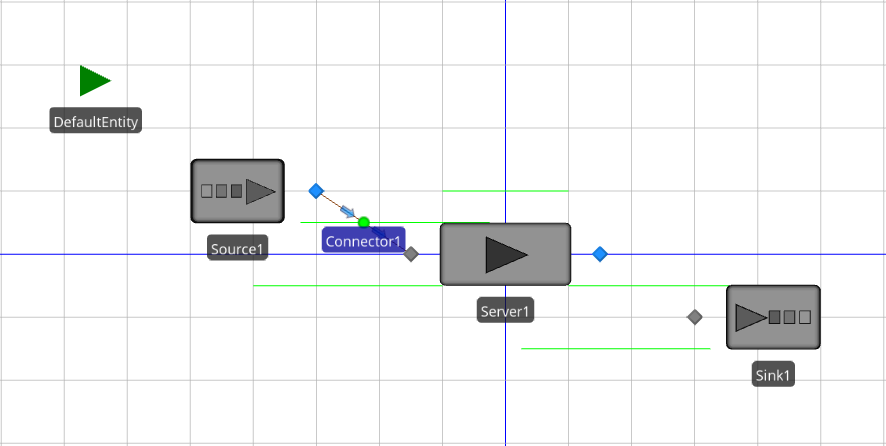

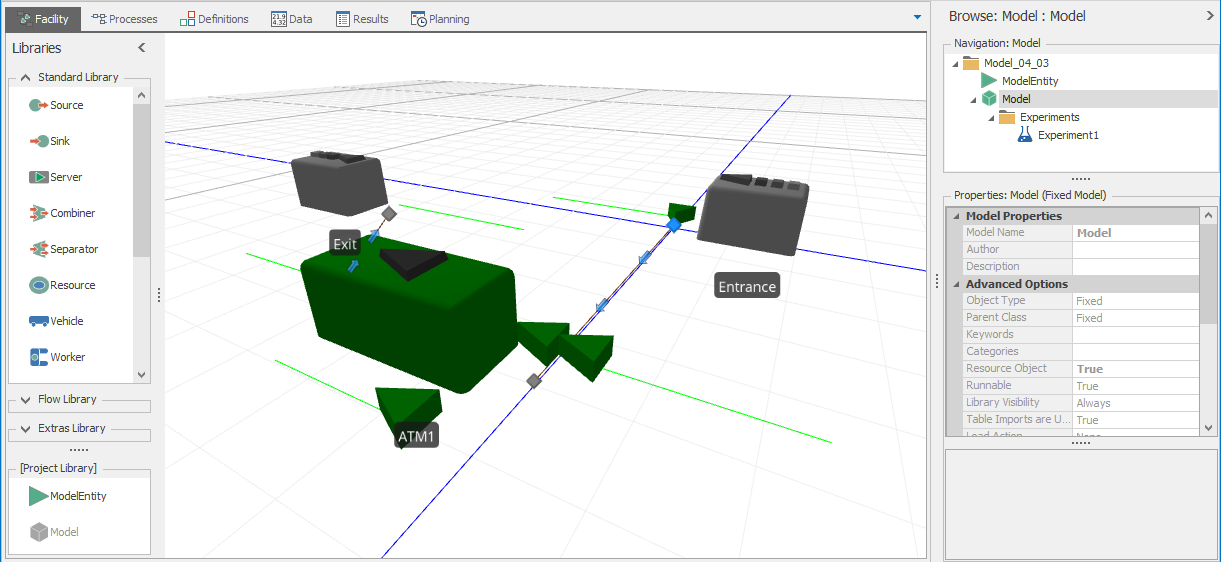

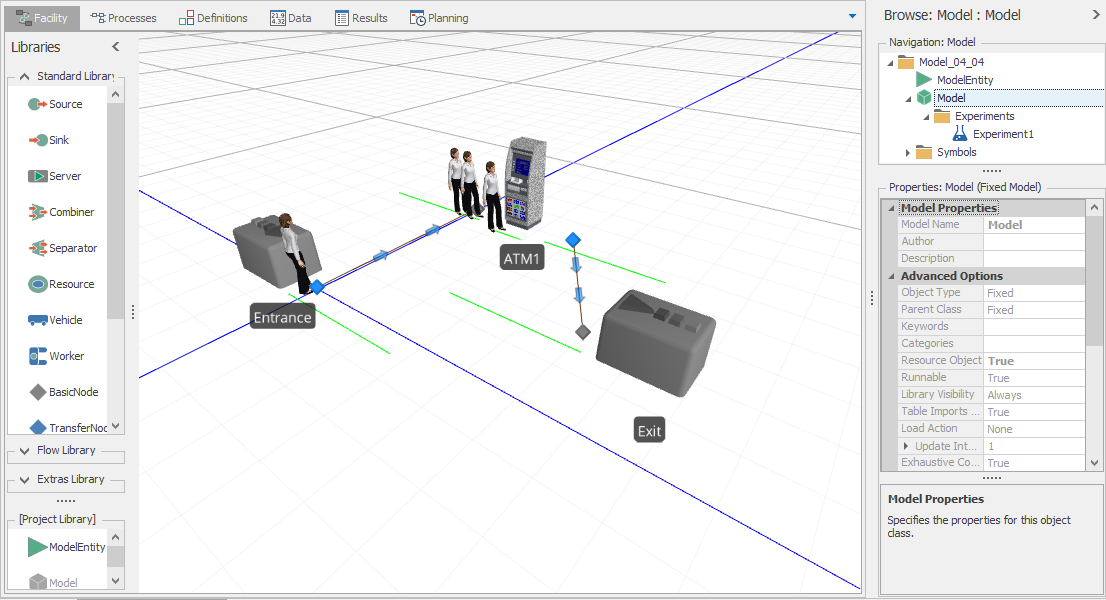

Using Standard Library objects is the most common method for building Simio models. These pre-built objects will be sufficient for many common types of models. Figure 4.6 shows the completed model of our queueing system using Simio’s Facility Window (note that the Facility tab is highlighted in the Project Model Tabs area). We’ll describe how to construct this model step by step in the following paragraphs.

Figure 4.6: Completed Simio model (Facility Window) of the single-server queueing system — Model 4-1.

The queueing model includes entities, an entity-arrival process, a service process, and a departure process. In the Simio Facility Window, these processes can be modeled using the Source, Server, and Sink objects. To get started with the model, start the Simio application and, if necessary, create a new model by clicking on the New item in the File page (accessible from the File ribbon). Once the default new model is open, make sure that the Facility Window is open by clicking on the Facility tab, and that the Standard Library is visible by clicking on the Standard Library section heading in the Libraries bar on the left; Figure 4.2 illustrates this. First, add a ModelEntity object by clicking on the ModelEntity object in the Project Library panel, then drag and drop it onto the Facility Window (actually, we’re dragging and dropping an instance of it since the object definition stays in the Project Library panel – we will elaborate on this concept in Section 5.1). Next, click on the Source object in the Standard Library, then drag and drop it into the Facility Window. Similarly, click, drag, and drop an instance of each of the Server and Sink objects onto the Facility Window. The next step is to connect the Source, Server, and Sink objects in our model. For this example, we’ll use the standard Connector object, to transfer entities between nodes in zero simulation time. To use this object, click on the Connector object in the Standard Library. After selecting the Connector, the cursor changes to a set of cross hairs. With the new cursor, click on the Output Node of the Source object (on its right side) and then click on the Input Node of the Server object (on its left side). This tells Simio that entities flow (instantly, i.e., in zero simulated time) out of the Source object and into the Server object. Follow the same process to add a connector from the Output Node of the Server object to the Input Node of the Sink object. Figure 4.7 shows the model with the connector in place between the Source and Server objects.

Note that placing the ModelEntity instance is not technically required since Simio will automatically add the entity instance if you do not manually add it to the model (this is why the ModelEntity object is in the Project Library rather than the Standard Library like the other objects that we are using here). However, we explicitly add the instance here for clarity of the idea that our simple model includes instances of ModelEntity, Source, Server, Sink, and Connector.

Figure 4.7: Model 4-1 with the Source and Server objects linked by a Connector object.

By the way, now would be a good time to save your model (“save early, save often,” is a good motto for every simulationist). We chose the name Model_04_01.spfx (spfx is the default file-name extension for Simio project files), following the naming convention for our example files given in Section 3.2; all our completed example files are available on the book’s website, as described in Appendix C.

Before we continue constructing our model, we need to mention that the Standard Library objects include several default queues. These queues are represented by the horizontal green lines in Figure 4.7. Simio uses queues where entities potentially wait — i.e., remain in the same logical place in the model for some period of simulated time. Note that, technically, tokens rather than entities wait in Simio queues, but we’ll discuss this issue in more detail in Chapter 5 and for now it’s easier to think of entities waiting in the queues since that is what you see in the animation. Model 4-1 includes the following queues:

Source1 OutputBuffer.Contents — Used to store entities waiting to move out of the Source object.

Server1 InputBuffer.Contents — Used to store entities waiting to enter the Server object.

Server1 Processing.Contents — Used to store entities currently being processed by the Server object.

Server1 OutputBuffer.Contents — Used to store entities waiting to exit the Server object.

Sink1 InputBuffer.Contents — Used to store entities waiting to enter the Sink object.

In our simple single-server queueing system in Figure 4.1, we show only a single queue and this queue corresponds to the InputBuffer.Contents queue for the Server1 object. The Processing.InProcess queue for the Server1 object stores the entity that’s being processed at any point in simulated time. The other queues in the Simio model are not used in our simple model (actually, the entities simply move through these queues instantly, in zero simulated time).

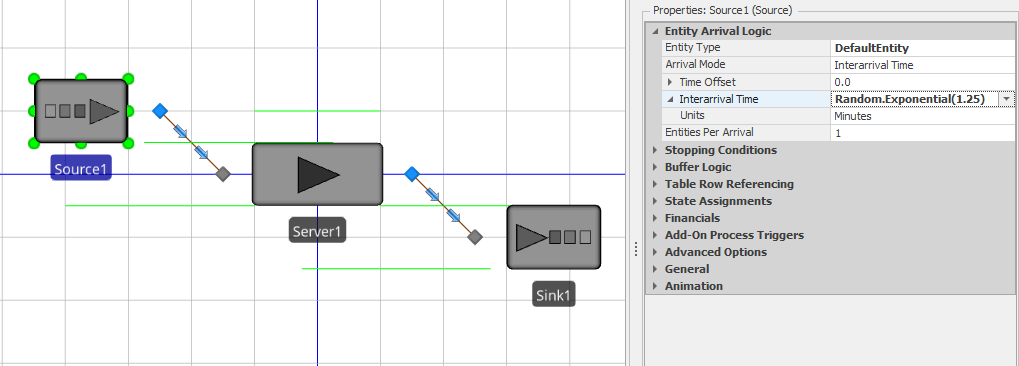

Now that the basic structure of the model is complete, we’ll add the model parameters to the objects. For our simple model, we need to specify probability distributions governing the interarrival times and service times for the arriving entities. The Source object creates arriving entities according to a specified arrival process. We’d like a Poisson arrival process at rate \(\lambda = 48\) entities per hour, so we’ll specify that the entity interarrival times are exponentially distributed with a mean of 1.25 minutes (a time between entities of \(= 60/48\) corresponds to a rate of 48/hour). In the formal Simio object model, the interarrival time is a property of the Source object. Object properties are set and edited in the Properties Window — select the Source object (click on the object) and the Properties Window will be displayed on the right panel (see Figure 4.8).

Figure 4.8: Setting the interarrival-time distribution for the Source object.

The Source object’s interarrival-time distribution is set by assigning the Interarrival Time property to Random.Exponential(1.25) and the Units property to Minutes; click on the arrow just to the left of Interarrival Time to expose the Units property and use the pull-down on the right to select Minutes. This tells Simio that each time an entity is created, it needs to sample a random value from an exponential distribution with mean \(1.25\), and to create the next entity that far into the future for an arrival rate of \(\lambda = 60 \times (1/1.25) = 48\) entities/hour, as desired. The random-variate functions available via the keyword Random are discussed further in Section 4.4. The Time Offset property (usually set to 0) determines when the initial entity is created. The other properties associated with the Arrival Logic can be left at their defaults for now. With these parameters, entities are created recursively for the duration of the simulation run.

The default object name (Source1, for the first source object), can be changed by either double-clicking on the name tag below the object with the object selected, or through the Name property in the General properties section. Or, like most items in Simio, you can rename by using the F2 key. Note that the General section also includes a Description property for the object, which can be quite useful for model documentation. You should get into the habit of including a meaningful description for each model object because whatever you enter there will be displayed in a tool tip popup note when you hover the mouse over that object.

In order to complete the queueing logic for our model, we need to set up the service process for the Server object. The Processing Time property of the Server module is used to specify the processing times for entities. This property should be set to Random.Exponential(1) with the Units property being Minutes. Make sure that you adjust the Processing Time property rather than the Process Type property (this should remain at its default value of Specific Time (the other options for processing type will be discussed in Section 10.4). The final step for our initial model is to tell Simio to run the model for 10 hours. To do this, click on the Run ribbon/tab, then in the Ending Type pull-down, select the Run Length option and enter 10 Hours. Before running our initial model, we’ll set the running speed for the model.

The Speed Factor is used to control the speed of the interactive execution of the model explicitly. Changing the Speed Factor to 50 (just type it into the Speed Factor field in the Run ribbon) will speed up the run to a speed that’s more visually appealing for this particular model. The optimal Speed Factor for an interactive run will depend on the model and object parameters and the individual preferences, as well as the speed of your computer, so you should definitely experiment with the Speed Factor for each model (technically, the Speed Factor is the amount of simulation time, in tenths of a second, between each animation frame).

At this point, we can actually run the model by clicking on the Run icon in the upper left of the ribbon. The model is now running in interactive mode. As the model runs, the simulated time is displayed in the footer section of the application, along with the percentage complete. Using the default Speed Factor, simulation time will advance fairly slowly, but this can be changed as the model runs. When the simulation time reaches 10 (the run length that we set), the model run will automatically pause.

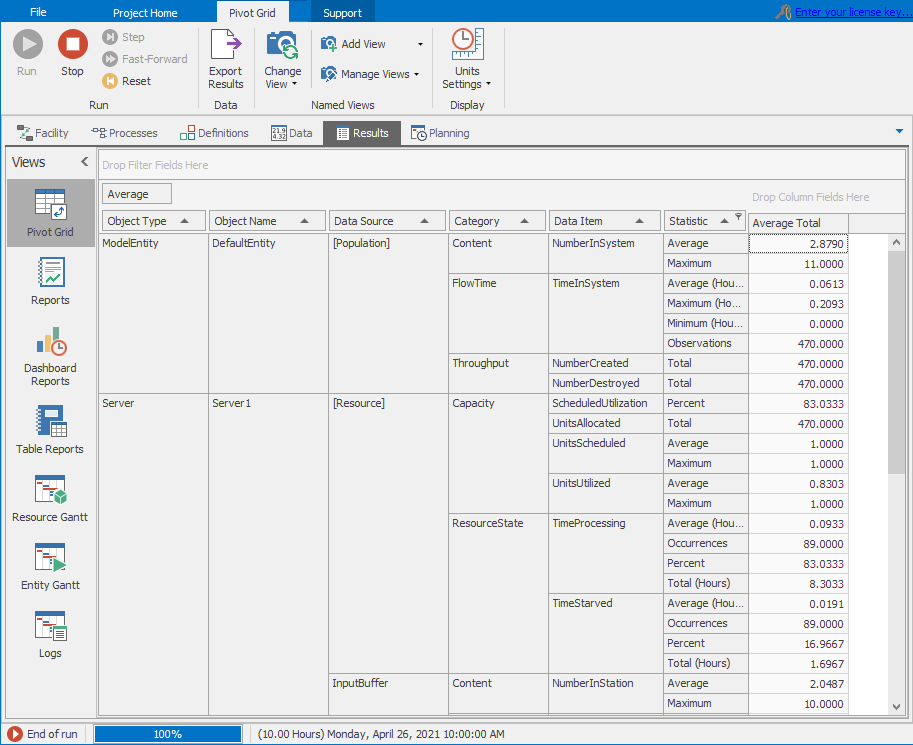

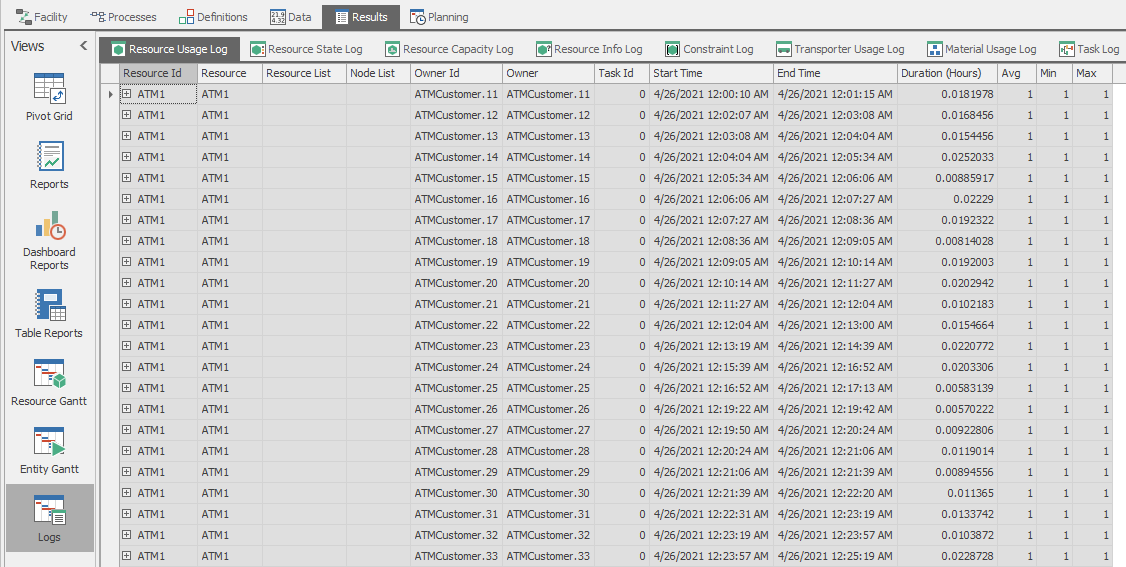

In Interactive Mode, the model results can be viewed at any time by stopping or pausing the model and clicking on the Results tab on the tab bar. Run the model until it reaches 10 hours and view the current results. Simio provides several different ways to view the basic model results. Pivot Grid, and Reports (the top two options are on the left panel — click on the corresponding icon to switch between the views) are the most common. Figure 4.9 shows the Pivot Grid for Model 4-1 paused at time 10 hours.

Figure 4.9: Pivot Grid report for the interactive run of Model 4-1.

Note that Simio uses an agile development process with frequent minor updates, and occasional major updates. It’s thus possible that the output values you get when you run our examples interactively may not always exactly match the output numbers we’re showing here, which we got as we wrote the book. This could, as noted at the end of Section 1.4, be due to small variations across releases in low-level behavior, such as the order in which simultaneous events are processed. Regardless of the reason for these differences, their existence just emphasizes the need to do proper statistical design and analysis of simulation experiments, and not just run it once to get “the answer,” a point that we’ll make repeatedly throughout this book. The Pivot Grid format is extremely flexible and provides a very quick method to find specific results. If you’re not accustomed to this type of report, it can look a bit overwhelming at first, but you’ll quickly learn to appreciate it as you begin to work with it. The Pivot Grid results can also be easily exported to a CSV (comma-separated values) text file, which can be imported into Excel and other applications. Each row in the default Pivot Grid includes an output value based on:

Object Type

Object Name

Data Source

Category

Data Item

Statistic

So, in Figure 4.9, the Average (Statistic) value for the TimeInSystem (Data Item) of the DefaultEntity (Object Name) of the ModelEntity type (Object Type) is \(0.0613\) hours (0.0613 hours \(\times\) 60 minutes/hour = 3.6780 minutes. Note that units for the Pivot Grid times, lengths, and rates can be set using the Time Units, Length Units, and Rate Units items in the Pivot Grid ribbon; if you switch to Minutes the Average TimeInSystem is 3.6753, so our hand-calculated value of 3.6780 minutes has a little round-off error in it. Further, the TimeInSystem data item belongs to the FlowTime Category and since the value is based on entities (dynamic objects), the Data Source is the set of Dynamic Objects.

If you’re looking at this on your computer (as you should be!), scrolling through the Pivot Grid reveals a lot of output performance measures even from a small model like this. For instance, just three rows below the Average TimeInSystem of 0.0613 hours, we see under the Throughput Category that a total of 470 entities were created (i.e., entered the model through the Source object), and in the next row that 470 entities were destroyed (i.e., exited the model through the Sink object). Though not always true, in this particular run of this model all of the 470 entities that arrived also exited during the 10 hours, so that at the end of the simulation there were no entities present. You can confirm this by looking at the animation when it’s paused at the 10-hour end time. (Change the run time to, say, 9 hours, and then 11 hours, to see that things don’t always end up this way, both by looking at the final animation as well as the NumberCreated and NumberDestroyed in the Throughput Category of the Pivot Grid). So in our 10-hour run, the output value of 0.0613 hours for average time in system is just the simple average of these 470 entities’ individual times in system.

While you’re playing around with the simulation run length, try changing it to 8 minutes and compare some of the Pivot Grid results with what we got from the manual simulation in Section 3.3.1 given in Figure 3.9. Now we can confess that those “magical” interarrival and service times for that manual simulation were generated in this Simio run, and we recorded them via the Model Trace capability.

The Pivot Grid supports three basic types of data manipulation:

Grouping: Dragging column headings to different relative locations will change the grouping of the data.

Sorting: Clicking on an individual column heading will cause the data to be sorted based on that column.

Filtering: Hovering the mouse over the upper right corner of a column heading will expose a funnel-shaped icon. Clicking on this icon will bring up a dialog that supports data filtering. If a filter is applied to any column, the funnel icon is displayed (no mouse hover required). Filtering the data allows you quickly to view the specific data in which you’re interested regardless of the amount of data included in the output.

Pivot Grids also allow the user to store multiple views of the filtered, sorted, and grouped Pivot Grids. Views can be quite useful if you are monitoring a specific set of performance metrics. The Simio documentation section on Pivot Grids includes much more detail about how to use these specific capabilities. The Pivot Grid format is extremely useful for finding information when the output includes many rows.

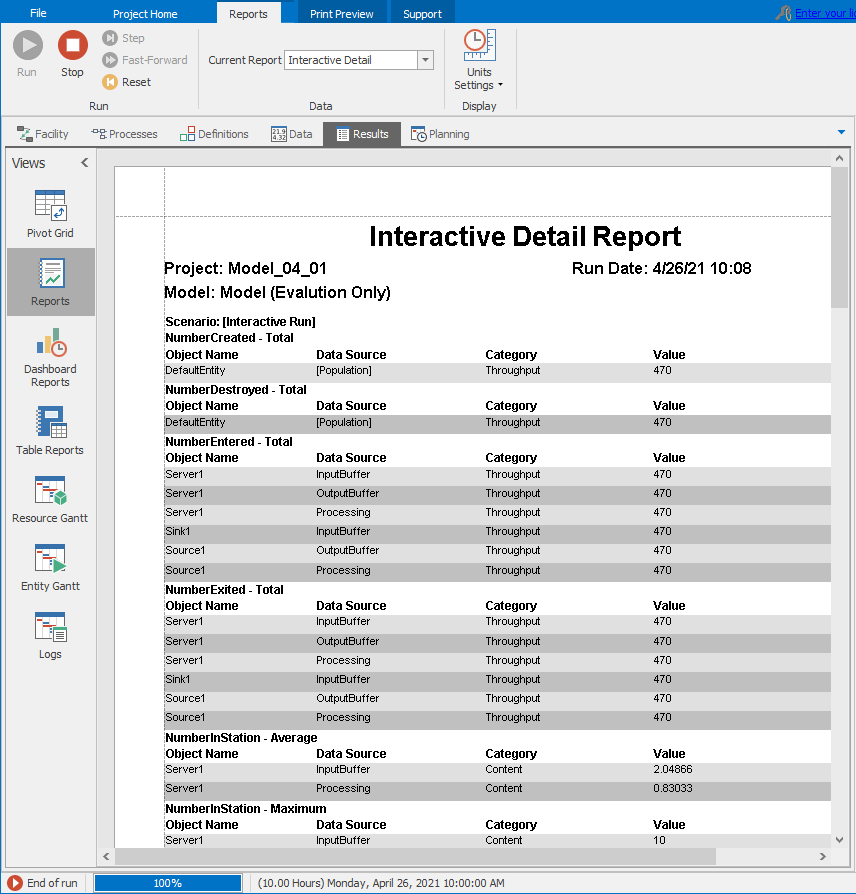

The Reports format gives the interactive run results in a formatted, detailed report format, suitable for printing, exporting to other file formats, or emailing (the formatting, printing, and exporting options are available from the Print Preview tab on the ribbon). Figure 4.10 shows the Reports format with the Print Preview tab open on the ribbon. Scrolling down to the TimeInSystem - Average (Hours) heading on the left will show a Value of 0.06126, the same (up to roundoff) as we saw for this output performance measure in the Pivot Grid in Figure 4.9.

Figure 4.10: Standard report view for Model 4-1.

4.2.2 Initial Experimentation and Analysis

Now that we have our first Simio model completed, we’ll do some initial, informal experimenting and analysis with it to understand the queueing system it models. As we mentioned earlier, the long-run, steady-state performance of our system can be determined analytically using queueing analysis (see Chapter 2 for details). Note that for any but the simplest models, this type of exact analysis will not be possible (this is why we use simulation, in fact). Table 4.2 gives the steady-state queueing results and the simulation results taken from the Pivot Table in Figure 4.9.

| Metric | Queueing | Model |

|---|---|---|

| Utilization (\(\rho\)) | \(0.800\) | \(0.830\) |

| Number in system (\(L\)) | \(4.000\) | \(2.879\) |

| Number in queue (\(L_q\)) | \(3.200\) | \(2.049\) |

| Time in system (\(W\)) | \(0.083\) | \(0.061\) |

| Time in queue (\(W_q\)) | \(0.067\) | \(0.044\) |

You’ll immediately notice that the numbers in the Queueing column are not equal to the numbers in the Model column, as we might expect. Before discussing the possible reasons for the differences, we first need to discuss one more important and sometimes-concerning issue. If you return to the Facility Window (click on the Facility tab just below the ribbon), reset the model (click on the Reset icon in the Run ribbon), re-run the model, allow it to run until it pauses at time 10 hours, and view the Pivot Grid, you’ll notice that the results are identical to those from the previous run (displayed in Figure 4.9). If you repeat the process again and again, you’ll always get the same output values. To most people new to simulation, and as mentioned in Section 3.1.3, this seems a bit odd given that we’re supposed to be using random values for the entity interarrival and service times in the model. This illustrates the following critical points about computer simulation:

The random numbers used are not truly random in the sense of being unpredictable, as mentioned in Section 3.1.3 and discussed in Section 6.3 — instead they are pseudo-random, which, in our context, means that the precise sequence of generated numbers is deterministic (among other things).

Through the random-variate-generation process discussed in Section 6.4, some simulation software can control the pseudo-random number generation and we can exploit this control to our advantage.

The concept that the “supposedly random numbers” are actually predictable can initially cause great angst for new simulationists (that’s what you’re now becoming). However, for simulation, this predictability is a good thing. Not only does it make grading simulation homework easier (important to the authors), but (more seriously) it’s also useful during model debugging. For example, when you make a change in the model that should have a predictable effect on the simulation output, it’s very convenient to be able to use the same “random” inputs for the same purposes in the simulation, so that any changes (or lack thereof) in output can be directly attributable to the model changes, rather than to different random numbers. As you get further into modeling, you’ll find yourself spending significant time debugging your models so this behavior will prove useful to you (see Section 4.9 for detailed coverage of the debugging process and Simio’s debugging tools). In addition, this predictability can be used to reduce the required simulation run time through a variety of techniques called variance reduction, which are discussed in general simulation texts (such as (Banks et al. 2005) or (Law 2015)). Simio’s default behavior is to use the same sequence of random variates (draws or observations on model-driving inputs like interarrival and service times) each time a model is run. As a result, running, resetting, and re-running a model will yield identical results unless the model is explicitly coded to behave otherwise.

Now we can return to the question of why our initial simulation results are not equal to our queueing results in Table 4.2. There are three possible explanations for this mismatch:

Our Simio model is wrong, i.e., we have an error somewhere in the model itself.

Our expectation is wrong, i.e., our assumption that the simulation results should match the queueing results is wrong.

Sampling error, i.e., the simulation model results match the expectation in a probabilistic sense, but we either haven’t run the model long enough, or for enough replications (separate independent runs starting from the same state but using separate random numbers), or are interpreting the results incorrectly.

In fact, if the results are not equal when comparing simulation results to our expectation, it’s always one or more of these possibilities, regardless of the model. In our case, we’ll see that our expectation is wrong, and that we have not run the model long enough. Remember, the queueing-theory results are for long-run steady-state, i.e., after the system/model has run for an essentially infinite amount of time. But we ran for only 10 hours, which for this model is evidently not sufficiently close to infinity. Nor have we made enough replications (items 2 and 3 from above). Developing expectations, comparing the expectations to the simulation-model results, and iterating until these converge is a very important component of model verification and validation (we’ll return to this topic in Section 4.2.5).

4.2.3 Replications and Statistical Analysis of Output

As just suggested, a replication is a run of the model with a fixed set of starting and ending conditions using a specific and separate, non-overlapping sequence of input random numbers and random variates (the exponentially distributed interarrival and service times in our case). For the time being, assume that the starting and ending conditions are dictated by the starting and ending simulation time (although as we’ll see later, there are other possible kinds of starting and ending conditions). So, starting our model empty and idle, and running it for 10 hours, constitutes a replication. Resetting and re-running the model constitutes running the same replication again, using the same input random numbers and thus random variates, so obviously yielding the same results (as demonstrated above). In order to run a different replication, we need a different, separate, non-overlapping set of input random numbers and random variates. Fortunately, Simio handles this process for us transparently, but we can’t run multiple replications in Interactive mode. Instead, we have to create and run a Simio Experiment.

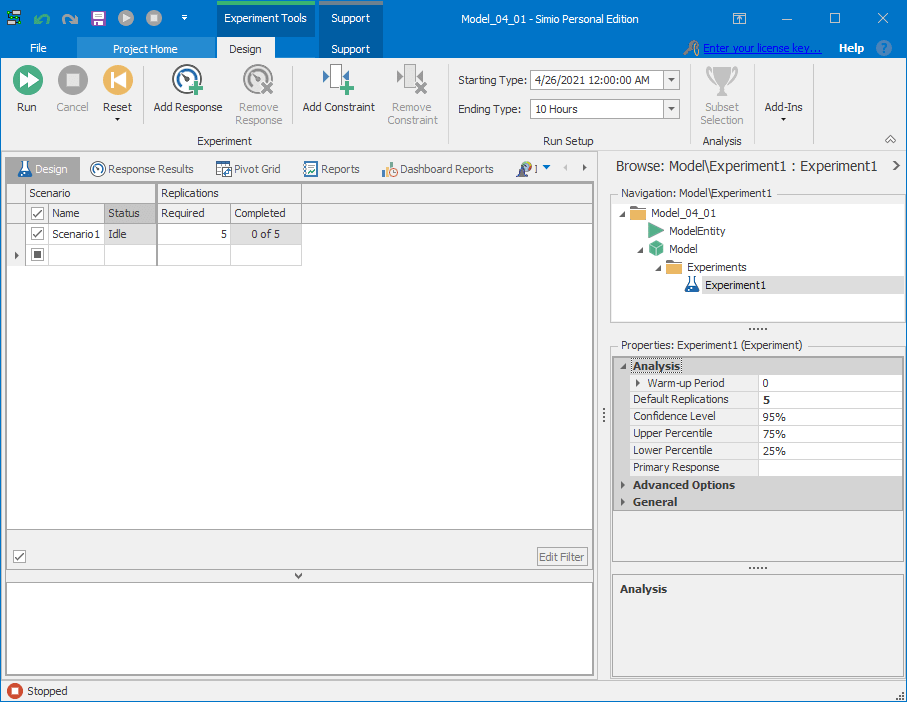

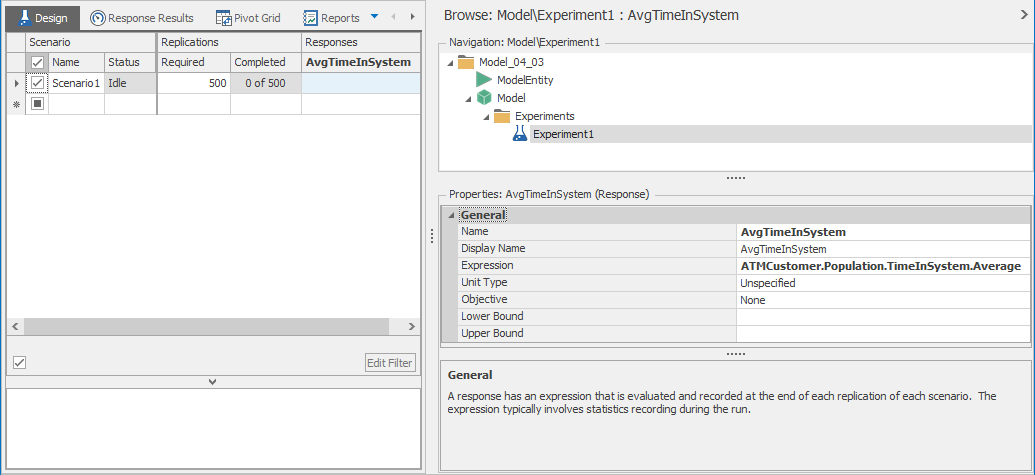

Simio Experiments allow us to run our model for a user-specified number of replications, where Simio guarantees that the generated random variates are such that the replications are statistically independent from one another, since the underlying random numbers do not overlap from one replication to the next. This guarantee of independence is critical for the required statistical analysis we’ll do. To set up an experiment, go to the Project Home ribbon and click on the New Experiment icon. Simio will create a new experiment and switch to the Experiment Design view as shown in Figure 4.11 after we changed both Replications Required near the top, and Default Replications on the right, to 5 from their default values of 10.

Figure 4.11: Initial experiment design for running five replications of a model.

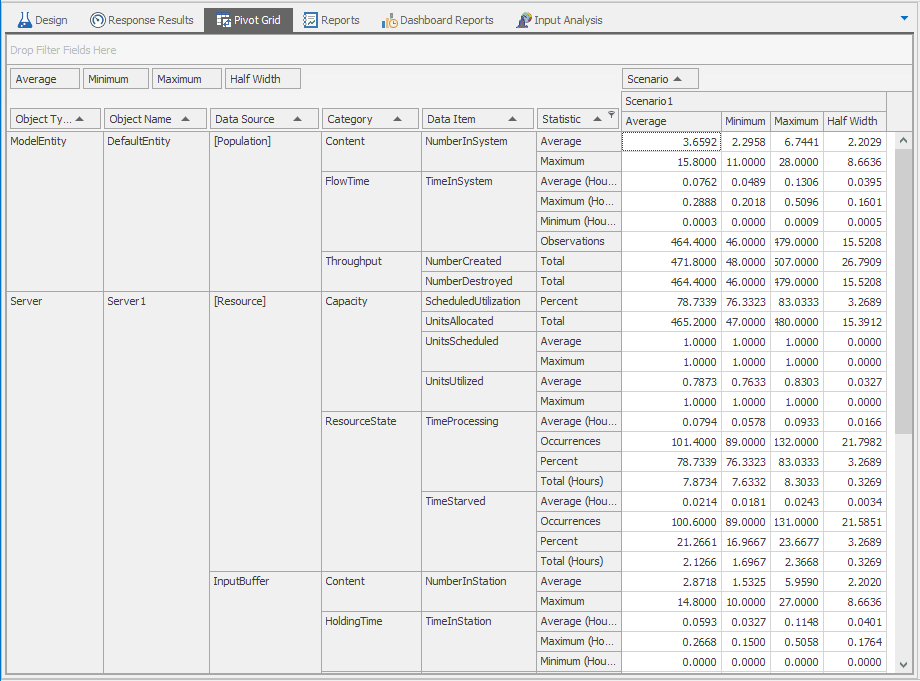

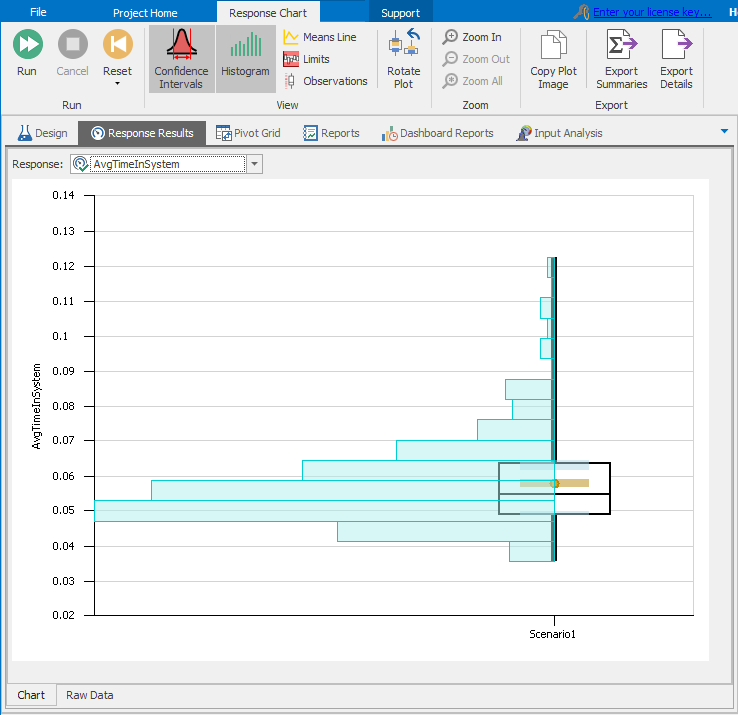

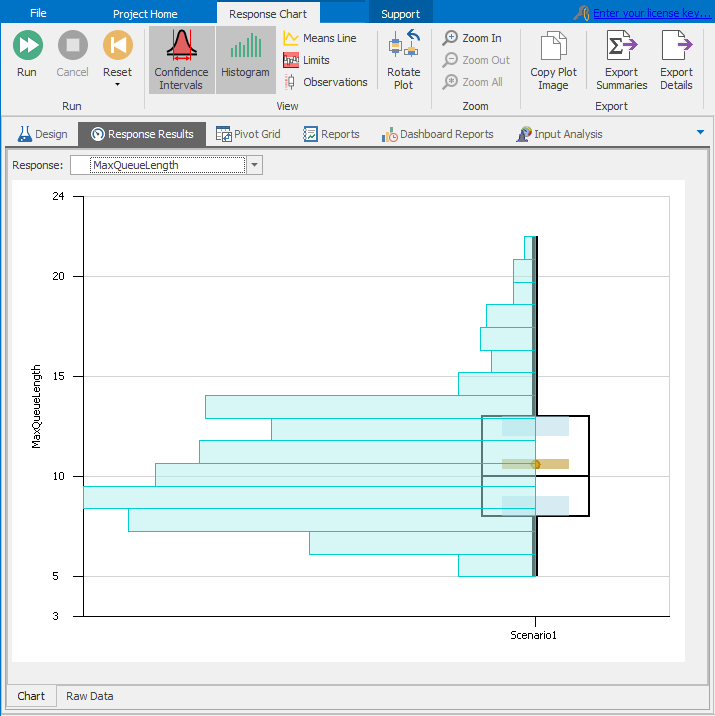

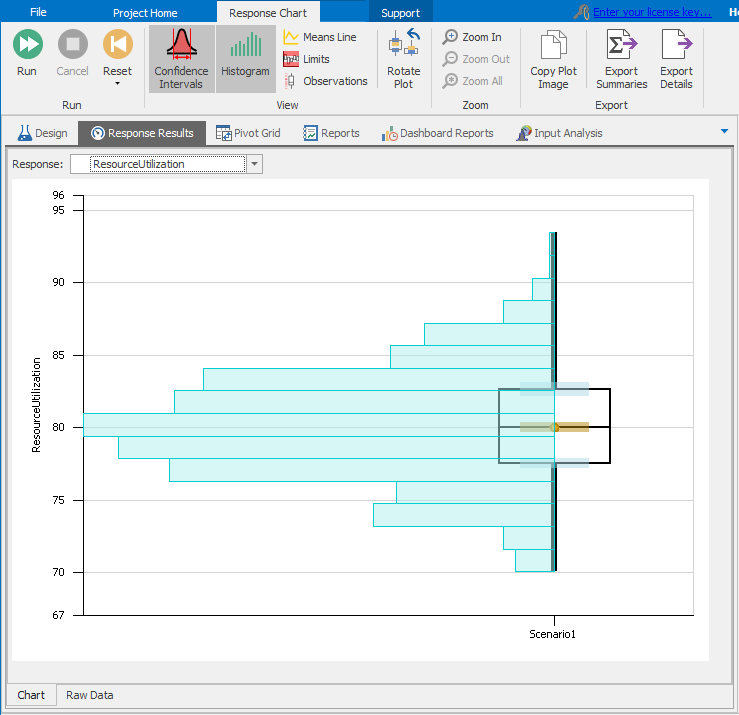

To run the experiment, select the row corresponding to Scenario1 (the default name) and click the Run icon (the one with two white right arrows in it in the Experiment window, not the one with one right arrow in it in the Model window). After Simio runs the five replications, select the Pivot Grid report (shown in Figure 4.12).

Figure 4.12: Experiment Pivot Grid for the five replications of the model.

Compared to the Pivot Grid we saw while running in Interactive Mode (Figure 4.9), we see the additional results columns for Minimum, Maximum, and Half Width (of 95% confidence intervals on the expected value, with the Confidence Level being editable in the Experiment Design Properties), reflecting the fact that we now have five independent observations of each output statistic.

To understand what these cross-replication output statistics are, focus on the entity TimeInSystem values in rows 3-5. For example:

The 0.0762 for the Average of Average (Hours) TimeInSystem (yes, we meant to say “Average” twice there) is the average of five numbers, each of which is a within-replication average time in system (and the first of those five numbers is 0.0613 from the single-replication Pivot Grid in Figure 4.9. The 95% confidence interval \(0.0762 \pm 0.0395\), or \([0.0367, 0.1157]\) (in hours), contains, with 95% confidence , the expected within-replication average time in system, which you can think of as the result of making an infinite number of replications (not just five) of this model, each of duration 10 hours, and averaging all those within-replication average times in system. Another interpretation of what this confidence interval covers is the expected value of the probability distribution of the simulation output random variable representing the within-replication average time in system. (More discussion of output confidence intervals appears below in the discussion of Table 4.4.)

Still in the Average (Hours) row, 0.1306 is the maximum of these five average within-replication times in system, instead of their average. In other words, across the five replications, the largest of the five Average TimeInSystem values was 0.1306, so it is the maximum average.

Average maximum, anyone? In the next row down for Maximum (Hours), the 0.2888 on the left is the average of five numbers, each of which is the maximum individual-entity time in system within that replication. And the 95% confidence interval \(0.2888 \pm 0.1601\) is trying to cover the expected maximum time in system, i.e., the maximum time in system averaged over an infinite number of replications rather than just five.

Maybe more meaningful as a really bad worst-case time in system, though, would be the 0.5096 hour, being the maximum of the five within-replication maximum times in system.

Table 4.3 gives the queueing metrics for each of the five replications of the model, as well a the sample mean (Avg) and sample standard deviation (StDev) across the five replications for each metric. To access these individual-replication output values, click on the Export Details icon in the Pivot Grid ribbon; click Export Summaries to get cross-replication results like means and standard deviations, as shown in the Pivot Grid itself. The exported data file is in CSV format, which can be read by a variety of applications, such as Excel. The first thing to notice in this table is that the values can vary significantly between replications (\(L\) and \(L_q\), in particular). This variation is specifically why we cannot draw inferences from the results of a single replication.

| Metric being estimated | 1 | 2 | 3 | 4 | 5 | Avg | StDev |

|---|---|---|---|---|---|---|---|

| Utilization (\(\rho\)) | \(0.830\) | \(0.763\) | \(0.789\) | \(0.769\) | \(0.785\) | \(0.787\) | \(0.026\) |

| Number in system (\(L\)) | \(2.879\) | \(2.296\) | \(3.477\) | \(2.900\) | \(6.744\) | \(3.659\) | \(1.774\) |

| Number in queue (\(L_q\)) | \(2.049\) | \(1.532\) | \(2.688\) | \(2.131\) | \(5.959\) | \(2.872\) | \(1.774\) |

| Time in system (\(W\)) | \(0.061\) | \(0.049\) | \(0.075\) | \(0.065\) | \(0.131\) | \(0.076\) | \(0.032\) |

| Time in queue (\(W_q\)) | \(0.044\) | \(0.033\) | \(0.058\) | \(0.048\) | \(0.115\) | \(0.059\) | \(0.032\) |

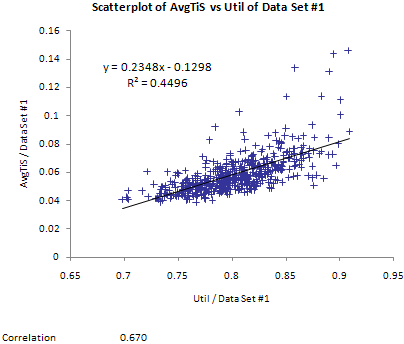

Since our model inputs (entity interarrival and service times) are random, the simulation-output performance metrics (simulation-based estimates of \(\rho\), \(L\), \(L_q\), \(W\), and \(W_q\), which we could respectively denote as \(\widehat{\rho}\), \(\widehat{L}\), \(\widehat{L_q}\), \(\widehat{W}\), and \(\widehat{W_q}\)) are random variables . The queueing analysis gives us the exact steady-state values of \(\rho\), \(L\), \(L_q\), \(W\), and \(W_q\). Based on how we run replications (the same model, but with separate independent input random variates), each replication generates one observation on each of \(\widehat{\rho}\), \(\widehat{L}\), \(\widehat{L_q}\), \(\widehat{W}\), and \(\widehat{W_q}\). In statistical terms, running \(n\) replications yields \(n\) independent, identically distributed (IID) observations of each random variable. This allows us to estimate the mean values of the random variables using the sample averages across replications. So, the values in the Average column from Table 4.3 are estimates of the corresponding random-variable expected values. What we don’t know from this table is how good our estimates are. We do know, however, that as we increase the number of replications, our estimates get better, since the sample mean is a consistent estimator (its own variance decreases with \(n\)), and from the strong law of large numbers (as \(n \rightarrow \infty\), the sample mean across replications \(\rightarrow\) the expected value of the respective random variable, with probability 1).

Table 4.4 compares the results from running five replications with those from running 50 replications. Since we ran more replications of our model, we expect the estimates to be better, but averages still don’t give us any specific information about the quality (or precision) of these estimates. What we need is an interval estimate that will give us insight about the sampling error (the averages are merely point estimates). The \(h\) columns give such an interval estimate. These columns give the half-widths of 95% confidence intervals on the means constructed from the usual normal-distribution approach (using the sample standard deviation and student’s \(t\) distribution with \(n-1\) degrees of freedom, as given in any beginning statistics text). Consider the 95% confidence intervals for \(L\) based on five and 50 replications: \[\begin{align*} 5\ \textrm{replications}: 3.659 \pm 2.203\ \textrm{or}\ [1.456, 5.862] \\ 50\ \textrm{replications}: 3.794 \pm 0.433\ \textrm{or}\ [3.361, 4.227] \end{align*}\]

| Metric being estimated | Avg | \(h\) | Avg | \(h\) |

|---|---|---|---|---|

| Utilization (\(\rho\)) | \(0.787\) | \(0.033\) | \(0.789\) | \(0.014\) |

| Number in system (\(L\)) | \(3.659\) | \(2.203\) | \(3.794\) | \(0.433\) |

| Number in queue (\(L_q\)) | \(2.872\) | \(2.202\) | \(3.004\) | \(0.422\) |

| Time in system (\(W\)) | \(0.076\) | \(0.040\) | \(0.078\) | \(0.008\) |

| Time in queue (\(W_q\)) | \(0.059\) | \(0.040\) | \(0.062\) | \(0.008\) |

Based on five replications, we’re 95% confident that the true mean (expected value or population mean) of \(\widehat{L}\) is between 1.456 and 5.862, while based on 50 replications, we’re 95% confident that the true mean is between 3.361 and 4.227. (Strictly speaking, the interpretation is that 95% of confidence intervals formed in this way, from replicating, will cover the unknown true mean.) So the confidence interval on the mean of an output statistic provides us a measure of the sampling error and, hence, the quality (precision) of our estimate of the true mean of the random variable. By increasing the number of replications (samples), we can make the half-width increasingly small. For example, running 250 replications results in a CI of \([3.788, 4.165]\) — clearly we’re more comfortable with our estimate of the mean based on 250 replications than we are based five replications. In cases where we make independent replications, the confidence-interval half-widths therefore give us guidance as to how many replications we should run if we’re interested in getting a precise estimate of the true mean; due to the \(\sqrt{n}\) in the denominator of the formula for the confidence-interval half width, we need to make about four times as many replications to cut the confidence interval half-width in half, compared to its current size from an initial number of replications, and about 100 times as many replications to make the interval \(1/10\) its current size. Unfortunately, there is no specific rule about “how close is close enough” — i.e., what values of \(h\) are acceptably small for a given simulation model and decision situation. This is a judgment call that must be made by the analyst or client in the context of the project. There is a clear trade-off between computer run time and reducing sampling error. As we mentioned above, we can make \(h\) increasingly small by running enough replications, but the cost is computer run time. When deciding if more replications are warranted, two issues are important:

What’s the cost if I make an incorrect decision due to sampling error?

Do I have time to run more replications?

So, the first answer as to why our simulation results shown in Table 4.2 don’t match the queueing results is that we were using the results from a singe replication of our model. This is akin to rolling a die, observing a 4 (or any other single value) and declaring that value to be the expected value over a large number of rolls. Clearly this would be a poor estimate, regardless of the individual roll. Unfortunately, using results from a single replication is quite common for new simulationists, despite the significant risk. Our general approach going forward will be to run multiple replications and to use the sample averages as estimates of the means of the output statistics, and to use the 95% confidence-interval half-widths to help determine the appropriate number of replications if we’re interested in estimating the true mean. So, instead of simply using the averages (point estimates), we’ll also use the confidence intervals (interval estimates) when analyzing results. The standard Simio Pivot Grid report for experiments (see Figure 4.12) automatically supports this approach by providing the sample average and 95% confidence-interval half-widths for all output statistics.

The second reason for the mismatch between our expectations and the model results is a bit more subtle and involves the need for a warm up period for our model. We will discuss that in the next section.

4.2.4 Steady-State vs. Terminating Simulations

Generally, when we start running a simulation model that includes queueing or queueing-network components, the model starts in a state called empty and idle, meaning that there are no entities in the system and all servers are idle. Consider our simple single-server queueing model. The first entity that arrives will never have to wait for the server. Similarly, the second arriving entity will likely spend less time in the queue (on average) than the 100th arriving entity (since the only possible entity in front of the second entity will be the first entity). Depending on the characteristics of the system being modeled (the expected server utilization, in our case), the distribution and expected value of queue times for the third, fourth, fifth, etc. entities can be significantly different from the distribution and expected value of queue times at steady state, i.e., after a long time that is sufficient for the effects of the empty-and-idle initial conditions to have effectively worn off. The time between the start of the run and the point at which the model is determined to have reached steady state (another one of those judgment calls) is called the initial transient period, which we’ll now discuss.

The basic queueing analysis that we used to get the results in Table 4.2 (see Chapter 2) provides exact expected-value results for systems at steady state. As discussed above, most simulation models involving queueing networks go through an initial-transient period before effectively reaching steady state. Recording model statistics during the initial-transient period and then using these observations in the replication summary statistics tabulation can lead to startup bias, i.e., \(E(\widehat{L})\) may not be equal to \(L\). As an example, we ran four experiments where we set the run length for our model to be 2, 5, 10, 20, and 30 hours and ran 500 replications each. The resulting estimates of \(L\) (along with the 95% confidence intervals, of course) were:

\[\begin{align*} 2\ \textrm{hours}: 3.232 \pm 0.168\ \textrm{or}\ [3.064, 3.400] \\ 5\ \textrm{hours}: 3.622 \pm 0.170\ \textrm{or}\ [3.622, 3.962] \\ 10\ \textrm{hours}: 3.864 \pm 0.130\ \textrm{or}\ [3.734, 3.994] \\ 20\ \textrm{hours}: 3.888 \pm 0.096\ \textrm{or}\ [3.792, 3.984] \\ 30\ \textrm{hours}: 3.926 \pm 0.080\ \textrm{or}\ [3.846, 4.006] \end{align*}\]

For the 2, 5, 10, and 20 hour runs, it seems fairly certain that the estimates are still biased downwards with respect to steady state (the steady-state value is \(L=4.000\)). At 30 hours, the mean is still a little low, but the confidence interval covers 4.000, so we’re not sure. Running more replications would likely reduce the width of the confidence interval and \(4.000\) may be outside it so that we’d conclude that the bias is still significant with a 30-hour run, but we’re still not sure. It’s also possible that running additional replications wouldn’t provide the evidence that the startup bias is significant — such is the nature of statistical sampling. Luckily, unlike many scientific and sociological experiments, we’re in total control of the replications and run length and can experiment until we’re satisfied (or until we run out of either computer time or human patience). Before continuing we must point out that you can’t “replicate away” startup bias. The transient period is a characteristic of the system and isn’t an artifact of randomness and the resulting sampling error.

Instead of running the model long enough to wash out the startup bias through sheer arithmetic within each run, we can use a warm-up period. Here, the model run period is divided so that statistics are not collected during the initial (warm-up) period, though the model is running as usual during this period. After the warm-up period, statistics are collected as usual. The idea is that the model will be in a state close to steady-state when we start recording statistics if we’ve chosen the warm-up period appropriately, something that may not be especially easy in practice. So, for our simple model, the expected number of entities in the queue when the first entity arrives after the warm-up period would be \(3.2\) (\(L_q=3.2\) at steady state). As an example, we ran three additional experiments where we set the run lengths and warm-up periods to be \((20, 10)\), \((30, 10)\), and \((30, 20)\), respectively (in Simio, this is done by setting the Warm-up Period property for the Experiment to the length of the desired warm-up period). The results when estimating \(L = 4.000\) are:

\[\begin{align*} \textrm{(Run length, warm-up)} = (20, 10): 4.033 \pm 0.155\ \textrm{or}\ [3.978, 4.188] \\ \textrm{(Run length, warm-up)} = (30, 10): 4.052 \pm 0.103\ \textrm{or}\ [3.949, 4.155] \\ \textrm{(Run length, warm-up)} = (30, 20): 3.992 \pm 0.120\ \textrm{or}\ [3.872, 4.112] \end{align*}\]

It seems that the warm-up period has helped reduce or eliminate the startup bias in all cases and we have not increased the overall run time beyond 30 hours. So, we have improved our estimates without increasing the computational requirements by using the warm-up period. At this point, the natural question is “How long should the warm-up period be?” In general, it’s not at all easy to determine even approximately when a model reaches steady state. One heuristic but direct approach is to insert dynamic animated Status Plots in the Simio model’s Facility Window (in the Model’s Facility Window, select the Animation ribbon under Facility Tools — see Chapter 8 for animation details) and just make a judgment about when they appear to stop trending systematically; however, these can be quite noisy” (i.e., variable) since they depict only one replication at a time during the animation. We’ll simply observe the following about specifying warm-up periods:

If the warm-up period is too short, the results will still have startup bias (this is potentially bad); and

If the warm-up period is too long, our sampling error will be higher than necessary (as we increase the warm-up period length, we decrease the amount of data that we actually record).

As a result, the “safest” approach is to make the warm-up period long and increase the overall run length and number of replications in order to achieve acceptable levels of sampling error (measured by the half-widths of the confidence intervals). Using this method we may expend a bit more computer time than is absolutely necessary, but computer time is cheap these days (and bias is insidiously dangerous since in practice you can’t measure it)!

Of course, the discussion of warm-up in the previous paragraphs assumes that you actually want steady-state values; but maybe you don’t. It’s certainly possible (and common) that you’re instead interested in the “short-run” system behavior during the transient period; for example, same-day ticket sales for a sporting event open up (with an empty and idle system) and stop at certain pre-determined times, so there is no steady state at all of any relevance. In these cases, often called terminating simulations, we simply ignore the warm-up period in the experimentation (i.e., default it to 0), and what the simulation produces will be an unbiased view of the system’s behavior during the time period of interest, and relative to the initial conditions in the model.

The choice of whether the steady-state goal or the terminating goal is appropriate is usually a matter of what your study’s intent is, rather than a matter of what the model structure may be. We will say, though, that terminating simulations are much easier to set up, run, and analyze, since the starting and stopping rules for each replication are just part of the model itself, and not up to analysts’ judgment; the only real issue is how many replications you need to make in order to achieve acceptable statistical precision in your results.

4.2.5 Model Verification

Now that we’ve addressed the replications issue, and possible warm-up period if we want to estimate steady-state behavior, we’ll revisit our original comparison of the queueing analysis results to our updated simulation model results (500 replications of the model with a 30-hour run length and 20-hour warm-up period). Table 4.5 gives both sets of results. As compared to the results shown in Table 4.2, we’re much more confident that our model is “right.” In other words, we have fairly strong evidence that our model is verified (i.e., that it behaves as we expect it to). Note that it’s not possible to provably verify a model. Instead, we can only collect evidence until we either find errors or are convinced that the model is correct.

| Metric being estimated | Queueing | Simulation |

|---|---|---|

| Utilization (\(\rho\)) | \(0.800\) | \(0.800 \pm 0.004\) |

| Number in system (\(L\)) | \(4.000\) | \(4.001 \pm 0.133\) |

| Number in queue (\(L_q\)) | \(3.200\) | \(3.201 \pm 0.130\) |

| Time in system (\(W\)) | \(0.083\) | \(0.083 \pm 0.003\) |

| Time in queue (\(W_q\)) | \(0.067\) | \(0.066 \pm 0.003\) |

Recapping the process that we went through:

We developed a set of expectations about our model results (the queueing analysis).

We developed and ran the model and compared the model results to our expectations (Table 4.2).

Since the results didn’t match, we considered the three possible explanations:

Our Simio model is wrong (i.e., we have an error somewhere in the model itself) — we skipped over this one.

Our expectation is wrong (i.e., our assumption that the simulation results should match the queueing results is wrong) — we found that we needed to warm up the model to get it close to steady state in order effectively to eliminate the startup bias (i.e., our expectation that our analysis including the transient period should match the steady-state results was wrong). Adding a warm-up period corrected for this.

Sampling error (i.e., the simulation-model results match the expectation in a probabilistic sense, but we either haven’t run the model long enough or are interpreting the results incorrectly) — we found that we needed to replicate the model and increase the run length to account appropriately for the randomness in the model outputs.

We finally settled on a model that we feel is correct.

It’s a good idea to try to follow this basic verification process for all simulation projects. Although we’ll generally not be able to compute the exact results that we’re looking for (otherwise, why would we need simulation?), we can always develop some expectations, even if they’re based on an abstract version of the system being modeled. We can then use these expectations and the process outlined above to converge to a model (and set of expectations) about which we’re highly confident.

Now that we’ve covered the basics of model verification and experimentation in Simio, we’ll switch gears and discuss some additional Simio modeling concepts for the remainder of this chapter. However, we’ll definitely revisit these basic issues throughout the book.

4.3 Model 4-2: First Model Using Processes

Although modeling strictly with high-level Simio objects (such as those from the Standard Library) is fast, intuitive, and (almost) easy for most people, there are often situations where you’ll want to use the lower-level Simio Processes. You may want to construct your model or augment existing Simio objects, either to do more detailed or specialized modeling not accommodated with objects, or improve execution speed if that’s a problem. Using Simio Processes requires a fairly detailed understanding of Simio and discrete-event simulation methodology, in general. This section will only demonstrate a simple, but fundamental Simio Process model of our example single-server queueing system. In the following chapters, we’ll go into much more detail about Simio Processes where called for by the modeling situation.

In order to model systems that include finite-capacity resources for which entities compete for service (such as the server in our simple queueing system), Simio uses a Seize-Delay-Release model. This is a standard discrete-event-simulation approach and many other simulation tools use the same or a similar model. Complete understanding of this basic model is essential in order to use Simio Processes effectively. The model works as follows:

Define a resource with capacity \(c\). This means that the resource has up to \(c\) arbitrary units of capacity that can be simultaneously allocated to one or more entities at any point in simulated time.

When an entity requires service from the resource, the entity seizes some number \(s\) of units of capacity from the resource.

At that point, if the resource has \(s\) units of capacity not currently allocated to other entities, \(s\) units of capacity are immediately allocated to the entity and the entity begins a delay representing the service time, during which the \(s\) units remain allocated to the entity. Otherwise, the entity is automatically placed in a queue where it waits until the required capacity is available.

When an entity’s service-time delay is complete, the entity releases the \(s\) units of capacity of the resource and continues to the next step in its process. If there are entities waiting in the resource queue and the resource’s available capacity (including the units freed by the just-departed entity) is sufficient for one of the waiting entities, the first such entity is removed from the queue, the required units of capacity are immediately allocated to that entity, and that entity begins its delay.

From the modeling perspective, each entity simply goes through the Seize-Delay-Release logic and the simulation tool manages the entity’s queueing and allocation of resource capacity to the entities. In addition, most simulation software, including Simio, automatically records queue, resource, and entity-related statistics as the model runs. Figure 4.13 shows the basic seize-delay-release process. In this figure, the “Interarrival time” is the time between successive entities and the “Processing time” is the time that an entity is delayed for processing. The Number In System tracks the number of entities in the system at any point in simulated time and the marking and recording of the arrival time and time in system tracks the times that all entities spend in the system.

Figure 4.13: Basic process for the seize-delay-release model.

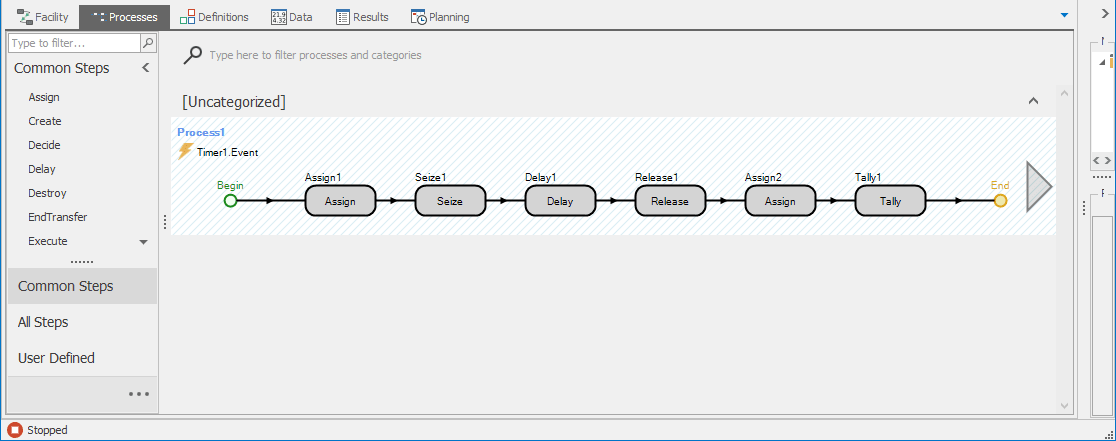

For our single-server queueing model, we simply set \(c=1\) and \(s=1\) (for all entities). So our single-server model is just an implementation of the basic seize-delay-release logic illustrated in Figure 4.13. Creating this model using processes is a little bit more involved than it was using Standard Library Objects, but it’s instructive to go through the model development and see the mechanisms for collecting user-defined statistics. Figure 4.14 shows the completed Simio process.

Figure 4.14: Process view of Model 4-2.

The steps to implement this model in Simio (see ) are as follows:

Open Simio and create a new model.

Create a Resource object in the Facility Window by dragging a

Resourceobject from the Standard Library onto the Facility Window. In the Process Logic section of the object’s properties, verify that the Initial Capacity Type isFixedand that the Capacity is1(these are the defaults). Note the object Name in the General section (the default is Resource1).Make sure that the Model is highlighted in the Navigation Window and switch to the Definitions Window by clicking on the

Definitionstab and choose theStatessection by clicking on the corresponding panel icon on the left. This prepares us to add a state to the model.Create a new discrete (integer) state by clicking on the

Integericon in the States ribbon. Change the default Name property of IntegerState1 for the state toWIP. Discrete States are used to record numerical values. In this case, we’re creating a place to store the current number of entities in the model by creating an Integer Discrete State for the model (the Number In System from Figure 4.13).Switch to the

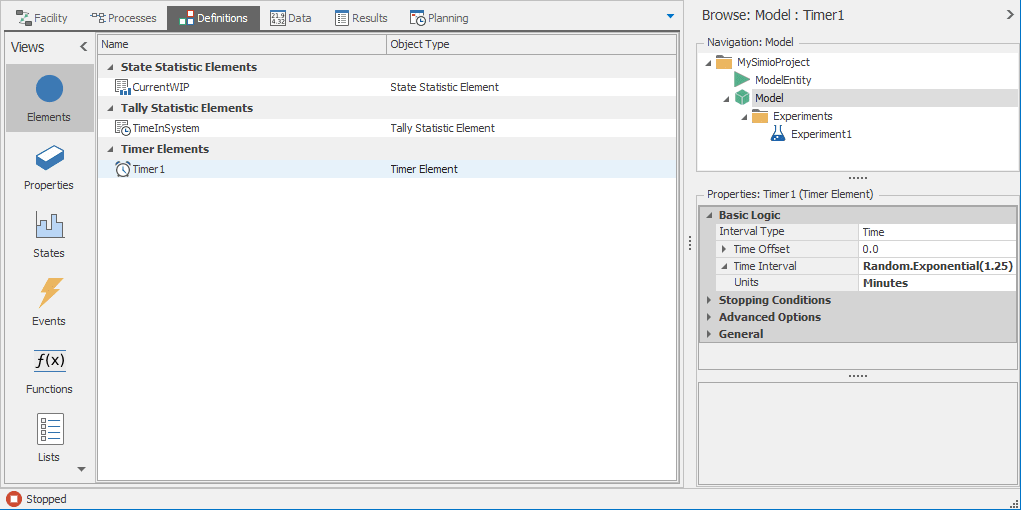

Elementssection by clicking on the panel icon and create a Timer element by clicking on theTimericon in the Elements ribbon (see Figure 4.15). The Timer element will be used to trigger entity arrivals (the loop-back arc in Figure 4.13). In order to have Poisson arrivals at the rate of \(\lambda=48\) entities/hour, or equivalently exponential interarrivals with mean \(1/0.8 = 1.25\) minutes, set the Time Interval property toRandom.Exponential(1.25)and make sure that the Units are set toMinutes.

Figure 4.15: Timer element for the Model 4-2.

Create a State Statistic by clicking on the

State Statisticicon in the Statistics section of the Elements ribbon. Set the State Variable Name property toWIP(the previously defined model Discrete State so it appears on the pull-down there) and set the Name property toCurrentWIP. We’re telling Simio to track the value of the state over time and record a time-dependent statistic on this value.Create a Tally Statistic by clicking on the

Tally Statisticicon in the Statistics section of the Elements ribbon. Set the Name property toTimeInSystemand set the Unit Type property toTime. Tally Statistics are used to record observational (i.e., discrete-time) statistics.Switch to the Process Window by clicking on the

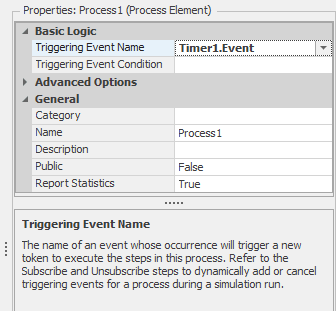

Processestab and create a new Process by clicking on theCreate Processicon in the Process ribbon.Set the Triggering Event property to be the newly created timer event (see Figure 4.16). This tells Simio to execute the process whenever the timer goes off.

Figure 4.16: Setting the triggering event for the process.

Add an Assign step by dragging the

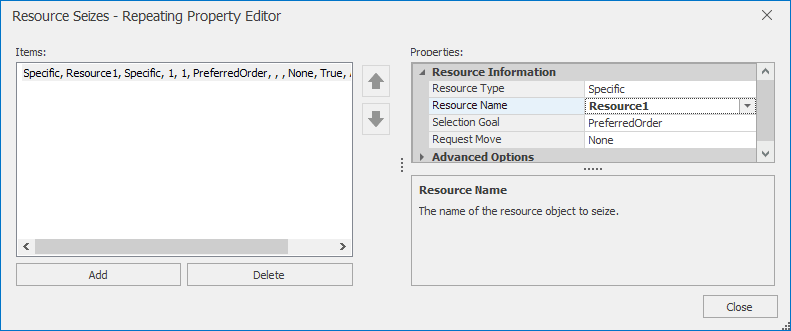

Assignstep from the Common Steps panel to the process, placing the step just to the right of the Begin indicator in the process. Set the State Variable Name property toWIPand the New Value property toWIP + 1, indicating that when the event occurs, we want to increment the value of the state variable to reflect the fact that an entity has arrived to the system (the “Increment” in Figure 4.13).Next, add the Seize step to the process just to the right of the Assign step. To indicate that Resource1 should be seized by the arriving entity, click the

...button on the right and select the Seizes property in the Basic Logic section, clickAdd, and then indicate that the specific resourceResource1should be seized (see Figure 4.17).

Figure 4.17: Setting the Seize properties to indicate that Resource1 should be seized.

Add the

Delaystep immediately after the Seize step and set the Delay Time property toRandom.Exponential(1)minutes to indicate that the entity delays should be exponentially distributed with mean \(1\) minute (equivalent to the original service rate of 60/hour).Add the

Releasestep immediately after the Delay step and set the Releases property toResource1.Add another

Assignstep next to the Release step and set the State Variable Name property toWIPand the New Value property toWIP - 1, indicating that when the entity releases the resource, we want to decrement the value of the state variable to reflect the fact that an entity has left the system.Add a

Tallystep and set the TallyStatisticName property toTimeInSystem(the Tally Statistic was created earlier so is available on the pull-down there), and set the Value property toTimeNow - Token.TimeCreatedto indicate that the recorded value should be the current simulation time minus the time that the current token was created. This time interval represents the time that the current entity spent in the system. The Tally step implements the Record function shown in Figure 4.13. Note that we used the token stateToken.TimeCreatedinstead of marking the arrival time as shown in Figure 4.13.Finally, switch back to the Facility Window and set the run parameters (e.g., set the Ending Type to a

Fixed run length of 1000 hours).

Note that we’ll discuss the details of States, Properties, Tokens, and other components of the Simio Framework in Chapter 5.

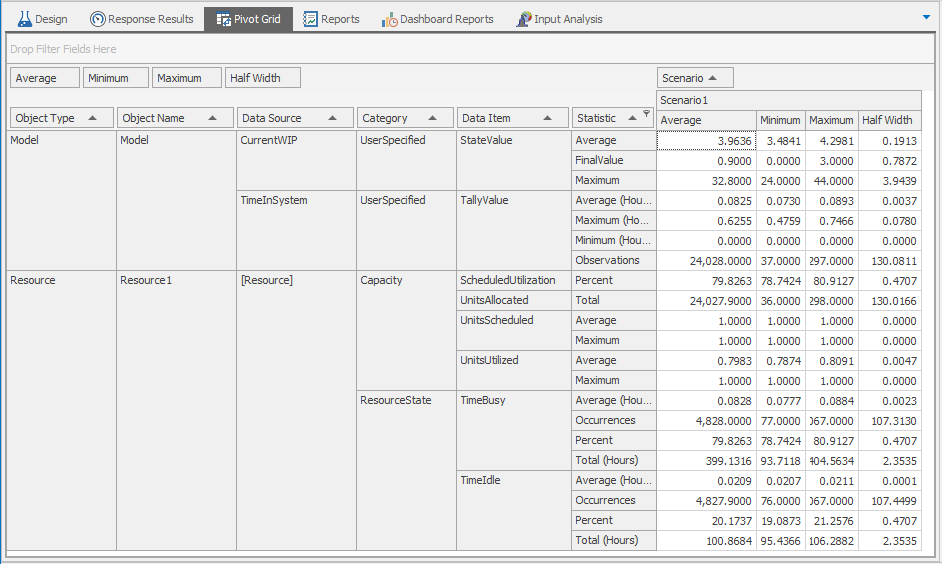

To test the model, create an Experiment by clicking on the New Experiment icon in the Project Home ribbon. Figure 4.18 shows the Pivot Grid results for a run of 10 replications of the model using a 500 hour warm-up period for each replication. Notice that the report includes the UserSpecified category including the CurrentWIP and TimeInSystem statistics. Unlike the ModelEntity statistics NumberInSystem and TimeInSystem that Simio collected automatically in the Standard Library object model from Section 4.2, we explicitly told Simio to collect these statistics in the process model. Understanding user-specified statistics is important, as it’s very likely that you’ll want more than the default statistics as your models become larger and more complex. The CurrentWIP statistic is an example of a time-dependent statistic. Here, we defined a Simio state (step 4), used the process logic to update the value of the state when necessary (step 10 to increment and step 14 to decrement), and told Simio to keep track of the value as it evolves over simulated time and to report the summary statistics (of \(\widehat{L}\), in this case — step 4). The TimeInSystem statistic is an example of an observational or tally statistic. In this case, each arriving entity contributes a single observation (the time that entity spends in the system) and Simio tracks and reports the summary statistics for these values (\(\widehat{W}\), in this case). Step 7 sets up this statistic and step 15 records each observation.

Figure 4.18: Results from Model 4-2.

Another thing to note about our processes model is that it runs significantly faster than the corresponding Standard Library objects model (to see this, simply increase the run length for both models and run them one after another). The speed difference is due to the overhead associated with the additional functionality provided by the Standard Library objects (such as automatic collection of statistics, animation, collision detection on paths, resource failures, etc.).

As mentioned above, most Simio models that you build will use the Standard Library objects and it’s unlikely that you’ll build complete models using only Simio processes. However, processes are fundamental to Simio and it is important to understand how they work. We’ll revisit this topic in more detail in Section 5.1.4, but for now we’ll return to our initial model using the Standard Library objects.

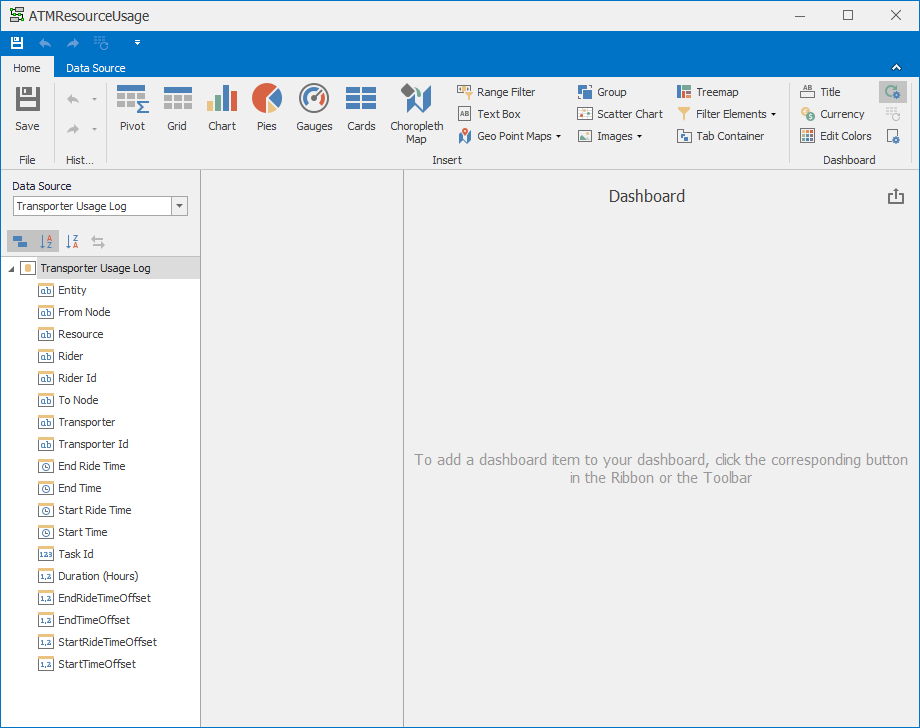

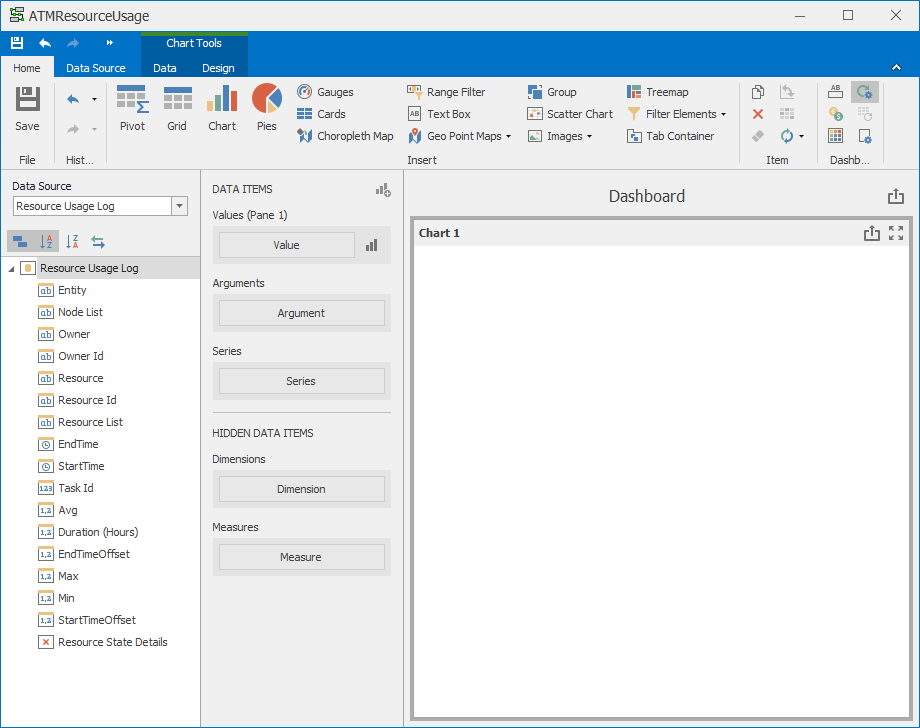

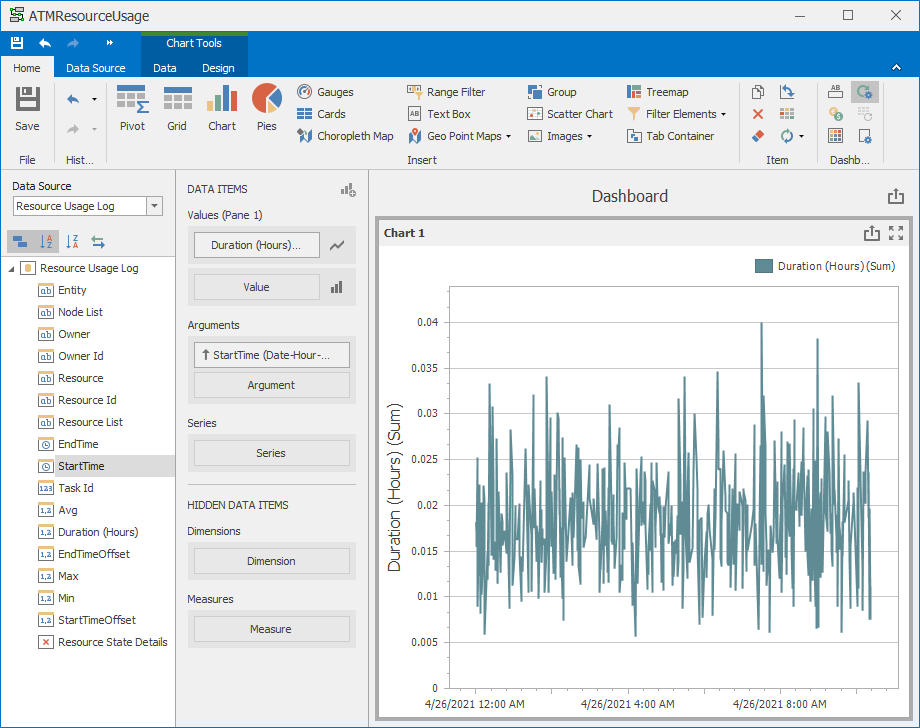

4.4 Model 4-3: Automated Teller Machine (ATM)

In developing our initial Simio models, we focused on an arbitrary queueing system with entities and servers — very boring. Our focus for this section and for Section 4.8 is to add some context to the models so that they’ll more closely represent the types of “real” systems that simulation is used to analyze. We’ll continue to enhance the models over the remaining chapters in this Part of the book as we continue to describe the general concepts of simulation modeling and explore the features of Simio. In Models 4-1 and 4-2 we used observations from the exponential distribution for entity inter-arrival and service times. We did this so that we could exploit the mathematical “niceness” of the resulting \(M/M/1\) queueing model in order to demonstrate the basics of randomness in simulation. However, in many modeling situations, entity inter-arrivals and service times don’t follow nice exponential distributions. Simio and most other simulation packages can sample from a wide variety of distributions to support general modeling. Models 4-3 and 4-4 will demonstrate the use of a triangular distribution for the service times, and the models in Chapter 5 will demonstrate the use of many of the other standard distributions. Section 6.1 discusses how to specify such input probability distributions in practice so that your simulation model will validly represent the reality you’re modeling.

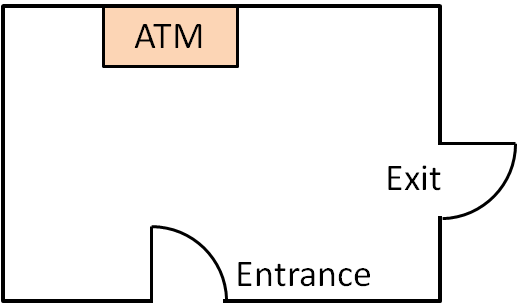

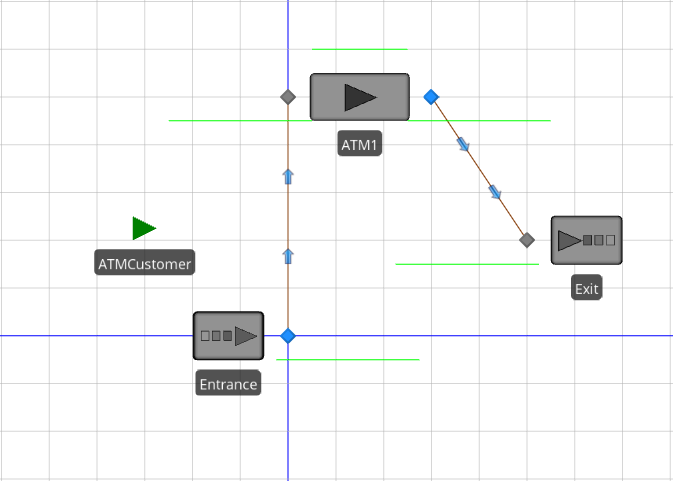

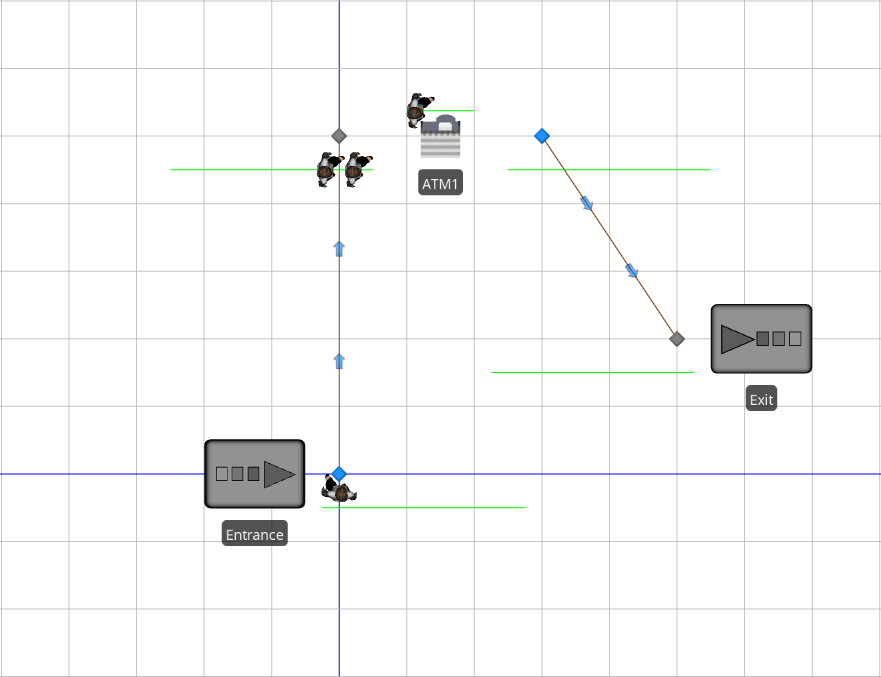

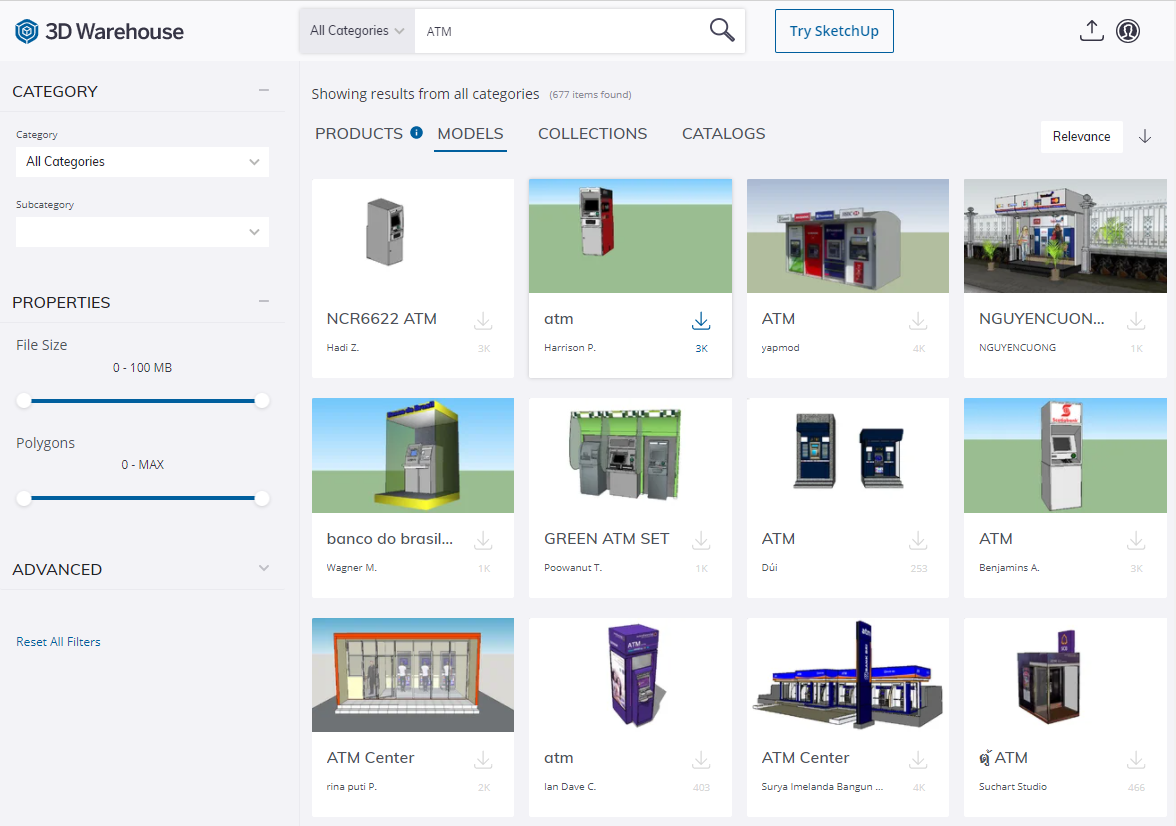

Model 4-3 models the automated teller machine (ATM) shown in Figure 4.19. Customers enter through the door marked Entrance, walk to the ATM, use the ATM, and walk to the door marked Exit and leave. For this model, we’ll assume that the room containing the ATM is large enough to handle any number of customers waiting to use the ATM (this will make our model a bit easier, but is certainly not required and we’ll revisit the use of limited-capacity queues in future chapters). With this assumption, we basically have a single-server queueing model similar to the one shown in Figure 4.1. As such, we’ll start with Model 4-1 and modify the model to get our ATM model (be sure to use the Save Project As option to save Model 4-3 initially so that you don’t over-write your file for Model 4-1). The completed ATM model (Model 4-3) is shown in Figure 4.20.

Figure 4.19: ATM example.

Figure 4.20: Model 4-3: ATM example.

The required modifications are as follows:

Update the object names to reflect the new model context (

ATMCustomerfor entities,Entrancefor the Source object,ATM1for the Server object, andExitfor the Sink object);Rearrange the model so that it “looks” like the figure;

Change the Connector and entity objects so that the model includes the customer walk time; and

Change the ATM processing-time distribution so that the ATM transaction times follow a triangular distribution with parameters (0.25, 1.00, 1.75) minutes (that is, between 0.25 and 1.75 minutes, with a mode of 1.00 minute).

Updating the object names doesn’t affect the model’s running characteristics or performance, but naming the objects can greatly improve model readability (especially for large or complicated models). As such, you should get into the habit of naming objects and adding meaningful descriptions using the Description property. Renaming objects can be done by either selecting the object, hitting the F2 key, and typing the new name; or by editing the Name property for the object. Rearranging the model to make it look like the system being modeled is very easy — Simio maintains the connections between objects as you drag the object around the model. Note that in addition to moving objects, you can also move the individual object input and output nodes.

In our initial queueing model (Model 4-1) we assumed that entities simply “appeared’’ at the server upon arrival. The Simio Connector object supported this type of entity transfer. This is clearly not the case in our ATM model where customers walk from the entrance to the ATM and from the ATM to the exit (most models of”real” systems involves some type of similar entity movement). Fortunately, Simio provides several objects from the Standard Library to facilitate modeling entity movements:

Connector — Transfers entities between objects in zero simulation time (i.e., instantly, at infinite speed);

Path — Transfers entities between objects using the distance between objects and entity speed to determine the movement time;

TimePath — Transfers entities between objects using a user-specified movement-time expression; and

Conveyor — Models physical conveyors.

We’ll use each of these methods over the next few chapters, but we’ll use Paths for the ATM model (note that the Simio Reference Guide, available via the F1 key or the ? icon in the upper right of the Simio window, provides detailed explanations of all of these objects). Since we’re modifying Model 4-1, the objects are already connected using Connectors. The easiest way to change a Connector to a Path is to right-click on the Connector and choose the Path option from the Convert to Type sub-menu. This is all that’s required to change the connection type. Alternatively, we could delete the Connector object and add the Path object manually by clicking on the Path in the Standard Library and then selecting the starting and ending nodes for the Path.