Simio and Simulation: Modeling, Analysis, Applications - 7th Edition

Chapter 6 Input Analysis

In the simulation models of Chapters 3-5, there were many places where we needed to specify probability distributions for input, as part of the modeling process. In Model 3-3 we said that the demand for hats was a discrete uniform random variable on the integers {1000, 1001, …, 5000}. In Models 3-5, 4-1, and 4-2, we said that interarrival times to the queueing system had an exponential distribution with mean 1.25 minutes, and that service times were exponentially distributed with mean 1 minute; in Models 4-3 and 4-4, we changed that service-time distribution to be triangularly distributed between 0.25 and 1.75 with a mode of 1.00, which is probably a more realistic shape for a service-time distribution than is the exponential (which has a mode of 0). The models in Chapter 5 used exponential, triangular, and uniform input distributions to represent interarrival, processing, inspection, and rework times. And in the models in the chapters to follow there will be many places where we need to specify probability distributions for a wide variety of inputs that are probably best modeled with some uncertainty, rather than as fixed constants. This raises two questions:

How do you specify such input distributions in practice (as opposed to just making them up, which we authors get to do, after some experimentation since we’re trying to demonstrate some ideas and make some points)?

Once you’ve somehow specified these input distributions, how do you “generate” values from them to make your simulation go?

We’ll discuss both in this chapter, but mostly the first question since it’s something that you will have to do as part of model building. The second question is fortunately pretty much covered within simulation software like Simio; still, you need to understand the basics of how those things work so you can properly design and analyze your simulation experiments.

We do assume in this chapter (and for this whole book in general) that you’re already comfortable with the basics of probability and statistics. This includes:

All the probability topics itemized at the beginning of Chapter 2.

Random sampling, and estimation of means, variances, and standard deviations.

Independent and identically distributed (IID) data observations and RVs.

Sampling distributions of estimators of means, variances, and standard deviations.

Point estimation of means, variances, and standard deviations, including the idea of unbiasedness.

Confidence intervals for means and other population parameters, and how they’re interpreted.

Hypothesis tests for means and other population parameters (though we’ll briefly discuss in this chapter a specific class of hypothesis tests we need, goodness-of-fit tests), including the concept of the p-value (a.k.a. observed significance level) of a hypothesis test.

If you’re rusty on any of these concepts, we strongly suggest that you now dust off your old probability/statistics books and spend some quality time reviewing before you read on here. You don’t need to know by heart lots of formulas, nor have memorized the normal or \(t\) tables, but you do need familiarity with the ideas. There’s certainly a lot more to probability and statistics than the above list, like Markov and other kinds of stochastic processes, regression, causal path analysis, data mining, and many, many other topics, but what’s on this list is all we’ll really need.

In Section 6.1 we’ll discuss methods to specify univariate input probability distributions, i.e., when we’re concerned with only one scalar variate at a time, independently across the model. Section 6.2 surveys more generally the kinds of inputs to a simulation model, including correlated, multivariate, and process inputs (one important example of which is a nonstationary Poisson process to represent arrivals with a time-varying rate). In Section 6.3 we’ll go over how to generate random numbers (continuous observations distributed uniformly between 0 and 1), which turns out to be a lot trickier than many people think, and the (excellent) random-number generator that’s built into Simio. Section 6.4 describes how those random numbers are transformed into observations (or realizations or draws) from the probability distributions and processes you decided to use as inputs for your model. Finally, Section 6.5 describes the use of Simio’s Input Parameters and the associated input analysis that they support.

6.1 Specifying Univariate Input Probability Distributions

In this section we’ll discuss the common task of how to specify the distribution of a univariate random variable for input to a simulation. Section 6.1.1 describes our overall tactic, and Section 6.1.2 delineates options for using observed real-world data. Sections 6.1.3 and 6.1.4 go through choosing one or more candidate distributions, and then fitting these distributions to your data; Section 6.1.5 goes into a bit more detail on assessing whether fitted distributions really are a good fit. Section 6.1.6 discusses some general issues in distribution specification.

6.1.1 General Approach

Most simulations will have several places where we need to specify probability distributions to represent random numerical inputs, like the demand for hats, interarrival times, service times, machine up/down times, travel times, or batch sizes, among many other examples. So for each of these inputs, just by themselves, we need to specify a univariate probability distribution (i.e., for just a one-dimensional RV, not multivariate or vector-valued RVs). We also typically assume that these input distributions are independent of each other across the simulation model (though we’ll briefly discuss in Section 6.2.2 the possibility and importance of allowing for correlated or vector-valued random inputs to a simulation model).

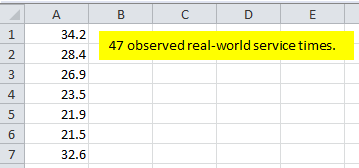

For example, in most queueing-type simulations we need to specify distributions for service times, which could represent processing a part at a machine in a manufacturing system, or examining a patient in an urgent-care clinic. Typically we’ll have real-world data on these times, either already available or collected as part of the simulation project. Figure 6.1 shows the first few rows of the Excel spreadsheet file Data_06_01.xls (downloadable from the “students” section of the book’s website as described in Section C.4), which contains a sample of 47 service times, in minutes, one per row in column A. We’ll assume that our data are IID observations on the actual service times, and that they were taken during a stable and representative period of interest. We seek a probability distribution that well represents our observed data, in the sense that a fitted distribution will “pass” statistical goodness-of-fit hypothesis tests, illustrated below. Then, when we run the simulation, we’ll “generate” random variates (observations or samples or realizations of the underlying service-time RV) from this fitted distribution to drive the simulation.

Figure 6.1: Data file with 47 observed service times (in minutes).

6.1.2 Options for Using Observed Real-World Data

You might wonder why we don’t take what might seem to be the more natural approach of just using our observed real-world service times directly by reading them right into the simulation, rather than taking this more indirect approach of fitting a probability distribution, and then generating random variates from that fitted distribution to drive the simulation. There are several reasons:

As you’ll see in later chapters, we typically want to run simulations for a very long time and often repeat them for a large number of IID replications in order to be able to draw statistically valid and precise conclusions from the output, so we’d simply run out of real-world input data in short order.

The observed data in Figure 6.1 and in Data_06_01.xls represent only what happened when the data were taken at that time. At other times, we would have observed different data, perhaps just as representative, and in particular could have gotten a different range (minimum and maximum) of data. If we were to use our observed data directly to drive the simulation, we’d be stuck with the values we happened to get, and could not generate anything outside our observed range. Especially when modeling service times, large values, even if infrequent, can have great impact on typical queueing congestion measures, like average time in system and maximum queue length, so by “truncating” the possible simulated service times on the right, we could be biasing our simulation results.

Unless the sample size is quite large, the observed data could well leave gaps in regions that should be possible but in which we just didn’t happen to get observations that time, so such values could never happen during the simulation.

So, there are several reasons why fitting a probability distribution, and then generating random variates from it to drive the simulation, is often preferred over using the observed real-world data directly. One specific situation where driving the simulation directly from the observed real-world data might be a good idea is in model validation, to assess whether your simulation model accurately reproduces the performance of the real-world system. Doing this, though, would require that you have the output data from the real-world system against which to match up your simulation outputs, which might not be the case if your data-collection efforts were directed at specifying input distributions. However, some recent work ((Song, Nelson, and Pegden 2014), (Song and Nelson 2015)) has shown that there are cases where sampling from sets of observed data is preferable to fitting a probability distribution. We will briefly discuss this topic and the Simio method for sampling from observed data sets in Section 6.5. Our initial job, then, is to figure out which probability distribution best represents our observed data.
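
The range and gap limitations of driving a simulation directly from observed data can be seen in a small sketch. The service times below are made-up illustrative values (they are not the contents of Data_06_01.xls), and the function name `resample` is our own; the point is only that resampling can never produce a value that wasn't observed.

```python
import random

# Hypothetical observed service times in minutes (NOT the book's data file).
observed = [19.0, 21.3, 22.8, 24.1, 25.6, 27.9, 30.2, 34.7, 41.5, 58.3]

def resample(data, n, rng):
    """Draw n values directly from the observed data, with replacement."""
    return [rng.choice(data) for _ in range(n)]

rng = random.Random(12345)
draws = resample(observed, 100_000, rng)

# However many values we draw, none can fall outside the observed range,
# and none can land in the gaps between observed values.
largest_draw = max(draws)
distinct_values = sorted(set(draws))
print(largest_draw, len(distinct_values))
```

No matter how long the run, the largest simulated service time here is capped at the largest observed one, which is exactly the right-tail truncation described above.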

6.1.3 Choosing Probability Distributions

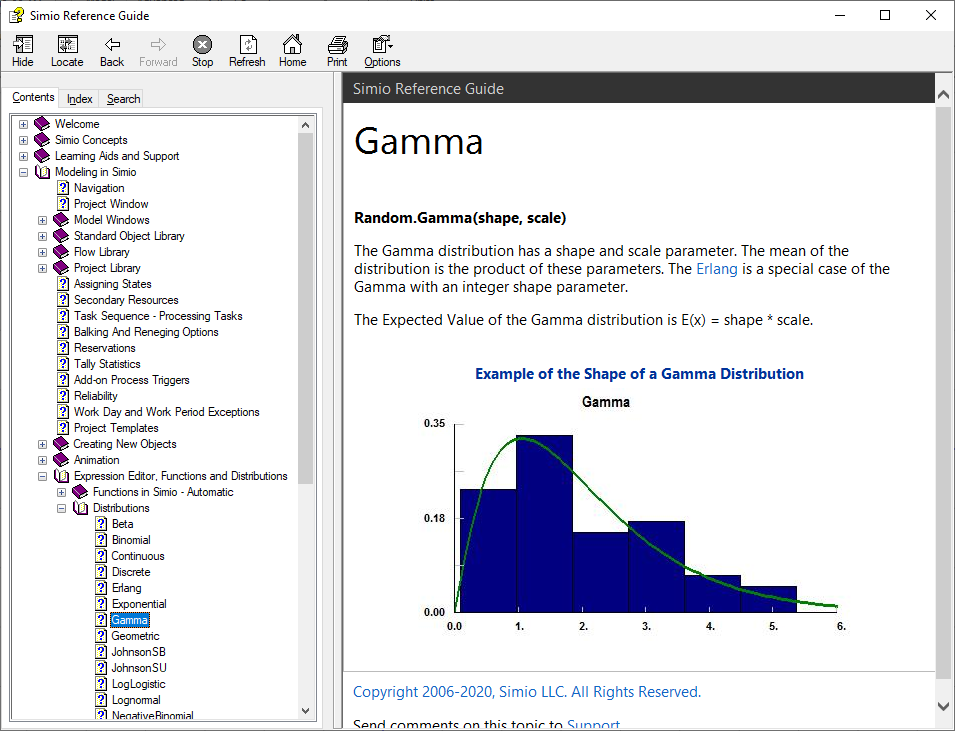

Of course, there are many probability distributions from which to choose in order to model random inputs for your model, and you’re probably familiar with several. Common continuous distributions include the normal, exponential, continuous uniform, and triangular; on the discrete side you may be familiar with discrete uniform, binomial, Poisson, geometric, and negative binomial. We won’t provide a complete reference on all these distributions, as that’s readily available elsewhere. The Simio Reference Guide (in Simio itself, tap the F1 key or click the ? icon near the upper right corner) provides basic information on the approximately 20 distributions supported (i.e., which you can specify in your Simio model and Simio will generate random variates from them), via the Simio Reference Guide Contents \(\rightarrow\) Modeling in Simio \(\rightarrow\) Expression Editor, Functions and Distributions \(\rightarrow\) Distributions, to select the distribution you want. Figure 6.2 shows this path on the left, and the gamma-distribution entry on the right, which includes a plot of the PDF (the smooth continuous line since in this case it’s a continuous distribution), a histogram of possible data that might be well fitted by this distribution, and some basic information about it at the top, including the syntax and parameterization for it in Simio. There are also extended discussions and chapters describing probability distributions useful for simulation input modeling, such as (Seila, Ceric, and Tadikamalla 2003) and (Law 2015); moreover, entire multi-volume books (e.g., (Johnson, Kotz, and Balakrishnan 1994), (Johnson, Kotz, and Balakrishnan 1995), (Johnson, Kemp, and Kotz 2005), (Evans, Hastings, and Peacock 2000)) have been written describing in great depth many, many probability distributions. Of course, numerous websites offer compendia of distributions. 
A search on “probability distributions” returned over 69 million results, such as https://en.wikipedia.org/wiki/List_of_probability_distributions; this page in turn has links to web pages on well over 100 specific univariate distributions, divided into categories based on range (or support) for both discrete and continuous distributions, like https://en.wikipedia.org/wiki/Gamma_distribution, to take the same gamma-distribution case as in Figure 6.2. Be aware that distributions are not always parameterized the same way, so you need to take care with what matches up with Simio; for instance, even the simple exponential distribution is sometimes parameterized by its mean \(\beta > 0\), as it is in Simio, but sometimes with the rate \(\lambda = 1/\beta\) of the associated Poisson process with events happening at mean rate \(\lambda\), so inter-event times are exponential with mean \(\beta = 1/\lambda\).
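
As a concrete illustration of the parameterization issue, Python's standard `random.expovariate` takes the rate \(\lambda\), not the mean \(\beta\), so a mean of 1.25 minutes has to be converted before use. This is only a sketch outside of Simio; the helper name `exponential_by_mean` is our own.

```python
import random

def exponential_by_mean(mean, rng):
    """Exponential variate specified by its mean (beta)."""
    # random.expovariate expects the RATE lambda = 1/mean.
    return rng.expovariate(1.0 / mean)

rng = random.Random(2024)
mean_interarrival = 1.25            # minutes, as in Models 3-5, 4-1, and 4-2
rate = 1.0 / mean_interarrival      # arrivals per minute

sample = [exponential_by_mean(mean_interarrival, rng) for _ in range(200_000)]
sample_mean = sum(sample) / len(sample)
print(f"rate = {rate:.2f} per minute, sample mean = {sample_mean:.3f} minutes")
```

Passing 1.25 straight into a rate-based generator would instead give interarrival times with mean 0.8 minutes, an easy mistake to make and a hard one to spot later.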

Figure 6.2: Gamma-distribution entry in the Simio Reference Guide.

With such a bewildering array of possibly hundreds of probability distributions, how do you choose one? First of all, it should make qualitative sense. By that we mean several things:

It should match whether the input quantity is inherently discrete or continuous. A batch size should not be modeled as a continuous RV (unless perhaps you generate it and then round it to the nearest integer), and a time duration should generally not be modeled as a discrete RV.

Pay attention to the range of possible values the RV can take on, in particular whether it’s finite or infinite on the right and left, and whether that’s appropriate for a particular input. As mentioned above, whether the right tail of a distribution representing service times is finite or infinite can matter quite a lot for queueing-model results like time (or number) in queue or system.

Related to the preceding point, you should be wary of using distributions that have infinite tails both ways, in particular to the left. This of course includes the normal distribution (though you know it and love it) since its PDF always has an infinite left (and right) tail, so will always extend to the left of zero and will thus always have a positive probability of generating a negative value. This makes no sense for things like service times and other time-duration inputs. Yes, it’s true that if the mean is three or four standard deviations above zero, then the probability of realizing a negative value is small, and basic statistics books sometimes say that you can thus just ignore it. The actual probabilities of getting negative values are 0.00134990 and 0.00003167 for, respectively, the mean’s being three and four standard deviations above zero. But remember, this is computer simulation where we could easily generate hundreds of thousands, even millions of random variates from our input probability distributions, so it could well happen that you’ll eventually get a negative one, which, depending on how that’s handled in your simulation when it doesn’t make sense, could create undesired or even erroneous results (Simio actually terminates your run and generates an error message for you if this happens, which is the right thing for it to do). The above two probabilities are respectively about one in 741 and one in 31,574, hardly out of the realm of possibility in simulation. If you find yourself wanting to use the normal distribution due to its shape (and maybe being a good fit to your data), know that there are other distributions, notably the Weibull, that can match the shape of the normal very closely, but have no tail to the left of zero in their PDFs, so have no chance of generating negative values.
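
The two tail probabilities quoted above are easy to check for yourself. The sketch below computes the standard normal CDF from the complementary error function in Python's `math` module; the function name `normal_cdf` is our own.

```python
import math

def normal_cdf(z):
    """Standard normal CDF, via the complementary error function."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

for k in (3, 4):
    p_negative = normal_cdf(-k)   # P(X < 0) when the mean is k std devs above 0
    print(f"mean = {k} sd above zero: P(negative) = {p_negative:.8f}, "
          f"about one in {1.0 / p_negative:,.0f}")
```

Multiplying these probabilities by the number of variates you expect to generate in a run gives a rough idea of how many negative values to expect.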

So getting discrete vs. continuous right, and the range right, is a first step and will narrow things down.

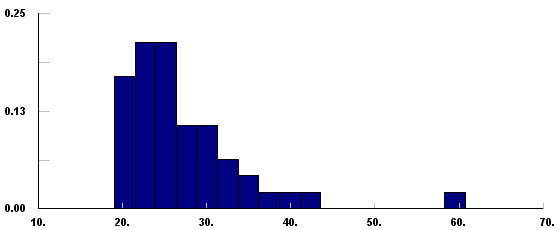

But still, there could be quite a few distributions remaining from which to choose, so now you need to look for one whose shape (PMF for discrete or PDF for continuous) resembles, at least roughly, the shape of a histogram of your observed real-world data. The reason for this is that the histogram is an empirical graphical estimate of the true underlying PMF or PDF of the data. Figure 6.3 shows a histogram (made with part of the Stat::Fit\(^{(R)}\) distribution-fitting software, discussed more below) of the 47 observed service times from Figure 6.1 and the file Data_06_01.xls. Since this is a service time, we should consider a continuous distribution, and one with a finite left tail (to avoid generating negative values), and perhaps an infinite right tail, in the absence of information placing an upper bound on how large service times can possibly be. Browsing through a list of PDF plots of distributions (such as the Simio Reference Guide cited above and in Simio via the F1 key or the ? icon near the upper right corner), possibilities might be Erlang, gamma (a generalization of Erlang), lognormal, Pearson VI, or Weibull. But each of these has parameters (like shape and scale, for the gamma and Weibull) that we need to estimate; we also need to test whether such distributions, with their parameters estimated from the data, provide an acceptable fit, i.e., an adequate representation of our data. This estimating and goodness-of-fit testing is what we mean by fitting a distribution to observed data.

Figure 6.3: Histogram of the observed 47 service-times (in minutes) in data file.

6.1.4 Fitting Distributions to Observed Real-World Data

Since there are several stand-alone third-party packages available that do such distribution fitting and subsequent goodness-of-fit testing, Simio does not provide this capability internally. One such package is Stat::Fit\(^{(R)}\) from Geer Mountain Software Corporation (www.geerms.com), which we chose to discuss in part since it has a free textbook version available for download as described in Appendix C. Another reason we chose Stat::Fit is that it can export the specification of the fitted distribution in the proper parameterization and syntax for simple copy/paste directly into Simio. A complete Stat::Fit manual comes with the textbook-version download as a .pdf file, so we won’t attempt to give a complete description of Stat::Fit’s capabilities, but rather just demonstrate some of its basics. Other packages include the Distribution Fitting tool in @RISK\(^{(R)}\) (Lumivero Corporation, https://lumivero.com/, formerly Palisade Corporation), and ExpertFit\(^{(R)}\) (Averill M. Law & Associates, http://www.averill-law.com).

See Appendix Section C.4.3 for instructions to get and install the textbook version of Stat::Fit.

The Stat::Fit help menu is based on Windows Help, an older system no longer directly supported in Windows Vista or later. The Microsoft web site support.microsoft.com/kb/917607 has a full explanation as well as links to download an operating-system-specific version of WinHlp32.exe that should solve the problem and allow you to gain access to Stat::Fit’s help system. Remember, there’s an extensive .pdf manual included in the same .zip file for Stat::Fit that you can download from this book’s web site.

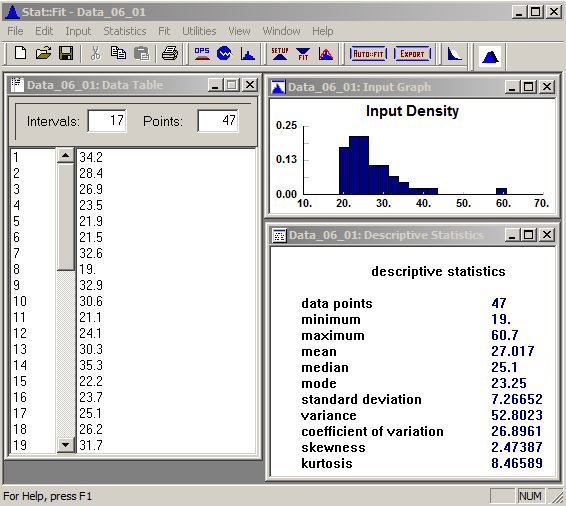

Figure 6.4 shows Stat::Fit with our service-time data pasted into the Data Table on the left. You can just copy/paste your observed real-world data directly from Excel; only the first 19 of the 47 values present appear, but all 47 are there and will be used by Stat::Fit. In the upper right is the histogram of Figure 6.3, made via the menu path Input \(\rightarrow\) Input Graph (or the Graph Input button on the toolbar); we changed the number of intervals from the default 7 to 17 (Input \(\rightarrow\) Options, or the Input Options button labeled just OPS on the toolbar). In the bottom right window are some basic descriptive statistics of our observed data, made via the menu path Statistics \(\rightarrow\) Descriptive.

Figure 6.4: Stat::Fit with data file, histogram, and descriptive statistics (data in minutes).

To view PMFs and PDFs of the supported distributions, follow the menu path Utilities \(\rightarrow\) Distribution Viewer and then select among them via the pull-down field in the upper right of that window. Note that the free textbook version of Stat::Fit includes only seven distributions (binomial and Poisson for discrete; exponential, lognormal, normal, triangular, and uniform for continuous) and is limited to 100 data points, but the full commercial version supports 33 distributions and allows up to 8000 observed data points.

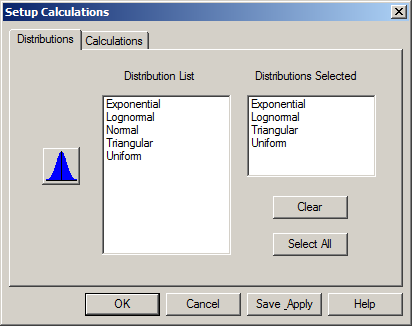

We want a continuous distribution to model our service times, and don’t want to allow even a small chance of generating a negative value, so the qualitatively sensible choices from this list are exponential, lognormal, triangular, and uniform (from among those in the free textbook version — the full version, of course, would include many other possible distributions). Though the histogram shape certainly would seem to exclude uniform, we’ll include it anyway just to demonstrate what happens if you fit a distribution that doesn’t fit very well. To select these four distributions for fitting, follow the menu path Fit \(\rightarrow\) Setup, or click the Setup Calculations button (labeled just SETUP) on the toolbar, to bring up the Setup Calculations window. In the Distributions tab (Figure 6.5), click one at a time on the distributions you want to fit in the Distribution List on the left, which copies them one by one to the Distributions Selected list on the right; if you want to remove one from the right, just click on it there.

Figure 6.5: Stat::Fit Setup Calculations window, Distributions tab.

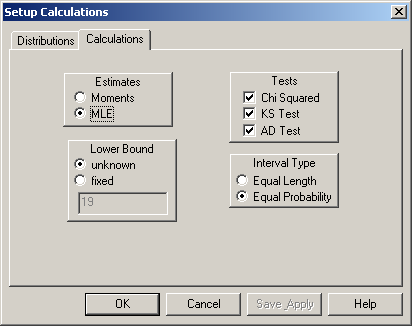

The Calculations tab (Figure 6.6) has choices about the method to form the Estimates (MLE, for Maximum Likelihood Estimation, is recommended), and the Lower Bound for the distributions (which you can select as unknown and allow Stat::Fit to choose one that best fits your data, or specify a fixed lower bound if you want to force that). The Calculations tab also allows you to pick the kinds of goodness-of-fit Tests to be done: Chi Squared, Kolmogorov-Smirnov, or Anderson-Darling, and, if you selected the Chi Squared test, the kind of intervals that will be used (Equal Probability is usually best). See Section 6.1.5 for a bit more on assessing goodness of fit; for more detail see (Banks et al. 2005) or (Law 2015).

Figure 6.6: Stat::Fit Setup Calculations window, Calculations tab.

The Lower Bound specification requires a bit more explanation. Many distributions, like exponential and lognormal on our list, usually have zero as their customary lower bound, but it may be possible to achieve a better fit to your data with a non-zero lower bound, essentially sliding the PDF left or right (usually right for positive data like service times) to match up better with the histogram of your data. If you select fixed here you can decide yourself where you’d like the lower end of your distribution to start (in which case the field below it becomes active so you can enter your value — the default is the minimum value in your data set, and entering 0 results in the customary lower bound). But if you select unknown here the numerical field becomes inactive since this selection lets Stat::Fit make this determination, which will usually be a bit less than the minimum observation in your data set to get a good fit. Click Save Apply to save and apply all your selections.
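
To see what a lower bound does to the parameter estimates, here is a minimal sketch of maximum-likelihood fitting for a lognormal with a fixed lower bound: subtract the bound, take logarithms, and compute the mean and the (divide-by-\(n\)) standard deviation of the logs. We are not claiming this is how Stat::Fit works internally, and in particular it doesn't attempt the unknown option, where the bound itself is estimated. The data are made-up values, not the book's file, and the function name is our own.

```python
import math

def fit_shifted_lognormal(data, lower_bound):
    """MLE of (mu, sigma) for a lognormal shifted to start at lower_bound."""
    if lower_bound >= min(data):
        raise ValueError("lower bound must be below the smallest observation")
    logs = [math.log(x - lower_bound) for x in data]
    n = len(logs)
    mu = sum(logs) / n
    # The MLE of the variance divides by n (not n - 1).
    sigma = math.sqrt(sum((v - mu) ** 2 for v in logs) / n)
    return mu, sigma

# Hypothetical service times in minutes (NOT the book's data file).
times = [19.0, 21.3, 22.8, 24.1, 25.6, 27.9, 30.2, 34.7, 41.5, 58.3]

for bound in (0.0, 17.4):
    mu, sigma = fit_shifted_lognormal(times, bound)
    print(f"lower bound {bound:5.1f}: mu = {mu:.3f}, sigma = {sigma:.3f}")
```

Moving the bound changes both estimates, which is why the lower-bound choice and the fitted parameters have to be reported (and used) together.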

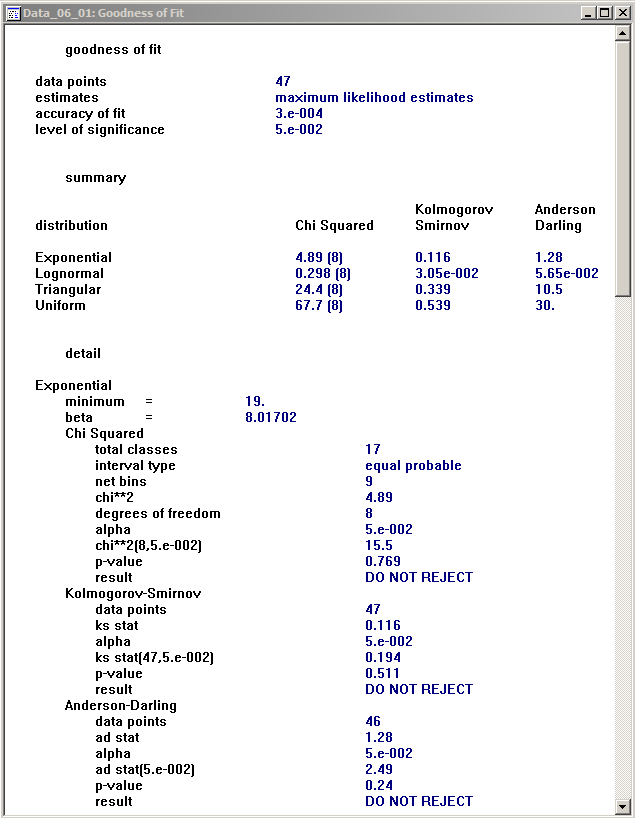

To fit the four distributions you chose to represent the service-time data, follow the menu path Fit \(\rightarrow\) Goodness of Fit, or click the Fit Data button (labeled just FIT) in the toolbar, to produce a detailed window of results, the first part of which is in Figure 6.7.

Figure 6.7: Stat::Fit Goodness of Fit results window (detail shown for only the exponential distribution; similar results for the lognormal, triangular, and uniform distributions are below these when scrolling down).

A brief summary is at the top, with the test statistics for all three tests applied to each distribution; for all of these tests, a smaller value of the test statistic indicates a better fit (the values in parentheses after the Chi Squared test statistics are the degrees of freedom). The lognormal is clearly the best fit, followed by exponential, then triangular, and uniform is the worst (largest test statistics).

In the “detail” section further down, you can find, well, details of the fit for each distribution in turn; Figure 6.7 shows these for only the exponential distribution, and the other three distributions’ fit details can be seen by scrolling down in this window. The most important parts of the detail report are the \(p\)-values for each of the tests, which for the fit of the exponential distribution is 0.769 for the Chi Squared test, 0.511 for the Kolmogorov-Smirnov test, and 0.24 for the Anderson-Darling test, with the “DO NOT REJECT” conclusion just under each of them. Recall that the \(p\)-value (for any hypothesis test) is the probability that you would get a sample more in favor of the alternate hypothesis than the sample that you actually got, if the null hypothesis is really true. For goodness-of-fit tests, the null hypothesis is that the candidate distribution adequately fits the data. So large \(p\)-values like these (recall that \(p\)-values are probabilities, so are always between 0 and 1) indicate that it would be quite easy to be more in favor of the alternate hypothesis than we are; in other words, we’re not very much in favor of the alternate hypothesis with our data, so that the null hypothesis of an adequate fit by the exponential distribution appears quite reasonable.

Another way of looking at the \(p\)-value is in the context of the more traditional hypothesis-testing setup, where we pre-choose \(\alpha\) (typically small, between 0.01 and 0.10) as the probability of a Type I error (erroneously rejecting a true null hypothesis), and we reject the null hypothesis if and only if the \(p\)-value is less than \(\alpha\); the \(p\)-values for all three of our tests for goodness of the exponential fit are well above any reasonable value of \(\alpha\), so we’re not even close to rejecting the null hypothesis that the exponential fits well. If you’re following along in Stat::Fit (which you should be), and you scroll down in this window you’ll see that for the lognormal distribution the \(p\)-values of these three tests are even bigger — they show up as 1, though of course they’re really a bit less than 1 before roundoff error, but in any case provide no evidence at all against the lognormal distribution’s providing a good fit. But, if you scroll further on down to the triangular and uniform fit details, the \(p\)-values are tiny, indicating that the triangular and uniform distributions provide terrible fits to the data (as we already knew from glancing at the histogram in the case of the uniform, but maybe not so much for the triangular).

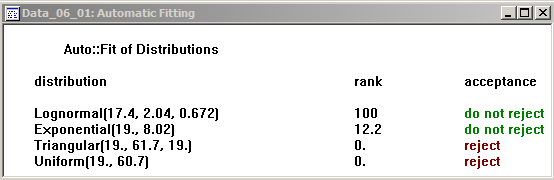

If all you want is a quick summary of which distributions might fit your data and which ones probably won’t, you could just do Fit \(\rightarrow\) Auto::Fit, or click the Auto::fit button in the toolbar, to get first the Auto::Fit dialogue (not shown) where you should select the continuous distributions and lower bound buttons for our service times (since we don’t want an infinite left tail), then OK to get the Automatic Fitting results window in Figure 6.8.

Figure 6.8: Stat::Fit Auto::fit results.

This gives the overall acceptance (or not) conclusion for each distribution, referring to the null hypothesis of an adequate fit, without the \(p\)-values, and in addition the parameters of the fitted distributions (with Stat::Fit’s parameterization conventions, which, as noted earlier, are not universally agreed to, and so in particular may not agree with Simio’s conventions in every case). The rank column is an internal score given by Stat::Fit, with larger ranks indicating a better fit (so lognormal is the best, consistent with the \(p\)-value results from the detailed report).

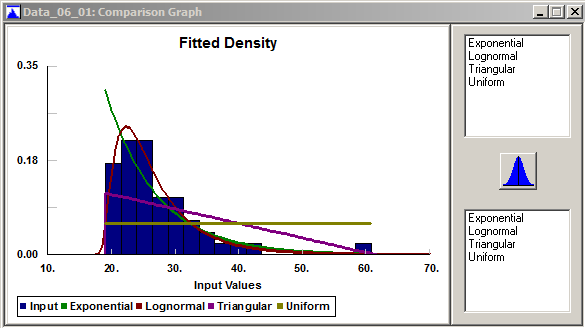

A graphical comparison of the fitted densities against the histogram is available via Fit \(\rightarrow\) Result Graphs \(\rightarrow\) Density, or the Graph Fit button on the toolbar, shown in Figure 6.9.

Figure 6.9: Stat::Fit overlays of the fitted densities over the data histogram.

By clicking on the distributions in the upper right you can overlay them all (in different colors per the legend at the bottom); you can get rid of them by clicking in the lower-right window. This provides our favorite goodness-of-fit test, the eyeball test, and provides a quick visual check (so we see just how ridiculous the uniform distribution is for our service-time data, and the triangular isn’t much better).

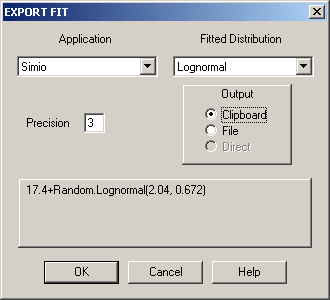

The final step is to translate the results into the proper syntax for copying and direct pasting into Simio expression fields. This is on File \(\rightarrow\) Export \(\rightarrow\) Export fit, or the Export toolbar button, and brings up the EXPORT FIT dialog shown in Figure 6.10.

Figure 6.10: Stat::Fit EXPORT FIT dialogue.

The top left drop-down contains a list of several simulation-modeling packages, Simio among them, and the top right drop-down shows the distributions you’ve fit. Choose the distribution you want, let’s say the fitted lognormal, then select the Clipboard radio button just below and see the valid Simio expression 17.4+Random.Lognormal(2.04, 0.672) in the panel below. This expression now occupies the Windows clipboard, so if you go into the Simio application at this point and paste CTRL-V into an expression field that accepts a probability distribution, you’re done. Note that Stat::Fit is recommending that we shift the lognormal distribution up (to the right) by 17.4 in order to get a better fit to our data, rather than stay with the customary lower end of zero for the lognormal distribution; this shift value of 17.4 is a little less than the minimum value of 19.0 in the data set, so that the fitted lognormal density function will “hit zero” on the left just a bit to the left of the minimum data value (so when generating variates, the smallest possible value will be 17.4).
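
If you want to sanity-check an exported expression outside of Simio, you can mimic it in a few lines. This sketch assumes that the two arguments of Random.Lognormal are the mean and standard deviation of the underlying normal distribution (check the Simio Reference Guide entry to confirm), which is the same convention that Python's `random.lognormvariate` uses.

```python
import random

LOWER_BOUND = 17.4   # shift reported by Stat::Fit in the text
NORMAL_MEAN = 2.04   # ASSUMED: mean of the underlying normal
NORMAL_SD = 0.672    # ASSUMED: standard deviation of the underlying normal

def service_time(rng):
    """One draw mimicking 17.4 + Random.Lognormal(2.04, 0.672)."""
    return LOWER_BOUND + rng.lognormvariate(NORMAL_MEAN, NORMAL_SD)

rng = random.Random(6)
draws = [service_time(rng) for _ in range(100_000)]
print(f"smallest draw = {min(draws):.2f}, mean = {sum(draws) / len(draws):.2f}")
```

Every generated value sits above the 17.4 shift, as the discussion of the lower bound says it should.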

You can save all your Stat::Fit work, including the data, distribution selection, results, and graphics. As usual, do File \(\rightarrow\) Save or Save As, or the usual Save button. The default filename extension is .sfp (for Stat::Fit Project); we chose the file name Data_06_01.sfp.

6.1.5 More on Assessing Goodness of Fit

In Section 6.1.4 we gave an overview of fitting distributions to data with Stat::Fit, and testing how well the various candidate distributions do in terms of representing our observed data set. There are many ways to assess goodness of fit, far more than we can cover in depth; see (Banks et al. 2005) or (Law 2015) for more detail. But in this section we’ll describe what’s behind some of the main methods we described above, including formal hypothesis tests as well as informal visual heuristics. We won’t treat the Anderson-Darling test; see (Banks et al. 2005) or (Law 2015).

6.1.5.1 Chi-Squared Goodness-of-Fit Test

In Figure 6.9, what we’re looking for in a good fit is good (or at least reasonable) agreement between the histogram (the blue bars) and the PDFs of the fitted distributions (the lines of various colors in the legend at the bottom); for this discussion we’ll assume we’re fitting a continuous distribution. While this desire is intuitive, there’s also a mathematical reason for it.

Let \(n\) be the number of real-world observed data points (so \(n = 47\) in our example), and let \(k\) be the number of intervals defining the histogram along the horizontal axis (so \(k = 17\) in our example); for now, we’ll assume that the intervals are all of equal width \(w\) as they would be in a histogram, though they don’t need to be for the chi-squared test, and in many cases they shouldn’t be \(\ldots\) more on that below. Let the left endpoints of the \(k\) intervals be denoted \(x_0, x_1, x_2, \ldots, x_{k-1}\) and let \(x_k\) be the right endpoint of the \(k\)th interval, so the \(j\)th interval is \(\left[x_{j-1}, x_j\right)\) for \(j = 1, 2, \ldots, k\). If \(O_j\) of our \(n\) observations fall in the \(j\)th interval (the observed frequency of the data in that interval), then \(O_j/n\) is the proportion of the data falling into the \(j\)th interval.

Now, let \(\widehat{f}(x)\) be the PDF of the fitted distribution under consideration. If this is really the right PDF to represent the data, then the probability that a data point in our observed sample falls in the \(j\)th interval \(\left[x_{j-1}, x_j\right)\) would be \[p_j = \int_{x_{j-1}}^{x_j} \widehat{f}(x) dx,\] and this should be (approximately) equal to the observed proportion \(O_j/n\). So if this is actually a good fit, we would expect \(O_j/n \approx p_j\) for all intervals \(j\). Multiplying this through by \(n\), we would expect that \(O_j \approx np_j\) for all intervals \(j\), i.e., the observed and expected (if \(\widehat{f}\) really is the right density function for our data) frequencies within the intervals should be “close” to each other; if they’re in substantial disagreement for too many intervals, then we would suspect a poor fit. This is really what our earlier eyeball test is doing. But even in the case of a really good fit, we wouldn’t demand \(O_j = n p_j\) (exactly) for all intervals \(j\), simply due to natural fluctuation in random sampling. To formalize this idea, we form the chi-squared test statistic \[\chi^2_{k-1} = \sum_{j=1}^{k}\frac{\left( O_j - np_j \right)^2}{n p_j},\] and under the null hypothesis \(H_0\) that the observed data follow the fitted distribution \(\widehat{f}\), \(\chi^2_{k-1}\) has approximately (more precisely, asymptotically, i.e., as \(n \rightarrow \infty\)) a chi-squared distribution with \(k-1\) degrees of freedom. So we’d reject the null hypothesis \(H_0\) of a good fit if the value of the test statistic \(\chi^2_{k-1}\) is “too big” (or inflated as it’s sometimes called).
How big is “too big” is determined by the chi-squared tables: for a given size \(\alpha\) of the test, we’d reject \(H_0\) if \(\chi^2_{k-1} > \chi_{k-1,1-\alpha}^2\) where \(\chi_{k-1,1-\alpha}^2\) is the point above which is probability \(\alpha\) in the chi-squared distribution with \(k-1\) degrees of freedom. The other way of stating the conclusion is to give the \(p\)-value of the test, which is the probability above the test statistic \(\chi^2_{k-1}\) in the chi-squared distribution with \(k-1\) degrees of freedom; as usual with \(p\)-values, we reject \(H_0\) at level \(\alpha\) if and only if the \(p\)-value is less than \(\alpha\).
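To make the arithmetic concrete, here is a minimal Python sketch of the test statistic above. It is only an illustration, not what Stat::Fit does internally: the 20 data values, the interval endpoints, and the choice of an exponential fit (with mean set to the sample mean) are all made up for the example, and the function name `chi_squared_statistic` is our own.

```python
import math

def chi_squared_statistic(data, endpoints, cdf):
    """Chi-squared statistic for the intervals [x_{j-1}, x_j), j = 1..k.

    endpoints = [x_0, x_1, ..., x_k]; cdf is the fitted CDF F-hat.
    Returns (statistic, observed frequencies O_j, expected frequencies n*p_j).
    """
    n = len(data)
    k = len(endpoints) - 1
    observed = [0] * k
    for x in data:
        for j in range(k):
            if endpoints[j] <= x < endpoints[j + 1]:
                observed[j] += 1
                break
    # p_j is the integral of the fitted PDF over interval j, i.e. a CDF difference.
    expected = [n * (cdf(endpoints[j + 1]) - cdf(endpoints[j])) for j in range(k)]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return stat, observed, expected

# Hypothetical data (NOT the book's 47 service times).
sample = [0.31, 0.12, 1.45, 0.78, 2.30, 0.05, 0.92, 1.10, 0.47, 3.20,
          0.66, 0.22, 1.85, 0.58, 0.09, 1.30, 0.40, 2.75, 0.83, 0.17]
mean = sum(sample) / len(sample)

def exponential_cdf(x):
    # Fitted exponential CDF; assumption: mean estimated by the sample mean.
    return 1.0 - math.exp(-x / mean)

# Hypothetical endpoints; the last interval runs to infinity so the p_j sum to 1.
stat, observed, expected = chi_squared_statistic(
    sample, [0.0, 0.5, 1.0, 2.0, math.inf], exponential_cdf)
print(observed, [round(e, 2) for e in expected], round(stat, 3))
```

The resulting statistic would then be compared with a chi-squared critical value (or converted to a \(p\)-value) from tables or a statistics package; that lookup is left out here.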

Note that the value of the test statistic \(\chi^2_{k-1}\), and perhaps even the conclusion of the test, depends on the choice of the intervals. How to choose these intervals is a question that has received considerable research, but there’s no generally accepted “right” answer. If there is consensus, it’s that (1) the intervals should be chosen equiprobably, i.e., so that the probability values \(p_j\) of the intervals are equal to each other (or at least approximately so), and (2) \(np_j\) should be at least (about) 5 for all intervals \(j\) (the latter condition is basically to discourage use of the chi-squared goodness-of-fit test if the sample size is quite small). One way to find the endpoints of equiprobable intervals is to set \(x_j = \widehat{F}^{-1}(j/k)\), where \(\widehat{F}\) is the CDF of the fitted distribution, and the superscript \(-1\) denotes the functional inverse (not the arithmetic reciprocal); this entails solving the equation \(\widehat{F}(x_j) = j/k\) for \(x_j\), which may or may not be straightforward, depending on the form of \(\widehat{F}\), and so may require use of a numerical-approximation root-finding algorithm like the secant method or Newton’s method.
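As a sketch of solving \(\widehat{F}(x_j) = j/k\) numerically, the Python fragment below finds equiprobable interval endpoints for a lognormal CDF, which has no closed-form inverse. Note that it uses plain bisection rather than the secant or Newton’s method mentioned above, simply because bisection is the easiest to get right; the lognormal parameters, the search bracket, and all function names are assumptions for illustration.

```python
import math

def lognormal_cdf(x, mu, sigma):
    """CDF of a lognormal whose logarithm is normal(mu, sigma)."""
    if x <= 0.0:
        return 0.0
    return 0.5 * (1.0 + math.erf((math.log(x) - mu) / (sigma * math.sqrt(2.0))))

def invert_cdf(cdf, prob, lo, hi, tol=1e-10):
    """Solve cdf(x) = prob by bisection, assuming cdf(lo) <= prob <= cdf(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < prob:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def equiprobable_endpoints(cdf, k, lo, hi):
    """Interior endpoints x_1, ..., x_{k-1} satisfying cdf(x_j) = j/k."""
    return [invert_cdf(cdf, j / k, lo, hi) for j in range(1, k)]

# Hypothetical fitted parameters and k = 4 intervals.
def fitted_cdf(x):
    return lognormal_cdf(x, mu=0.0, sigma=0.5)

interior = equiprobable_endpoints(fitted_cdf, k=4, lo=0.0, hi=100.0)
print([round(x, 4) for x in interior])
```

The outer endpoints \(x_0\) and \(x_k\) are the ends of the fitted distribution’s range (0 and \(\infty\) for the lognormal), so only the interior endpoints need to be computed.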

The chi-squared goodness-of-fit test can also be applied to fitting a discrete distribution to a data set whose values must be discrete (like batch sizes). In the foregoing discussion, \(p_j\) is just replaced by the sum of the fitted PMF values within the \(j\)th interval, and the procedure is the same. Note that for discrete distributions it will generally not be possible to attain exact equiprobability on the choice of the intervals.

6.1.5.2 Kolmogorov-Smirnov Goodness-of-Fit Test

While chi-squared tests amount to comparing the fitted PDF (or PMF in the discrete case) to an empirical PDF or PMF (a histogram), Kolmogorov-Smirnov (K-S) tests compare the fitted CDF to a certain empirical CDF defined directly from the data. There are different ways to define empirical CDFs, but for this purpose we’ll use \(F_{\mbox{emp}}(x)\) = the proportion of the observed data that are \(\leq x\), for all \(x\); note that this is a step function that is continuous from the right, with a step of height \(1/n\) occurring at each of the (ordered) observed data values. As before, let \(\widehat{F}(x)\) be the CDF of a particular fitted distribution. The K-S test statistic is then the largest vertical discrepancy between \(F_{\mbox{emp}}(x)\) and \(\widehat{F}(x)\) along the entire range of possible values of \(x\); expressed mathematically, this is \[V_n = \sup_x \left|F_{\mbox{emp}}(x) - \widehat{F}(x)\right|,\] where “sup” is the supremum, or the least upper bound across all values of \(x\). The reason for not using the more familiar max (maximum) operator is that the largest vertical discrepancy might occur just before a “jump” of \(F_{\mbox{emp}}(x)\), in which case the supremum won’t actually be attained exactly at any particular value of \(x\).

A finite (i.e., computable) algorithm to evaluate the K-S test statistic \(V_n\) is given in (Law 2015). Let \(X_1, X_2, \ldots, X_n\) denote the observed sample, and for \(i = 1, 2, \ldots, n\) let \(X_{(i)}\) denote the \(i\)th smallest of the data values (so \(X_{(1)}\) is the smallest observation and \(X_{(n)}\) is the largest observation); \(X_{(i)}\) is called the \(i\)th order statistic of the observed data. Then the K-S test statistic is \[V_n = \max\left\{\ \ \max_{i = 1, 2, \ldots, n}\left[ \frac{i}{n} - \widehat{F}(X_{(i)}) \right],\ \ \max_{i = 1, 2, \ldots, n}\left[ \widehat{F}(X_{(i)}) - \frac{i-1}{n} \right]\ \ \right\}. \]
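The order-statistic formula above translates almost line for line into code. The following Python sketch is an illustration only; the function name `ks_statistic`, the three data values, and the uniform(0, 1) fitted CDF are hypothetical choices made so the result is easy to check by hand.

```python
def ks_statistic(data, cdf):
    """K-S statistic V_n computed from the order statistics X_(1) <= ... <= X_(n)."""
    xs = sorted(data)
    n = len(xs)
    # Largest amount by which the empirical CDF exceeds the fitted CDF (at a jump).
    above = max(i / n - cdf(x) for i, x in enumerate(xs, start=1))
    # Largest amount by which the fitted CDF exceeds the empirical CDF (just before a jump).
    below = max(cdf(x) - (i - 1) / n for i, x in enumerate(xs, start=1))
    return max(above, below)

def uniform01_cdf(x):
    # Hypothetical fitted CDF: uniform on [0, 1].
    return min(max(x, 0.0), 1.0)

v_n = ks_statistic([0.75, 0.25, 0.5], uniform01_cdf)
print(v_n)  # 0.25: the gap 1 - 0.75 at the largest point (and 0.25 - 0 at the smallest)
```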

It’s intuitive that the larger \(V_n\), the worse the fit. To decide how large is too large, we need tables or reference distributions for critical values of the test for given test sizes \(\alpha\), or to determine \(p\)-values of the test. A disadvantage of the K-S test is that, unlike the chi-squared test, the K-S test needs different tables (or reference distributions) for different hypothesized distributions and different sample sizes \(n\) (which is why we include \(n\) in the notation \(V_n\) for the K-S test statistic); see (Gleser 1985) and (Law 2015) for more on this point. Distribution-fitting packages like Stat::Fit include these reference tables as built-in capabilities that can provide \(p\)-values for K-S tests. Advantages of the K-S test over the chi-squared test are that it does not rely on a somewhat arbitrary choice of intervals, and it is accurate for small sample sizes \(n\) (not just asymptotically, as the sample size \(n \rightarrow \infty\)).

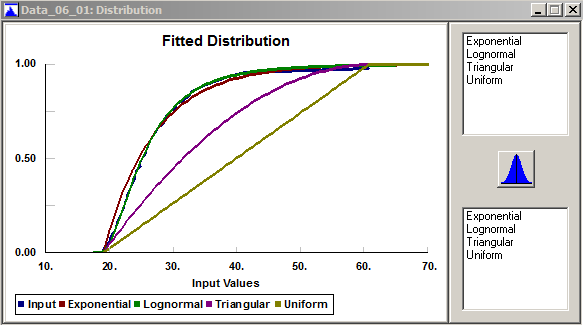

In Stat::Fit, the menu path Fit \(\rightarrow\) Result Graphs \(\rightarrow\) Distribution produces the plot in Figure 6.11 with the empirical CDF in blue, and the CDFs of various fitted distributions in different colors per the legend at the bottom; adding/deleting fitted distributions works as in Figure 6.9. While the K-S test statistic is not shown in Figure 6.11 (for each fitted distribution, imagine it as the height of a vertical bar at the worst discrepancy between the empirical CDF and that fitted distribution), it’s easy to see that the uniform and triangular CDFs are very poor matches to the empirical CDF in terms of the largest vertical discrepancy, and that the lognormal is a much better fit (in fact, it’s difficult to distinguish it from the empirical CDF in Figure 6.11).

Figure 6.11: Stat::Fit overlays of the fitted CDFs over the empirical distribution.

6.1.5.3 P-P Plots

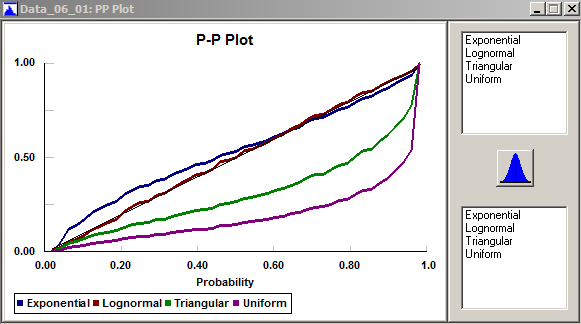

Let’s assume for the moment that the CDF \(\widehat{F}\) of a fitted distribution really is a good fit to the true underlying unknown CDF of the observed data. If so, then for each \(i = 1, 2, \ldots, n\), \(\widehat{F}(X_{(i)})\) should be close to the empirical proportion of data points that are at or below \(X_{(i)}\). That proportion is \(i/n\), but just for computational convenience we’d rather stay away from both 0 and 1 in that proportion (for fitted distributions with an infinite tail), so we’ll make the small adjustment and use \((i-1)/n\) instead for this empirical proportion. To check out heuristically (not a formal hypothesis test) whether \(\widehat{F}\) actually is a good fit to the data, we’ll gauge whether \((i-1)/n \approx \widehat{F}(X_{(i)})\) for \(i = 1, 2, \ldots, n\), by plotting the points \(\left((i-1)/n, \widehat{F}(X_{(i)})\right)\) for \(i = 1, 2, \ldots, n\); if we indeed have a good fit then these points should fall close to a diagonal straight line from \((0, 0)\) to \((1, 1)\) in the plot. Since both the \(x\) and \(y\) coordinates of these points are probabilities (empirical and fitted, respectively), this is called a probability-probability plot, or a P-P plot.
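A minimal Python sketch of computing the P-P plot coordinates follows. It uses the \((i-1)/n\) convention from the text (other adjustments to the empirical proportion are also in use elsewhere); the function name `pp_points`, the data, and the uniform(0, 1) fitted CDF are hypothetical, chosen so that the points land exactly on the diagonal.

```python
def pp_points(data, cdf):
    """Return the P-P plot points ((i-1)/n, F-hat(X_(i))) for i = 1, ..., n."""
    xs = sorted(data)
    n = len(xs)
    return [((i - 1) / n, cdf(x)) for i, x in enumerate(xs, start=1)]

def uniform01_cdf(x):
    # Hypothetical fitted CDF: uniform on [0, 1].
    return min(max(x, 0.0), 1.0)

points = pp_points([0.5, 0.0, 0.75, 0.25], uniform01_cdf)
for empirical, fitted in points:
    print(empirical, fitted)
```

Passing these pairs to any plotting tool, together with the line from \((0, 0)\) to \((1, 1)\), gives the kind of picture described above.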

In Stat::Fit, P-P plots are available via the menu path Fit \(\rightarrow\) Result Graphs \(\rightarrow\) PP Plot, to produce Figure 6.12 for our 47-point data set and our four trial fitted distributions. As in Figures 6.9 and 6.11, you can add fitted distributions by clicking them in the upper right box, and remove them by clicking them in the lower right box. The P-P plot for the lognormal fit appears quite close to the diagonal line, signaling a good fit, and the P-P plots for the triangular and uniform fits are very far from the diagonal, once again signaling miserable fits for those distributions. The exponential fit appears not unreasonable, though not as good as the lognormal.

Figure 6.12: Stat::Fit P-P plots.

6.1.5.4 Q-Q Plots

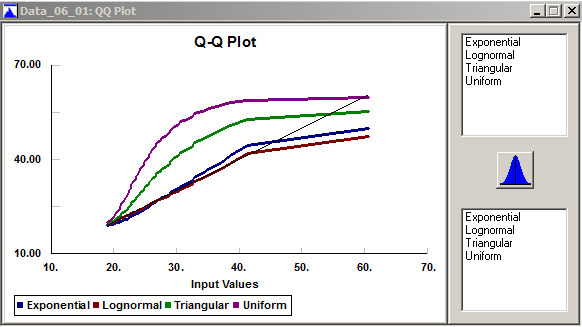

The idea in P-P plots was to see whether \((i-1)/n \approx \widehat{F}(X_{(i)})\) for \(i = 1, 2, \ldots, n\). If we apply \(\widehat{F}^{-1}\) (the functional inverse of the CDF \(\widehat{F}\) of the fitted distribution) across this approximate equality, we get \(\widehat{F}^{-1}\left((i-1)/n\right) \approx X_{(i)}\) for \(i = 1, 2, \ldots, n\), since \(\widehat{F}^{-1}\) and \(\widehat{F}\) “undo” each other. Here, the left-hand side is a quantile of the fitted distribution (the value below which lies a given probability or proportion, in this case \((i-1)/n\)). Note that a closed-form formula for \(\widehat{F}^{-1}\) may not be available, mandating a numerical approximation. So if \(\widehat{F}\) really is a good fit to the data, and we plot the points \(\left(\widehat{F}^{-1}\left((i-1)/n\right), X_{(i)}\right)\) for \(i = 1, 2, \ldots, n\), we should again get an approximately straight diagonal line, this time not between \((0, 0)\) and \((1, 1)\), but rather between \(\left(X_{(1)}, X_{(1)}\right)\) and \(\left(X_{(n)}, X_{(n)}\right)\). (Actually, Stat::Fit reverses the order and plots the points \(\left(X_{(i)}, \widehat{F}^{-1}\left((i-1)/n\right)\right)\), but that doesn’t change the fact that we’re looking for a straight line.) Now, both the \(x\) and \(y\) coordinates of these points are quantiles (fitted and empirical, respectively), so this is called a quantile-quantile plot, or a Q-Q plot.
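Here is a small Python sketch of the Q-Q plot coordinates, using an exponential fit because its inverse CDF has the closed form \(\widehat{F}^{-1}(u) = -\mu \ln(1-u)\) for mean \(\mu\). The function names, the mean of 2.0, and the data are hypothetical; the data are deliberately constructed to be exact quantiles so that every point falls on the diagonal.

```python
import math

def exponential_quantile(u, mean):
    """Inverse CDF of the exponential distribution with the given mean."""
    return -mean * math.log(1.0 - u)

def qq_points(data, quantile):
    """Return the Q-Q plot points (F-hat^{-1}((i-1)/n), X_(i)) for i = 1, ..., n."""
    xs = sorted(data)
    n = len(xs)
    return [(quantile((i - 1) / n), x) for i, x in enumerate(xs, start=1)]

def fitted_quantile(u):
    # Hypothetical fitted distribution: exponential with mean 2.0.
    return exponential_quantile(u, mean=2.0)

# Hypothetical data built as exact fitted quantiles (a "perfect fit" by construction).
perfect_data = [fitted_quantile((i - 1) / 5) for i in range(1, 6)]
points = qq_points(perfect_data, fitted_quantile)
for fitted, empirical in points:
    print(round(fitted, 4), round(empirical, 4))
```

For a fitted distribution without a closed-form inverse, `quantile` could be replaced by any numerical root-finder applied to the CDF.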

You can make Q-Q plots in Stat::Fit via Fit \(\rightarrow\) Result Graphs \(\rightarrow\) QQ Plot, to produce Figure 6.13 for our data and our fitted distributions. As in Figures 6.9, 6.11, and 6.12, you can choose which fitted distributions to display. The lognormal and exponential Q-Q plots appear to be the closest to the diagonal line, signaling a good fit, except in the right tail where neither does well. The Q-Q plots for the triangular and uniform distributions indicate very poor fits, consistent with our previous findings. According to (Law 2015), Q-Q plots tend to be sensitive to discrepancies between the data and fitted distributions in the tails, whereas P-P plots are more sensitive to discrepancies through the interiors of the distributions.

Figure 6.13: Stat::Fit Q-Q plots.

6.1.6 Distribution-Fitting Issues

In this section we’ll briefly discuss a few issues and questions that often arise when trying to fit distributions to observed data.

6.1.6.1 Are My Data IID? What If They Aren’t?

A basic and important assumption underlying all the distribution-fitting methods and goodness-of-fit testing discussed in Sections 6.1.3–6.1.5 is that the real-world data observed for a model input are IID: Independent and Identically Distributed. As a point of logic, this is really two assumptions, both of which must hold if the methods in Sections 6.1.3–6.1.5 are to be valid:

“I”: Each data point is probabilistically/statistically independent of any other data point in your data set. This might be suspect if the data were sequentially collected on a process over time (as might often be the case) where an observation has some kind of relationship with the next or later observations, either a “physical” causal relationship or an apparent statistical-correlation relationship.

“ID”: The underlying probability distribution or process giving rise to the data is the same for each data point. This might be suspect if the conditions during data collection changed in a manner that could affect the data’s underlying distribution, or if there is heterogeneity in the data set in terms of the sources of the observations.

It’s always good to have a contextual understanding of the system being modeled, and of the way in which the data were collected. While there are formal statistical tests for both the “I” and “ID” in IID, we’ll focus on a few simple graphical methods to check these assumptions informally, and where possible, suggest courses of action if either assumption appears unwarranted from the data.

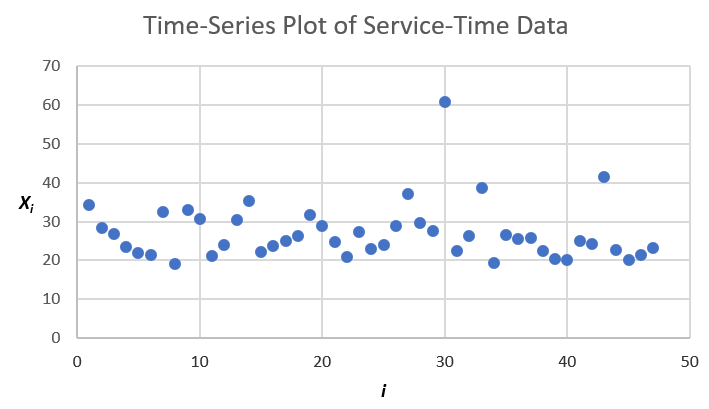

As before, let \(X_1, X_2, \ldots, X_n\) denote the observed data, but now suppose that the subscript \(i\) is the time-order of observation, so \(X_1\) is the first observation in time, \(X_2\) is the second observation in time, etc. An easy first step to assess IID-ness is simply to make a time-series plot of the data, which is just a plot of \(X_i\) on the vertical axis vs. the corresponding \(i\) on the horizontal axis; see Figure 6.14, which is for the 47 service times we considered in Sections 6.1.1–6.1.5. This plot is in the Excel spreadsheet file Data_06_02.xls; the original data from Data_06_01.xls are repeated in column B, with the time-order of observation \(i\) added in column A (ignore the other columns for now). For IID data, this plot should look rather like an amorphous and uninteresting cloud of points, without apparent visible persistent trends up or down or periodicities (which could happen if the “ID” is violated and the distribution is drifting or cycling over time), or strong systematic connections between successive points (which could happen if the “I” is violated), which seems to be the case for these data.

Figure 6.14: Time-series plot of the 47 service times.

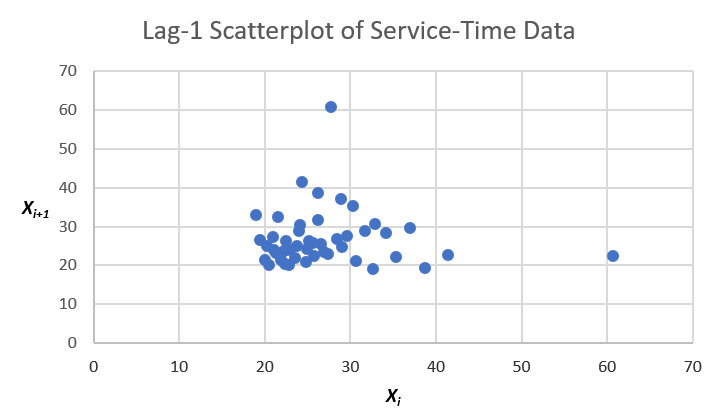

Another easy plot, more specifically aimed at detecting non-independence between adjacent observations in the data (called lag-1 in the time series of collection), is a scatter plot of the adjacent pairs \((X_i, X_{i+1})\) for \(i = 1, \ldots, n-1\); for the 47 service times see Figure 6.15, which is also in Data_06_02.xls (to make this plot in Excel we needed to create the new column C for \(X_{i+1}\) for \(i = 1, \ldots, n-1\)). If there were a lag-1 positive correlation the points would cluster around an upward-sloping line (negative correlation would result in a cluster around a downward-sloping line), and independent data would not display such clustering, as appears to be the case here. Stat::Fit can also make such lag-1 scatterplots of a data set via the menu path Statistics \(\rightarrow\) Independence \(\rightarrow\) Scatter Plot. If you want to check for possible non-independence at lag \(k > 1\), you can of course make similar plots except plotting the pairs \((X_i, X_{i+k})\) for \(i = 1, \ldots, n-k\) (Stat::Fit doesn’t do this, but you could fashion your own plots in Excel).

Figure 6.15: Scatter plot (at Lag 1) for the 47 service times.

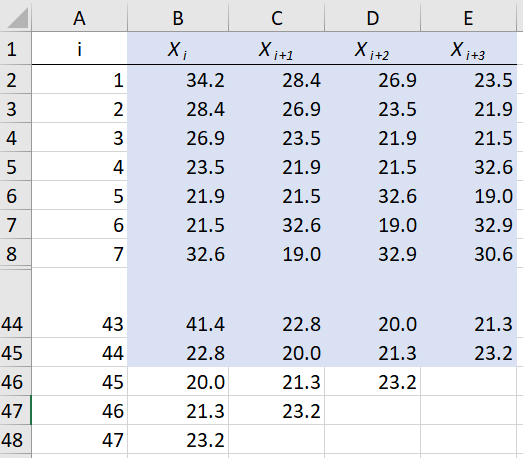

Time-series and scatter plots can provide good intuitive visualization of possible lack of independence, but there are numerical diagnostic measures as well. Perhaps most obvious is to construct a correlation matrix showing the numerical autocorrelation (“auto” since the correlation is within the same data sequence) estimates at various lags; we’ll do lags 1 through 3. Most standard statistical-analysis packages can do this automatically, but we can do it in Excel as well. Figure 6.16 shows columns A–E of Data_06_02.xls, except that rows 9–43 are hidden for brevity, and you can see that columns C, D, and E are the original data from column B except shifted up by, respectively, 1, 2, and 3 rows (representing lags in the time-order of observation).

Figure 6.16: Data-column arrangement for autocorrelation matrix in data file.

To make the autocorrelation matrix, use the Excel Data Analysis package, via the Data tab at the top and then the Analyze area on the right (if you don’t see the Data Analysis icon there, you need to load the Analysis ToolPak add-in via the File tab at the top, then Options on the bottom left to get the Excel Options window, then Add-ins on the left menu, and finally Manage Excel Add-ins at the bottom). Once you’re in the Data Analysis window, select Correlation, then OK, then select the input range $B$1:$E$45 (the blue-shaded cells, noting that we had to omit the last three rows since we want autocorrelations through lag 3), check the box for Labels in First Row, and then specify where you’d like the output to go (we selected Output Range $G$37). Figure 6.17 shows the results.

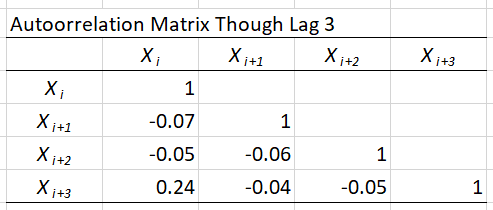

Figure 6.17: Autocorrelation matrix in data file.

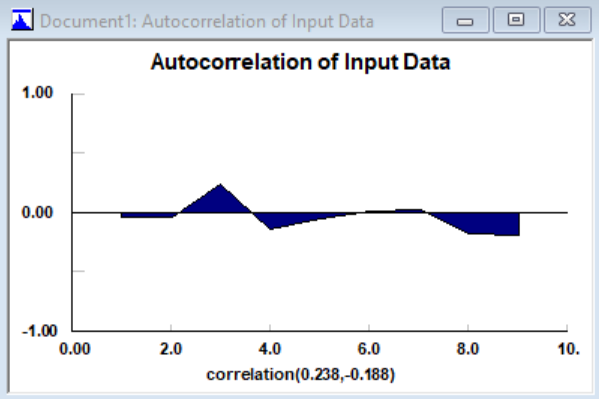

There are actually three estimates of the lag-1 autocorrelation (down the diagonal from upper left to lower right), \(-0.07\), \(-0.06\), and \(-0.05\) that are very close to each other since they are using almost the same data (except for a few data points at the ends of the sequence), and are all very small (remember that correlations always fall between \(-1\) and \(+1\)). Similarly, there are two estimates of the lag-2 autocorrelation, \(-0.05\) and \(-0.04\), again very close to each other and very small. The single estimate of the lag-3 autocorrelation, \(+0.24\), might indicate some positive relation between data points three observations apart, but it’s weak in any case (we’re not addressing here whether any of these autocorrelation estimates are statistically significantly different from zero). A visual depiction of the autocorrelations is possible via a plot (called a correlogram, or in this case within a single data sequence an autocorrelogram) with the lag (1, 2, 3, etc.) on the horizontal axis and the corresponding lagged autocorrelation on the vertical axis; Stat::Fit does this via the menu path Statistics \(\rightarrow\) Independence \(\rightarrow\) Autocorrelation, with the result in Figure 6.18 indicating the same thing as did the autocorrelation matrix.
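For readers who would rather not shift columns in a spreadsheet, the following Python sketch estimates the lag-\(k\) autocorrelation as the ordinary (Pearson) correlation of the pairs \((X_i, X_{i+k})\). This is one common estimator and is only an illustration: it uses all \(n-k\) available pairs at each lag, so its values need not match the Excel matrix exactly, which uses a common set of rows. The function names and the alternating test sequence are hypothetical.

```python
import math

def pearson(xs, ys):
    """Sample (Pearson) correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def lag_autocorrelation(data, k):
    """Correlation of the pairs (X_i, X_{i+k}), i = 1, ..., n-k, for a lag k >= 1."""
    return pearson(data[:-k], data[k:])

# Hypothetical, strongly dependent sequence: values alternate low/high.
alternating = [1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0, 2.0]
for lag in (1, 2, 3):
    print(lag, lag_autocorrelation(alternating, lag))
```

For this artificial sequence the lag-1 estimate is \(-1\) and the lag-2 estimate is \(+1\), the opposite extreme from the near-zero values we would hope to see for independent data.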

Figure 6.18: Autocorrelation plot via Stat::Fit in data file.

There are also many formal statistical hypothesis tests for independence within a data set. Among these are various kinds of runs tests, which look in a data sequence for runs, i.e., subsequences of data points that go only up (or down). In truly independent data the expected length and frequency of such runs can be computed, and the length and frequency of runs in the observed data are then compared against them for agreement (or not). Stat::Fit supports two other runs tests (above/below median and turning points), via the menu path Statistics \(\rightarrow\) Independence \(\rightarrow\) Runs Test (we’ll leave it to you to try this out).
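To give a feel for how such a test works, here is a minimal Python sketch of a turning-points test. A turning point is an interior observation that is either larger than both neighbors or smaller than both; for \(n\) IID continuous observations the expected number of turning points is \(2(n-2)/3\) with variance \((16n-29)/90\), and the standardized count is approximately standard normal for large \(n\). We make no claim that this reproduces Stat::Fit’s computation; the function name and the short data sequence are hypothetical.

```python
import math

def turning_points_test(data):
    """Return (count, expected count, z) for the turning-points test of independence."""
    n = len(data)
    count = sum(
        1
        for i in range(1, n - 1)
        if (data[i] > data[i - 1] and data[i] > data[i + 1])
        or (data[i] < data[i - 1] and data[i] < data[i + 1])
    )
    expected = 2.0 * (n - 2) / 3.0
    variance = (16.0 * n - 29.0) / 90.0
    z = (count - expected) / math.sqrt(variance)
    return count, expected, z

# Hypothetical zig-zag sequence: every interior point is a turning point.
count, expected, z = turning_points_test([1.0, 3.0, 2.0, 4.0, 3.0, 5.0])
print(count, round(expected, 3), round(z, 3))
```

A value of \(z\) far from zero in either direction (too many or too few turning points) would cast doubt on independence, though with a sample as tiny as this one the normal approximation should not be taken seriously.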

So it appears that the independence assumption for our 47 service times is at least plausible, having found no strong evidence to the contrary. Finding that your data are clearly not independent mandates some alternative course of action on your part, either in terms of modeling or further data collection. One important special case of this in simulation is a time-varying arrival pattern, which we discuss below in Section 6.2.3, and modeling this is well-supported by Simio. But in general, modeling non-independent input processes in simulation is difficult, and beyond the scope of this book; the interested reader might peruse the time-series literature (such as (Box, Jenkins, and Reinsel 1994)), for examples of methods for fitting various kinds of autoregressive processes, but generating them is generally not supported in simulation software.

Having focused on the “I” (Independent) assumption so far, let’s now consider the “ID” (Identically Distributed) assumption. Deviations from this assumption could be due to a heterogeneous data set, such as service times provided by several different operators with different levels of training and skill. This is sometimes detected by noting several distinct modes (peaks) in the histogram of all the service times merged together. A good way to deal with this is to try to track down which service times were provided by which operator, and then alter the simulation model to have distinct server resources, each with its own service-time distribution appropriate to that operator’s training or skill. Another example is in simulation of a hospital emergency department, where patients arrive with different acuities (severities) in their conditions, and the various service times during their stay could well depend on their acuity, so we would need different service-time distributions for different acuities. Deviations from the “ID” assumption could also happen if the distribution changes over time; in this case we could try to identify the change points (either from physical knowledge of the system, or from the data themselves) and alter the simulation model to use different distributions during different time periods.

6.1.6.2 What If Nothing Fits?

The process just described in Section 6.1.4 is a kind of best-case scenario, and things don’t always go so smoothly. It can definitely happen that, despite your (and Stat::Fit’s) best efforts to find a standard distribution that fits your data, alas, all your \(p\)-values are small and you reject all your distributions’ fits. Does this mean that your data are unacceptable or have somehow failed? No, on the contrary, it means that the “standard” list of distributions fails to have an entry that accommodates your data (which, after all, are “The Truth” about the phenomenon being observed, like service times — the burden is on the distributions to mold themselves to your data, not the other way around).

In this case, Stat::Fit can produce an empirical distribution, which is basically just a version of the histogram, and variates can be generated from it in the Simio simulation. On the Stat::Fit menus, do File \(\rightarrow\) Export \(\rightarrow\) Export Empirical, and select the Cumulative radio button (rather than the default Density button) for compatibility with Simio. This will copy onto the Windows clipboard a sequence of pairs \(v_i, c_i\) where \(v_i\) is the \(i\)th smallest of your data values, and \(c_i\) is the cumulative probability of generating a variate that is less than or equal to the corresponding \(v_i\). Exactly what happens after you copy this information into Simio depends on whether you want a discrete or continuous distribution. In the Simio Reference Guide (F1 or ? icon from within Simio), follow the Contents-tab path Modeling in Simio \(\rightarrow\) Expression Editor, Functions and Distributions \(\rightarrow\) Distributions, then select either Discrete or Continuous according to which you want:

If Discrete, the proper Simio expression is

Random.Discrete(v1, c1, v2, c2, ...)

where the sequence of pairs can go on as long as needed according to your data set, and you’ll generate each value \(v_i\) with cumulative probability \(c_i\) (e.g., the probability is \(c_4\) that you’ll generate a value less than or equal to \(v_4\), so you’ll generate a value equal to \(v_4\) with probability \(c_4 - c_3\)).

If Continuous, the Simio expression is

Random.Continuous(v1, c1, v2, c2, ...)

and the CDF from which you’ll generate will pass through each \((v_i, c_i)\) and will connect them with straight lines, so it will be a piecewise-linear “connect-the-dots” CDF rising from 0 at \(v_1\) to 1 at the largest (last) \(v_i\).

Note that in both the discrete and continuous cases, you’ll end up with a distribution that’s bounded both below (by your minimum data point) and above (by your maximum data point), i.e., it does not have an infinite tail in either direction.
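To illustrate what generating from such \((v_i, c_i)\) pairs amounts to, here is a Python sketch of inverse-transform sampling for both cases as described above. It is our own illustration of the idea and not Simio’s implementation; the function names and the example pairs are hypothetical, and in the continuous case we assume \(c_1 = 0\) and that the last \(c_i\) is 1.

```python
import bisect
import random

def sample_discrete(pairs, u):
    """Return the smallest v_i whose cumulative probability c_i is >= u."""
    cums = [c for _, c in pairs]
    return pairs[bisect.bisect_left(cums, u)][0]

def sample_continuous(pairs, u):
    """Invert the piecewise-linear CDF through the points (v_i, c_i) at probability u."""
    cums = [c for _, c in pairs]
    idx = bisect.bisect_left(cums, u)
    if idx == 0:
        return pairs[0][0]
    (v_lo, c_lo), (v_hi, c_hi) = pairs[idx - 1], pairs[idx]
    # Linear interpolation between the two CDF points that bracket u.
    return v_lo + (u - c_lo) / (c_hi - c_lo) * (v_hi - v_lo)

# Hypothetical (value, cumulative probability) pairs.
discrete_pairs = [(1.0, 0.2), (2.0, 0.7), (5.0, 1.0)]
continuous_pairs = [(1.0, 0.0), (2.0, 0.5), (4.0, 1.0)]

rng = random.Random(42)  # fixed seed so the sketch is repeatable
print([sample_discrete(discrete_pairs, rng.random()) for _ in range(5)])
print([round(sample_continuous(continuous_pairs, rng.random()), 3) for _ in range(5)])
```

In both functions `u` plays the role of a uniform(0, 1) random number, and every generated value lies between the smallest and largest \(v_i\), which is the boundedness noted above.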

Another approach to the “nothing fits” problem is afforded by Simio’s feature that allows drawing samples directly from a data table. Essentially you import the entire set of sample data into a Simio data table, then use an Input Parameter to draw random samples from the table. Refer to Section 6.5 to learn more about using Input Parameters based on Table Values.

6.1.6.3 What If I Have No Data?

Obviously, this is not a good situation. What people usually do is ask an “expert” familiar with the system (or similar systems) for, say, lowest and highest values that are reasonably possible, which then specify a uniform distribution. If you feel that uniform gives too much weight toward the extremes, you could instead use a triangular distribution with a mode (peak of the PDF, not necessarily the mean) that may or may not be in the middle of the range, depending on the situation. Once you’ve done such things and have a model working, you really ought to use your model as a sensitivity-analysis tool (see “What’s the Right Amount of Data?” just below) to try to identify which inputs matter most to the output, and then try very hard to get some data on at least those important inputs, and try to fit distributions. Simio’s Input Parameters feature, discussed in Section 6.5, may also provide guidance on which inputs matter most to key output performance metrics.
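As a quick illustration of the difference between the two choices, the Python sketch below draws from a uniform and from a triangular distribution built from the same expert-supplied range. The numbers (a low of 2, a high of 10, and a mode of 4 minutes) are entirely made up, and Python’s standard `random` module stands in for whatever simulation software you actually use.

```python
import random

rng = random.Random(2024)  # fixed seed so the sketch is repeatable

# Hypothetical expert guesses, in minutes.
low, high, mode = 2.0, 10.0, 4.0

uniform_draws = [rng.uniform(low, high) for _ in range(10000)]
triangular_draws = [rng.triangular(low, high, mode) for _ in range(10000)]

uniform_mean = sum(uniform_draws) / len(uniform_draws)
triangular_mean = sum(triangular_draws) / len(triangular_draws)

# Theoretical means: (low + high)/2 for uniform, (low + high + mode)/3 for triangular.
print(round(uniform_mean, 2), (low + high) / 2)
print(round(triangular_mean, 2), round((low + high + mode) / 3, 2))
```

Because the mode here is left of center, the triangular mean sits below the midpoint of the range, a reminder that the mode and the mean are different things.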

6.1.6.4 What If I Have “Too Much” Data?

Another extreme situation is when you have a very large sample size of observed real-world data of maybe thousands. Usually we’re happy to have a lot of data, and really, we are here too. We just have to realize that, with a very large sample size, the goodness-of-fit hypothesis tests will have high statistical power (probability of rejecting the null hypothesis when it’s really false, which strictly speaking, it always is), so it’s likely that we’ll reject the fits of all distributions, even though they may look perfectly reasonable in terms of the eyeball test. In such cases, we should remember that goodness-of-fit tests, like all hypothesis tests, are far from perfect, and we may want to go ahead and use a fitted distribution anyway even if goodness-of-fit tests reject it with a large sample size, provided that it passes the eyeball test.
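One way to see this effect for the chi-squared test: if the observed proportions \(q_j = O_j/n\) stay fixed while \(n\) grows, then substituting \(O_j = nq_j\) into the test statistic gives \(n \sum_j (q_j - p_j)^2/p_j\), which grows in direct proportion to \(n\). The Python sketch below shows this with made-up proportions that differ only slightly from the fitted probabilities; the function name and all numbers are hypothetical.

```python
def chi_squared_from_proportions(n, observed_props, fitted_probs):
    """Chi-squared statistic when O_j = n * q_j: n * sum((q_j - p_j)^2 / p_j)."""
    return n * sum((q - p) ** 2 / p for q, p in zip(observed_props, fitted_probs))

# Hypothetical: four equiprobable intervals and a small, fixed discrepancy.
fitted = [0.25, 0.25, 0.25, 0.25]
observed = [0.27, 0.24, 0.25, 0.24]

small_n = chi_squared_from_proportions(100, observed, fitted)
large_n = chi_squared_from_proportions(10000, observed, fitted)
print(round(small_n, 3), round(large_n, 3))
```

The same small mismatch that yields a negligible statistic at \(n = 100\) yields one 100 times larger at \(n = 10{,}000\), which is why a fit that looks fine to the eye can still be rejected with a very large sample.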

6.1.6.5 What’s the Right Amount of Data?

Speaking of sample size, people often wonder how much real-world data they need to fit a distribution. Of course, there’s no universal answer possible to such a question. One way to address it, though, is to use your simulation model itself as a sensitivity-analysis tool to gauge how sensitive some key outputs are to changes in your input distributions. Now you’re no doubt thinking “but I can’t even build my model if I don’t know what the input distributions are,” and strictly speaking, you’re right. However, you could build your model first, before even collecting real-world data to which to fit input probability distributions, and initially just use made-up input distributions — not arbitrary or crazy distributions, but in most cases you can make a reasonable guess using, say, simple uniform or triangular input distributions and someone’s general familiarity with the system. Then vary these input distributions to see which ones have the most impact on the output — those are your critical input distributions, so you might want to focus your data collection there, rather than on other input distributions that seem not to affect the output as much. As in the no-data case discussed above, Simio’s Input Parameters feature in Section 6.5 may also provide guidance as to how much relative effort should be expended collecting real-world data on your model’s various inputs.

6.1.6.6 What’s the Right Answer?

A final comment is that distribution specification is not an exact science. Two people can take the same data set and come up with different distributions, both of which are perfectly reasonable, i.e., provide adequate fits to the data, but are different distributions. In such cases you might consider secondary criteria, such as ease of parameter manipulation to effect changes in the distributions’ means. If that’s easier with one distribution than the other, you might go with the easier one in case you want to try different input-distribution means (e.g., what if you had a server that was 20% faster on average?).

6.2 Types of Inputs

Having discussed univariate distribution fitting in Section 6.1, we should now take a step back and think more generally about all the different kinds of numerical inputs that go into a simulation model. We might classify these along two dimensions in a \(2 \times 3\) classification: deterministic vs. stochastic, and scalar vs. multivariate vs. stochastic processes.

6.2.1 Deterministic vs. Stochastic

Deterministic inputs are just constants, like the number of automated check-in kiosks a particular airline has at a particular airport. This won’t change during the simulation run — unless, of course, the kiosks are subject to breakdowns at random points in time, and then have to undergo repair that lasts a random amount of time. Another example of deterministic input might be the pre-scheduled arrival times of patients to a dental office.

But wait, have you never been late (or early) to a dental appointment? So arrival times might be more realistically modeled as the (deterministic) scheduled time, plus a deviation RV that could be positive for a late arrival and negative for an early arrival (and maybe with expected value zero if we assume that patients are, on average, right on time, even if not in every case). This would be an example of a stochastic input, which involves (or just is) an RV. Actually, for this sort of a situation it’s more common to specify a distribution for the interarrival times, as we did in the spreadsheet-imprisoned queueing Model 3-5. Note that Simio provides exactly this feature with a Source object using an Arrival Type of Arrival Table.

Often, a given input to a simulation might arguably be either deterministic or stochastic:

The walking time of a passenger in an airport from the check-in kiosk to security. The distances are the same for everybody, but walking speeds clearly vary.

The number of items actually in a shipment, as opposed to the (deterministic) number ordered.

The time to stamp and cut an item at a machine in a stamping plant. This could be close to deterministic if the sheet metal off the roll is pulled through at a constant rate, and the raising/dropping of the stamping die is at a constant rate. Whether to try to model small variations in this using RVs would be part of the question of level of detail in model building (by the way, more detail doesn’t always lead to a “better” model).

The time to do a “routine” maintenance on a military vehicle. While what’s planned could be deterministic, we might want to model the extra (and random) time needed if more problems are uncovered.

Whether to model an input as deterministic or stochastic is a modeling decision. It’s obvious that you should do whichever matches the real system, but there still could be questions about whether it matters to the simulation output. Note: In Appendix 12 we will see how a given input might be deterministic during the planning phase and stochastic during the risk analysis phase.

6.2.2 Scalar vs. Multivariate vs. Stochastic Processes

If an input is just a single number, be it deterministic or stochastic, it’s a scalar value. Another term for this, used especially if the scalar is stochastic, is univariate. This is most commonly how we model inputs to simulations — one scalar number (or RV) at a time, typically with several such inputs across the model — and typically assumed to be statistically independent of each other. Our discussion in Section 6.1 tacitly assumed that our model is set up this way, with all stochastic inputs being univariate and independent of each other across the model. In a manufacturing model, such scalar univariate inputs could include the time for processing a part, and the time for subsequent inspection of it. And in an urgent-care clinic, such scalar univariate inputs might include interarrival times between successive arriving patients, their age, gender, insurance status, time taken in an exam room, diagnosis code, and disposition code (like go home, go to a hospital emergency room in a private car, or call for an ambulance ride to a hospital).

But there could be relationships between the different inputs across a simulation model, in which case we really should view them as components (or coordinates) of an input vector, rather than being independent; if some of these components are random, then this is called a random vector, or a multivariate distribution. Importantly, this allows for dependence and correlation across the coordinates of the input random vector, making it a more realistic input than if the coordinates were assumed to be independent — and this can affect the simulation’s output. In the manufacturing example of the preceding paragraph, we could allow, say, positive correlation between a given part’s processing and inspection times, reflecting the reality that some parts are bigger or more problematic than are other parts. It would also allow us to prevent generating absurdities in the urgent-care clinic like a young child suffering from arthritis (unlikely), or an elderly man with complications from pregnancy (beyond unlikely), both of which would be possible if all these inputs were generated independently, which is typically what we do. Another example is a fire-department simulation, where the number of fire trucks and ambulances sent out on a given call should be positively correlated (large fires could require several of each, but small fires perhaps just one of each); see (Mueller 2009) for how this was modeled and implemented in one project. While some such situations can be modeled just logically (e.g., for the health-care clinic, first generate gender and then do the obvious check before allowing a pregnancy-complications diagnosis), in other situations we can model the relationships statistically, with correlations or joint probability distributions. If such non-independence is in fact present in the real-world system, it can affect the simulation output results, so ignoring it and just generating all inputs independently across your model can lead to erroneous results.

One way to specify an input random vector is first to fit distributions to each of the component univariate random variables one at a time, as in Section 6.1; these are called the marginal distributions of the input random vector (since in the case of a two-dimensional discrete random vector, they could be tabulated on the margins of the joint-distribution table). Then use the data to estimate the cross correlations via the usual sample-correlation estimator discussed in any statistics book. Note that specifying the marginal univariate distributions and the correlation matrix does not necessarily completely specify the joint probability distribution of the random vector, except in the case of jointly distributed normal random variables.
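To make the two steps above a bit more concrete, here is a minimal Python sketch. It computes the usual sample correlation from paired observations and then generates correlated pairs with normal marginals, which is the one case where marginals plus correlations do pin down the joint distribution. The data values, the function names, and the choice of normal marginals are all our assumptions for illustration, not something taken from a model in this book.

```python
import math
import random

def sample_correlation(xs, ys):
    """Usual sample (Pearson) correlation estimator for paired data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def correlated_normal_pair(rng, mu1, sigma1, mu2, sigma2, rho):
    """One draw from a bivariate normal with the given marginals and correlation."""
    z1 = rng.gauss(0.0, 1.0)
    z2 = rng.gauss(0.0, 1.0)
    x1 = mu1 + sigma1 * z1
    # Mixing z1 into the second coordinate induces correlation rho
    x2 = mu2 + sigma2 * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z2)
    return x1, x2

# Hypothetical paired observations (minutes): processing and inspection times
processing = [4.1, 5.3, 3.8, 6.0, 4.7, 5.5]
inspection = [1.9, 2.6, 1.7, 3.1, 2.2, 2.5]
rho_hat = sample_correlation(processing, inspection)

# Hypothetical marginal parameters (means and standard deviations)
rng = random.Random(12345)
pairs = [correlated_normal_pair(rng, 5.0, 0.8, 2.3, 0.5, rho_hat)
         for _ in range(20000)]
rho_check = sample_correlation([p[0] for p in pairs], [p[1] for p in pairs])
print(f"estimated rho = {rho_hat:.3f}, rho of generated pairs = {rho_check:.3f}")
```

Normal marginals can produce negative values, so for real processing times you would likely want different marginals; in that case matching a target correlation takes more work than this sketch shows.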

Stepping up the dimensionality of the input random vector to an infinite number of dimensions, we could think of (a realization of) a stochastic process as being an input driving the simulation model. Models of telecommunications systems sometimes do this, with the input stochastic process representing a stream of packets, each arriving at a specific time, and each being of a specific size; see (Biller and Nelson 2005) for a robust method to fit a very general time-series model for use as an input stream to a simulation.

For more on such “nonstandard” simulation input modeling, see (Kelton 2006) and (Kelton 2009), for example.

6.2.3 Time-Varying Arrival Rate

In many queueing systems, the arrival rate from the outside varies markedly over time. Examples come to mind like fast-food restaurants over a day, emergency rooms over a year (flu season), and urban freeway exchanges over a day. Just as ignoring correlation across inputs can lead to errors in the output results, so too can ignoring nonstationarity in arrivals. Imagine how modeling the freeway exchange with a flat average arrival rate over a 24-hour day, including the arrival rate around 3:00 a.m., would likely badly understate congestion during rush hours (see (Harrod and Kelton 2006) for a numerical example of the substantial error that this produces).

The most common way of representing a time-varying arrival rate is via a nonstationary Poisson process (also called a non-homogeneous Poisson process). Here, the arrival rate is a function \(\lambda(t)\) of simulated time \(t\), instead of a constant flat rate \(\lambda\). The number of arrivals during any time interval \([a, b]\) follows a (discrete) Poisson distribution with mean \(\int_a^b \lambda(t) dt\). Thus, the mean number of arrivals is higher during time intervals where the rate function \(\lambda(t)\) is higher, as desired (assuming equal-duration time intervals, of course). Note that if the arrival rate actually is constant at \(\lambda\), this specializes to a stationary Poisson process at rate \(\lambda\); this is equivalent to an arrival process with interarrival times that are IID exponential RVs with mean \(1/\lambda\).
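To see the mean-count property in action, here is a small Python sketch that generates arrivals from a nonstationary Poisson process by thinning. Thinning is a standard technique that we haven't described here, and we're not claiming it's what Simio does internally. The rate function (10 per hour for four hours, then 40 per hour), the function names, and the seed are our assumptions. With this rate function, the integrals give mean counts of 40 and 160 for the two four-hour intervals.

```python
import random

def nspp_arrivals_by_thinning(rate, rate_max, t_end, rng):
    """Arrival times on [0, t_end] for a rate function with rate(t) <= rate_max."""
    arrivals = []
    t = 0.0
    while True:
        # Candidate arrivals come from a stationary Poisson process at rate_max
        t += rng.expovariate(rate_max)
        if t > t_end:
            return arrivals
        # Keep each candidate with probability rate(t) / rate_max
        if rng.random() <= rate(t) / rate_max:
            arrivals.append(t)

def example_rate(t):
    """Hypothetical rate in arrivals per hour: 10 before hour 4, then 40."""
    return 10.0 if t < 4.0 else 40.0

rng = random.Random(2024)
n_reps = 2000
count_low = 0
count_high = 0
for _ in range(n_reps):
    times = nspp_arrivals_by_thinning(example_rate, 40.0, 8.0, rng)
    count_low += sum(1 for t in times if t < 4.0)
    count_high += sum(1 for t in times if t >= 4.0)

mean_low = count_low / n_reps    # should be near 10 * 4 = 40
mean_high = count_high / n_reps  # should be near 40 * 4 = 160
print(mean_low, mean_high)
```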

Of course, to model a nonstationary Poisson process in a simulation, we need to decide how to use observed data to specify an estimate of the rate function \(\lambda(t)\). This is a topic that’s received considerable research, for example (Kuhl, Sumant, and Wilson 2006) and the references there. One straightforward way to estimate \(\lambda(t)\) is via a piecewise-constant function. Here we assume that, for time intervals of a certain duration (let’s arbitrarily say ten minutes in the freeway-exchange example to make the discussion concrete), the arrival rate actually is constant, but it can jump up or down to a possibly-different level at the end of each ten-minute period. You’d need to have knowledge of the system to know that it’s reasonable to assume a constant rate within each ten-minute period. To specify the level of the rate function over each ten-minute period, just count arrivals during that period, and hopefully average over multiple weekdays for each period separately, for better precision (the arrival rate within an interval need not be an integer). While this piecewise-constant rate-estimation method is relatively simple, it has good theoretical backup, as shown in (Leemis 1991).

Simio supports generating arrivals from such a process in its Source object, where entities arrive to the system, by specifying its Arrival Mode to be Time Varying Arrival Rate. The arrival-rate function is specified separately in a Simio Rate Table. Section 7.3 provides a complete example of implementing a nonstationary Poisson arrival process with a piecewise-constant arrival rate in this way, for a hospital emergency department. Note that in Simio, all rates must be in per-hour units before entering them into the corresponding Rate Table; so if your arrival-rate data are on the number of arrivals during each ten-minute period, you’d first need to multiply these rate estimates for each period by 6 to convert them to per-hour arrival rates.
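The counting, averaging, and unit conversion described above amount to very little arithmetic. Here is a minimal Python sketch, where the counts, the number of days, and the function name are hypothetical and the result is just a list of per-hour rates that you'd then type into a Rate Table.

```python
def piecewise_constant_rates_per_hour(counts_by_day, period_minutes):
    """Average the counts for each period across days, then convert to per-hour rates."""
    n_days = len(counts_by_day)
    n_periods = len(counts_by_day[0])
    periods_per_hour = 60.0 / period_minutes  # 6 for ten-minute periods
    rates = []
    for k in range(n_periods):
        mean_count = sum(day[k] for day in counts_by_day) / n_days
        rates.append(mean_count * periods_per_hour)
    return rates

# Hypothetical arrival counts in four consecutive ten-minute periods,
# observed on three different weekdays (one row per day)
counts_by_day = [
    [12, 18, 25, 31],
    [10, 20, 27, 29],
    [14, 19, 23, 33],
]
rates = piecewise_constant_rates_per_hour(counts_by_day, period_minutes=10)
print(rates)  # [72.0, 114.0, 150.0, 186.0]
```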

6.3 Random-Number Generators

Every stochastic simulation must start at root with a method to “generate” random numbers, a term that in simulation specifically means observations uniformly and continuously distributed between 0 and 1; the random numbers also need to be independent of each other. That’s the ideal, and cannot be literally attained. Instead, people have developed numerical algorithms to produce a stream of values between 0 and 1 that appear to be independent and uniformly distributed. By appear we mean that the generated random numbers satisfy certain provable theoretical conditions (like they’ll not repeat themselves for a very, very long time), as well as pass batteries of tests, both statistical and theoretical, for uniformity and independence. These algorithms are known as random-number generators (RNGs).

You can’t just think up something strange and expect it to work well as a random-number generator. In fact, there’s been a lot of research on building good RNGs, which is much harder than most people think. One classical (though outmoded) method is called the linear congruential generator (LCG), which generates a sequence of integers \(Z_i\) based on the recurrence relation

\[Z_i = (aZ_{i-1} + c) (\textrm{mod}\ m)\]

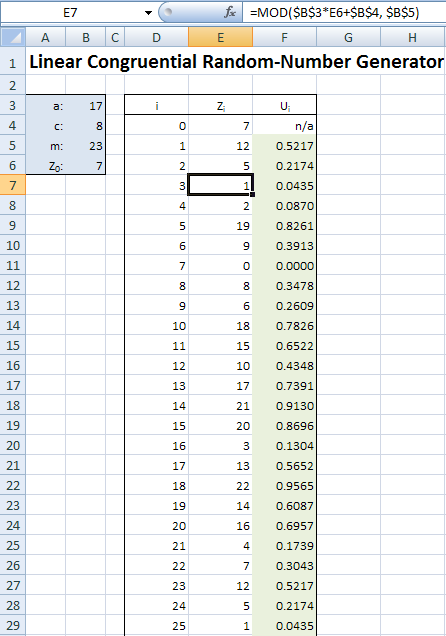

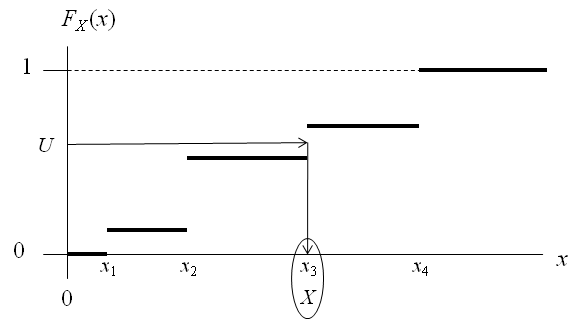

where \(a\), \(c\), and \(m\) are non-negative integer constants (\(a\) and \(m\) must be \(>0\)) that need to be carefully chosen, and we start things off by specifying a seed value \(Z_0 \in \{0, 1, 2, \ldots, m-1\}\). Note that mod \(m\) here means to divide \((aZ_{i-1} + c)\) by \(m\) and set \(Z_i\) to be the remainder of this division (you may need to think back to before your age hit double digits to remember long division and remainders). Because it’s a remainder of a division by \(m\), each \(Z_i\) will be an integer between 0 and \(m-1\), so since we need our random numbers \(U_i\) to be between 0 and 1, we let \(U_i = Z_i/m\); you could divide by \(m-1\) instead, but in practice \(m\) will be quite large so it doesn’t much matter. Figure 6.19 shows part of the Excel spreadsheet Model_06_01.xls (which is available for download as described in Appendix C) that implements this for the first 100 random numbers; Excel has a built-in function =MOD that returns the remainder of long division in column F, as desired.
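If you'd rather see the recurrence in code than in a spreadsheet, here is a minimal Python sketch of the same idea. The parameter values \(a = 5\), \(c = 3\), \(m = 16\), and \(Z_0 = 7\) are small illustrative choices of ours, not necessarily the ones in the spreadsheet, and they're far too small for real use.

```python
def lcg(a, c, m, seed):
    """Yield (Z_i, U_i) pairs from Z_i = (a*Z_{i-1} + c) mod m, with U_i = Z_i / m."""
    z = seed
    while True:
        z = (a * z + c) % m   # remainder after dividing by m
        yield z, z / m        # U_i lies in [0, 1)

# Illustrative parameters only (an assumption for this sketch)
gen = lcg(a=5, c=3, m=16, seed=7)
first_five = [next(gen) for _ in range(5)]
for z, u in first_five:
    print(z, u)
```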

Figure 6.19: A linear congruential random-number generator implemented in the Excel file Model_06_01.xls.

The LCG’s parameters \(a\), \(c\), \(m\), and \(Z_0\) are in cells B3..B6, and the spreadsheet is set up so that you can change them to other values if you’d like, and the whole spreadsheet will update itself automatically. Looking down at the generated \(U_i\) values in column F, it might appear at first that they seem pretty, well, “random” (whatever that means), but looking a little closer should disturb you. Our seed was \(Z_0 = 7\), and it turns out that \(Z_{22}=7\) as well, and after that, the sequence of generated numbers just repeats itself as from the beginning, and in exactly the same order. Try changing \(Z_0\) to other values (we dare you) to try to prevent your seed from reappearing so quickly. As you’ll find out, you can’t. The reason is, there are only so many integer remainders possible with the mod \(m\) operation (\(m\), in fact) so you’re bound to repeat by the time you generate your \(m\)th random number (and it could be sooner than that depending on the values of \(a\), \(c\), and \(m\)); this is called cycling of RNGs, and the length of a cycle is called its period. Other, less obvious issues with LCGs, even if they had long periods, are the appearance of uniformity and independence that we need, which are actually more difficult to achieve and require some fairly deep mathematics involving prime numbers, relatively prime numbers, etc. (number theory).
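You can check cycling numerically with a few lines of Python. This sketch counts how many steps it takes for the seed to reappear; the function name and the parameter values are illustrative assumptions of ours, not the spreadsheet's values. It shows both a case that uses all \(m\) possible remainders and a case that cycles much sooner.

```python
def lcg_period(a, c, m, seed):
    """Steps until the seed Z_0 first reappears; returns 0 if it never does."""
    z = seed
    for steps in range(1, m + 1):   # a repeat must show up within m steps
        z = (a * z + c) % m
        if z == seed:
            return steps
    return 0

# Illustrative parameters only (assumptions for this sketch)
full_period = lcg_period(a=5, c=3, m=16, seed=7)    # visits all 16 remainders
short_period = lcg_period(a=5, c=0, m=16, seed=7)   # cycles after only 4 steps
print(full_period, short_period)

# No seed can do better than m, whatever a and c are
longest = max(lcg_period(5, 3, 16, seed) for seed in range(16))
print(longest)
```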

Several relatively good LCGs were created (i.e., acceptable values of \(a\), \(c\), and \(m\) were found) and used successfully for many years following their 1951 development in (Lehmer 1951), but obviously with far larger values of \(m\) (often \(m = 2^{31} - 1 = 2,147,483,647\), about 2.1 billion or on the order of \(10^9\)). However, computer speed has come a long way since 1951, and LCGs are no longer really serious candidates for high-quality RNGs; for one thing, an LCG with a period even as high as \(10^9\) can be run through its whole cycle in just a few minutes on common personal computers today. So other, different methods have been developed, though many still using the modulo remainder operation internally at points, with far longer periods and much better statistical properties (independence and uniformity). We can’t begin to describe them here; see (L’Ecuyer 2006) for a survey of these methods, and (L’Ecuyer and Simard 2007) for testing RNGs.

Simio’s RNG is the Mersenne twister (Matsumoto and Nishimura 1998) and it has a truly astronomical cycle length (about \(10^{6001}\); by comparison, it’s estimated that the observable universe contains about \(10^{80}\) atoms). And just as importantly, it has excellent statistical properties (tested independence and uniformity up to 623 dimensions). So in Simio, you at least have two fewer things to worry about: running out of random numbers, and generating low-quality random numbers.

As implemented in Simio, the Mersenne twister is divided into a huge number of hugely long streams, which are subsegments of the entire cycle, and it’s essentially impossible for any two streams to overlap. While you can’t access the seed (it’s actually a seed vector), you don’t need to since you can, if you wish, specify the stream to use, as an extra parameter in the distribution specification. For instance, if you wanted to use stream 28 (rather than the default stream 0) for the shifted lognormal that Stat::Fit fitted to our service-time data in Section 6.1, you’d enter 17.4+Random.Lognormal(2.04, 0.672, 28).

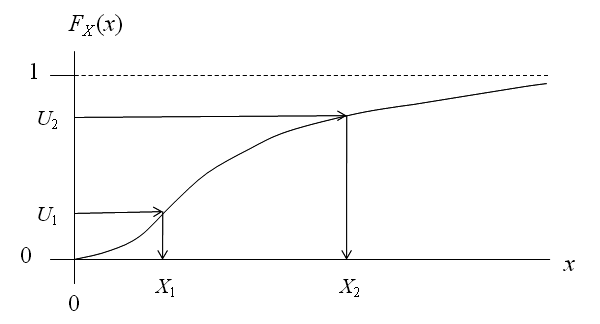

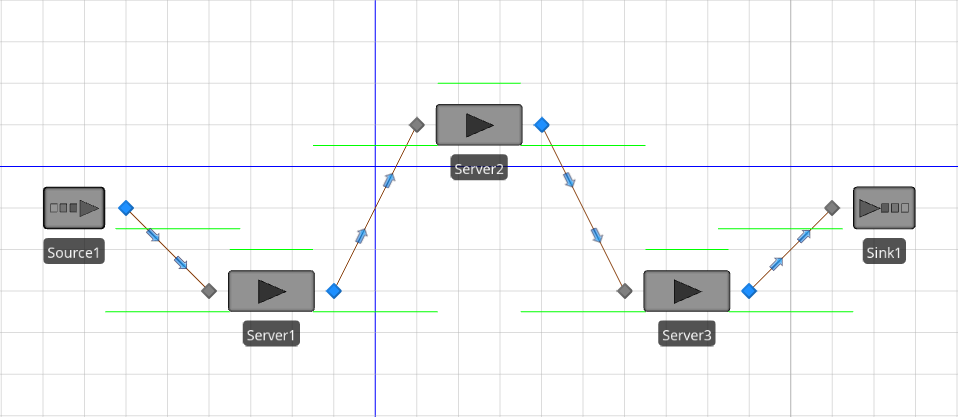

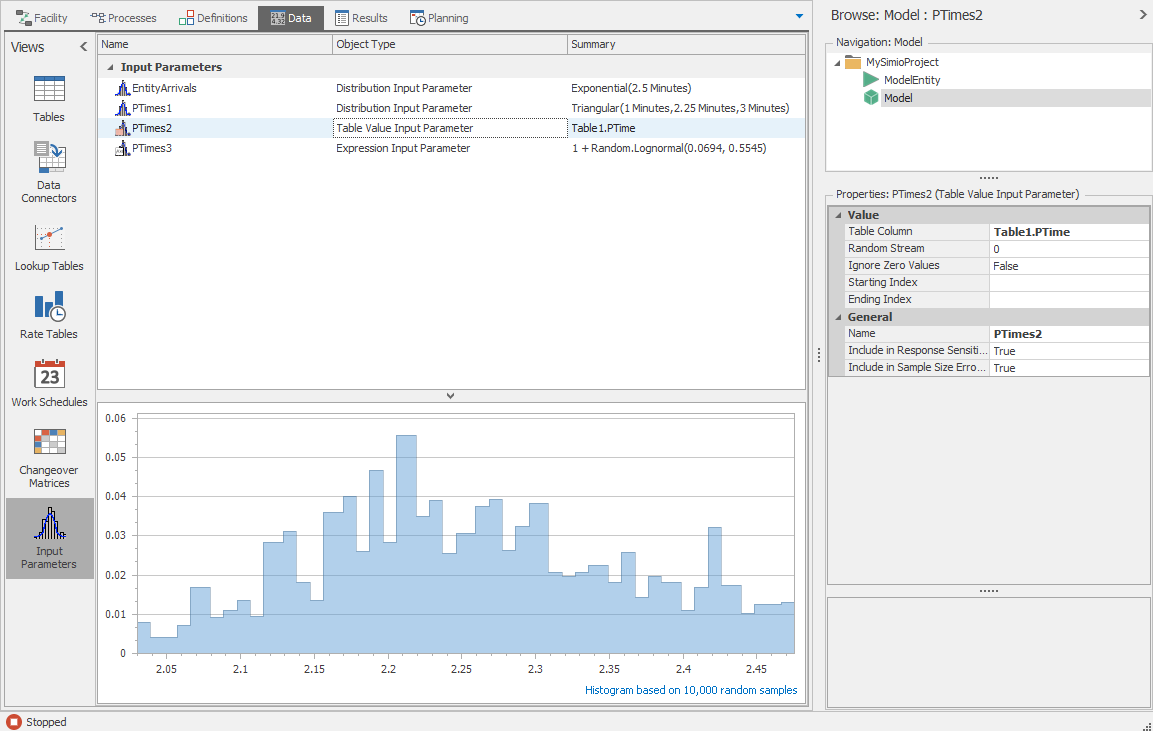

Why would you ever want to do this? One good reason is if you’re comparing alternative scenarios (say, different plant layouts), you’d like to be more confident that output differences you see are due to the differences in the layouts, and not due to just having gotten different random numbers. If you dedicate a separate stream to each input distribution in your model, then when you simulate all the plant-layout scenarios you’re doing a better job of synchronizing the random-number use for the various inputs across the different scenarios, so that there’s a better chance that each layout scenario will “see” the same jobs coming at it at the same times, the processing requirements for the jobs will be the same across the scenarios, etc. This way, you’ve at least partially removed “random bounce” as an explanation of the different results across the alternative scenarios.
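Here is a rough Python sketch of this synchronization idea, outside of Simio. Python's `random` module also uses the Mersenne twister, and we mimic dedicated streams by giving each input its own generator object with its own seed; that's only a stand-in for Simio's stream mechanism, not a description of it. The single-server queue, the two scenarios (mean service times of 1.0 and 0.8), the seeds, and the function name are all assumptions made for illustration.

```python
import random

def simulate_scenario(service_mean, n_jobs, arrival_seed, service_seed):
    """Single-server FIFO queue; returns (average wait in queue, arrival times).

    Interarrival times and service requirements use separate generators,
    so changing the scenario doesn't disturb the arrival pattern.
    """
    arrival_rng = random.Random(arrival_seed)   # "stream" for arrivals
    service_rng = random.Random(service_seed)   # "stream" for service
    clock = 0.0
    server_free_at = 0.0
    total_wait = 0.0
    arrival_times = []
    for _ in range(n_jobs):
        clock += arrival_rng.expovariate(1.0 / 1.25)  # mean 1.25 between arrivals
        arrival_times.append(clock)
        start = max(clock, server_free_at)
        total_wait += start - clock
        # Same underlying draw in every scenario, scaled by the scenario's mean
        server_free_at = start + service_mean * service_rng.expovariate(1.0)
    return total_wait / n_jobs, arrival_times

# Two hypothetical scenarios that differ only in mean service time
wait_a, arrivals_a = simulate_scenario(1.0, 5000, arrival_seed=101, service_seed=202)
wait_b, arrivals_b = simulate_scenario(0.8, 5000, arrival_seed=101, service_seed=202)

print(arrivals_a == arrivals_b)   # both scenarios see the same jobs at the same times
print(wait_a - wait_b)            # difference is due to the scenarios, not the arrivals
```

If both inputs shared a single generator, a change in one scenario that used a different number of draws would shift every later draw, and the two scenarios would stop seeing the same jobs.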